Build an M3 Anvil! Cluster


First off, what is an Anvil!?

In short, it's a system designed to keep servers running through an array of failures, without need for an internet connection.

Think about ship-board computer systems, remote research facilities, factories without dedicated IT staff, un-staffed branch offices and so forth. Where most hosted solutions expect technical staff to be available on short notice, an Anvil! is designed to continue functioning properly for weeks or months with faulty components.

In these cases, the Anvil! system will predict component failure and mitigate automatically. It will adapt to changing threat conditions, like cooling or power loss, including automatic recovery from full power loss. It is designed around the understanding that a fault condition may not be repaired for weeks or months, and can do automated risk analysis and mitigation.

That's an Anvil! cluster!

An Anvil! cluster is designed so that any component in the cluster can fail, be removed and a replacement installed without needing a maintenance window. This includes power, network, compute and management systems.

Components

The minimum configuration needed to host servers on an Anvil! is this;

| Management Layer | |
|---|---|
| Striker Dashboard 1 | Striker Dashboard 2 |
| Anvil! Node | |
| Node 1 | |
| Subnode 1 | Subnode 2 |
| Foundation Pack 1 | |
| Ethernet Switch 1 | Ethernet Switch 2 |
| Switched PDU 1 | Switched PDU 2 |
| UPS 1 | UPS 2 |

With this configuration, you can host as many servers as you would like, limited only by the resources of Node 1 (itself made of a pair of physical subnodes with your choice of processing, RAM and storage resources).

Scaling

To add capacity for hosted servers, individual nodes can be upgraded (online!), and/or additional nodes can be added. There is no hard limit on how many nodes can be in a given cluster.

Each 'Foundation Pack' can handle as many nodes as you'd like, though for reasons we'll explain in more detail later, it is recommended to run two to four nodes per foundation pack.

Management Layer; Striker Dashboards

The management layer, made up of the Striker dashboards, has no hard limit on how many node blocks it can manage. All node blocks record their data to the Strikers (to offload processing and storage loads). There is a practical limit to how many node blocks can use the Strikers, but this can be accounted for in the hardware selected for the dashboards.

Nodes

An Anvil! cluster uses one or more nodes, with each node being a pair of matched physical subnodes configured as a single logical unit. The power of a given node block is set by you and based on the loads you expect to place on it.

There is no hard limit on how many node blocks exist in an Anvil! cluster. Your servers will be deployed across the node blocks and, when you want to add more servers than you currently have resources for, you simply add another node block.

Foundation Packs

A foundation pack is the power and ethernet layer that feeds into one or more node blocks. At its most basic, it consists of three pairs of equipment;

- Two stacked (or VLT-domain'ed) ethernet switches.

- Two switched PDUs (network-switched power bars).

- Two UPSes.

Each UPS feeds one PDU, forming two separate "power rails". Ethernet switches and all sub-nodes are equipped with redundant PSUs, with one PSU fed by each power rail.

In this way, any component in the foundation pack can fault, and all equipment will continue to have power and ethernet resources available. How many Anvil! node-pairs can be run on a given foundation pack is limited only by the sizing of the selected foundation pack equipment.

Configuration

| Note: This is SUPER basic and minimal at this stage. |

Striker Dashboards

Striker dashboards are often described as "cheap and cheerful", generally being a fairly small and inexpensive device, like a Dell Optiplex 3090, Intel NUC, or similar.

You can choose any vendor you wish, but when selecting hardware, be mindful that all ScanCore data is stored in PostgreSQL databases running on each dashboard. As such, we recommend an Intel Core i5 or AMD Ryzen 5 class CPU, 8 GiB or more of RAM, a ~250 GiB SSD (mixed use, decent IOPS) and two ethernet ports.

Striker Dashboards host the Striker web interface, and act as a bridge between your IFN (Internet-Facing Network) and the Anvil! cluster's BCN (Back-Channel Network), which is used for management. As such, they must have a minimum of two ethernet ports.

Node Pairs

An Anvil! Node Pair is made up of two identical physical machines. These two machines act as a single logical unit, providing fault tolerance and automated live migrations of hosted servers to mitigate against predicted hardware faults.

Each sub-node (a single hardware node) must have;

- Redundant PSUs

- Six ethernet ports (eight recommended). If six, use 3x dual-port. If eight, 2x quad port will do.

- Redundant storage; RAID level 1 (mirroring) or level 5 or 6 (striping with parity), with sufficient capacity and IOPS to host the servers that will run on each pair.

- IPMI (out-of-band) management ports. A dedicated network interface is strongly recommended.

- Sufficient CPU core count and core speed for expected hosted servers.

- Sufficient RAM for the expected servers (note that the Anvil! reserves 8 GiB).
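The numeric requirements in this list can be checked with a few lines of shell before ordering hardware. The following is a minimal sketch, not an Anvil! tool; the function name `check_subnode_spec` and its arguments are hypothetical, and it only covers the PSU count, ethernet port count and the 8 GiB RAM reserve mentioned above.

```shell
# Hypothetical helper (not part of the Anvil! tools): check a planned sub-node
# spec against the minimums listed above.
# Arguments: <psu_count> <ethernet_ports> <ram_gib>
check_subnode_spec() {
    local psus="$1" ports="$2" ram_gib="$3"
    local reserved_gib=8   # RAM the Anvil! reserves, per the list above
    local status=0

    if [ "$psus" -lt 2 ]; then
        echo "FAIL: redundant PSUs required (found ${psus})"
        status=1
    fi

    if [ "$ports" -lt 6 ]; then
        echo "FAIL: at least six ethernet ports required (found ${ports})"
        status=1
    elif [ "$ports" -lt 8 ]; then
        echo "NOTE: ${ports} ethernet ports meets the minimum; eight are recommended"
    fi

    if [ "$ram_gib" -le "$reserved_gib" ]; then
        echo "FAIL: ${ram_gib} GiB RAM leaves nothing for servers after the ${reserved_gib} GiB reserve"
        status=1
    else
        echo "RAM available to hosted servers: $(( ram_gib - reserved_gib )) GiB"
    fi

    return "$status"
}

# Example: two PSUs, eight ethernet ports, 64 GiB of RAM.
check_subnode_spec 2 8 64
```

Storage, IPMI and CPU sizing depend on the servers you plan to host, so they are left out of this sketch.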

Disaster Recovery (DR) Host

Optionally, a "third node" of a sort can be added to a node-pair. This is called a DR Host, and should be (but doesn't have to be) identical to the node pair hardware it is extending.

A DR (disaster recovery) Host acts as a remotely hosted "third node" that can be manually pressed into service in a situation where both nodes in a node pair are destroyed. A common example would be a DR Host being in another building on a campus installation, or on the far side of the building / vessel.

A DR host can in theory be in another city, but storage replication speeds and latency need to be considered. Storage replication between the sub-nodes of a node pair is synchronous, whereas replication to DR can be asynchronous. However, storage loads must be considered to ensure that replication can keep up with the rate of data change.
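As a rough way to reason about whether a link to a DR host can keep up, the average rate of data change can be converted into a required link speed. This is a back-of-the-envelope sketch only; the function names are hypothetical, and it assumes the change is spread evenly over 24 hours, ignoring write bursts, latency and protocol overhead.

```shell
# Hypothetical helpers (not part of the Anvil! tools).

# Prints the average link rate, in Mbit/s, needed to replicate the given
# amount of changed data per day. Assumes the change is spread evenly.
# Arguments: <changed_gib_per_day>
required_mbps() {
    awk -v gib="$1" 'BEGIN {
        bits_per_day = gib * 1024 * 1024 * 1024 * 8
        printf "%.1f\n", bits_per_day / 86400 / 1000000
    }'
}

# Succeeds if the link speed covers the average rate of change.
# Arguments: <changed_gib_per_day> <link_mbps>
link_keeps_up() {
    awk -v need="$(required_mbps "$1")" -v have="$2" 'BEGIN { exit !(have >= need) }'
}

# Example: 100 GiB of changed data per day over a 50 Mbit/s link.
echo "Average rate needed: $(required_mbps 100) Mbit/s"
if link_keeps_up 100 50; then
    echo "Link covers the average rate of change"
else
    echo "Link is too slow for the average rate of change"
fi
```

Because real write loads are bursty, treat the result as a lower bound and leave generous headroom.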

Foundation Pack Equipment

The Anvil! is, fundamentally, hardware agnostic. That said, the hardware you select must be configured to meet the Anvil! requirements.

As we are hardware agnostic, we've created three linked pages. As we validate hardware ourselves, we will expand hardware-specific configuration guides. If you've configured foundation pack equipment not in the pages below, and you are willing, we would love to add your configuration instructions to our list.

Striker, Node and DR Host Configuration

In UEFI (BIOS), configure;

- Striker Dashboards to power on after power loss in all cases.

- Subnodes to stay powered off after power loss in all cases.

- Any machines with redundant PSUs to balance the load across PSUs (don't use "hot spare" mode, where only one PSU is active and carries the full load).

If using RAID

- If you have two drives, configure RAID level 1 (mirroring)

- If using 3 to 8 drives, configure RAID level 5 (striping with N-1 parity)

- If using 9+ drives, configure RAID level 6 (striping with N-2 parity)

Note that a server on a given node-pair will have its data mirrored between the two sub-nodes, effectively creating a sort of RAID level 11 (mirror of mirrors), 15 (mirror of N-1 stripes) or 16 (mirror of N-2 stripes). This is why we're comfortable pushing RAID level 5 to 8 disks.
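The drive-count rules above can be written down as a small lookup. This is an illustrative sketch; the function names `suggest_raid_level` and `usable_drives` are hypothetical and not part of the Anvil! tools.

```shell
# Hypothetical helpers (not part of the Anvil! tools).

# Prints the RAID level suggested above for a given number of drives.
# Arguments: <drive_count>
suggest_raid_level() {
    local drives="$1"
    if [ "$drives" -lt 2 ]; then
        echo "RAID needs at least two drives" >&2
        return 1
    elif [ "$drives" -eq 2 ]; then
        echo 1
    elif [ "$drives" -le 8 ]; then
        echo 5
    else
        echo 6
    fi
}

# Prints how many drives' worth of capacity stay usable in one sub-node's array.
# Arguments: <drive_count>
usable_drives() {
    local drives="$1" level
    level=$(suggest_raid_level "$drives") || return 1
    case "$level" in
        1) echo 1 ;;
        5) echo $(( drives - 1 )) ;;
        6) echo $(( drives - 2 )) ;;
    esac
}

# Example: six 2 TiB drives in each sub-node.
echo "RAID level: $(suggest_raid_level 6), usable: $(( $(usable_drives 6) * 2 )) TiB"
```

Since the two sub-nodes mirror each other, the usable capacity of the node pair is that of a single sub-node's array, not the sum of both.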

Installation of Base OS

For all three machine types (Striker dashboards, node-pair sub-nodes and DR hosts), begin with a minimal RHEL 8 or CentOS Stream 8 install.

| Note: This tutorial assumes an existing understanding of installing RHEL 8. If you are new to RHEL, you can set up a free Red Hat account, and then follow their installation guide. |

Base OS Install

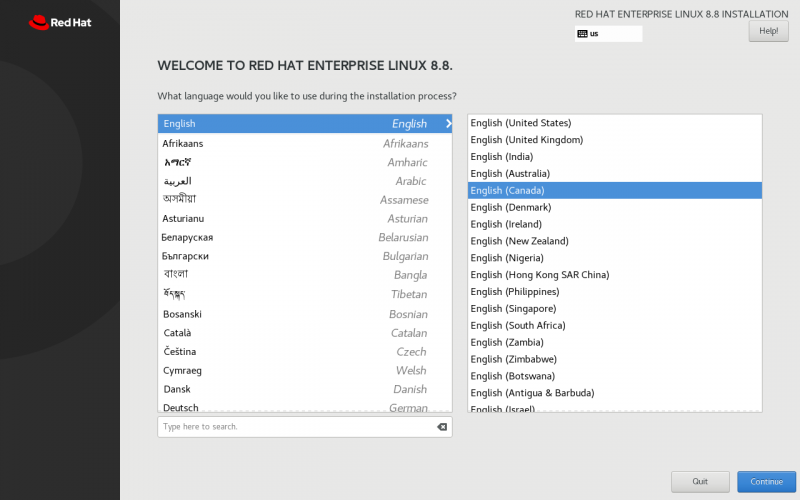

| Note: Every effort has been made in the development of the Anvil! to ensure it will work with localisations. However, parsing of command output has been tested with Canadian and American English. As such, it is recommended that you install using one of these localisations. If you use a different localisation, and run into any problems, please let us know and we will try to add support. |

Localisation

Choose your localisation;

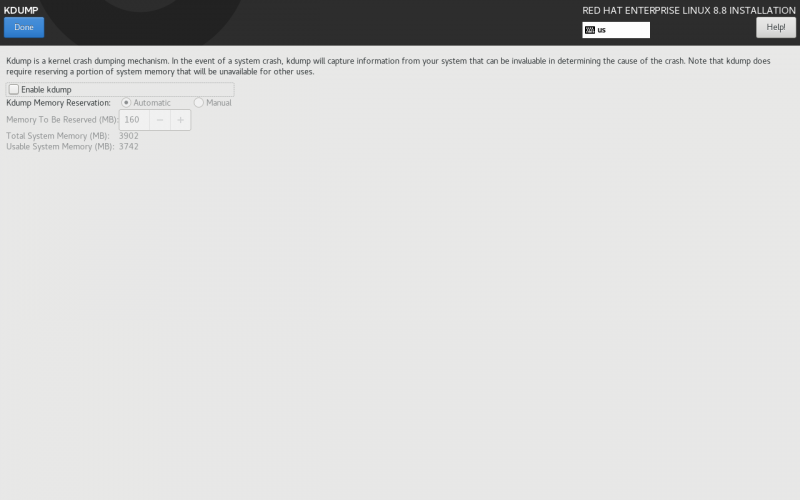

KDUMP

Disable kdump. This prevents kernel dumps from being collected if the OS crashes, but it means the host will recover faster. If you want to leave kdump enabled, that is fine, but be aware of the slower recovery times. Note that a subnode getting fenced will be forced off, and so kernel dumps won't be collected regardless of this configuration.

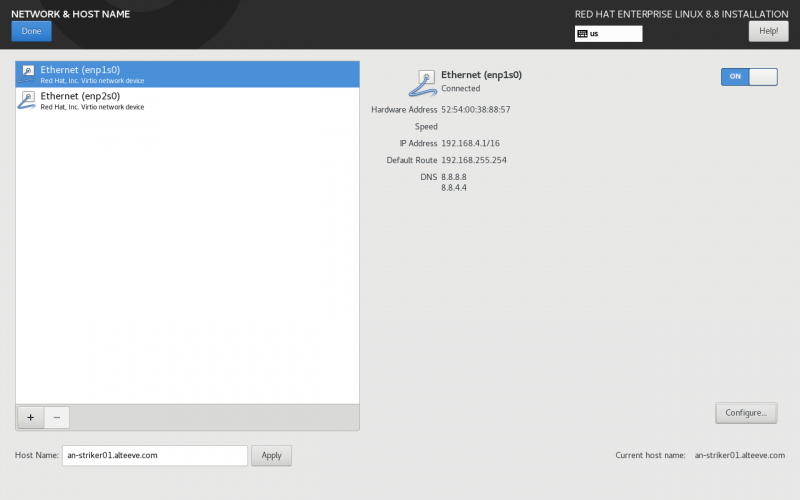

Network & Host Name

Set the host name for the machine. It's useful to do this before configuring storage, so that the volume group name includes the host's short host name. This doesn't affect the operation of the Anvil! system, but it can assist with debugging down the road.

| Note: Don't worry about configuring the network, this will be handled by the Anvil! later. Setting the IFN IP at this stage can be useful, but is not required. |

Time & Date

Setting the timezone is very much specific to you and your install. The most important part is that the time zone is set consistently across all machines in the Anvil! cluster.
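Once the machines are installed, the consistency requirement can be verified by comparing the zone each host reports. The sketch below is an assumption-laden illustration; the function name `timezones_consistent` is hypothetical, and it expects one `host timezone` pair per line. On RHEL 8, the zone for a single host can be read with `timedatectl show --property=Timezone --value`.

```shell
# Hypothetical helper (not part of the Anvil! tools).
# Reads "host timezone" pairs on stdin, one per line, and succeeds only if
# every host reports the same time zone.
timezones_consistent() {
    awk '
        { zones[$2]++ }
        END {
            count = 0
            for (zone in zones) count++
            exit (count == 1 ? 0 : 1)
        }
    '
}

# Example with made-up host names and zones.
if printf '%s\n' \
    "striker01 America/Toronto" \
    "subnode01 America/Toronto" \
    "subnode02 America/Toronto" | timezones_consistent; then
    echo "Time zones match"
else
    echo "Time zones differ between hosts"
fi
```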

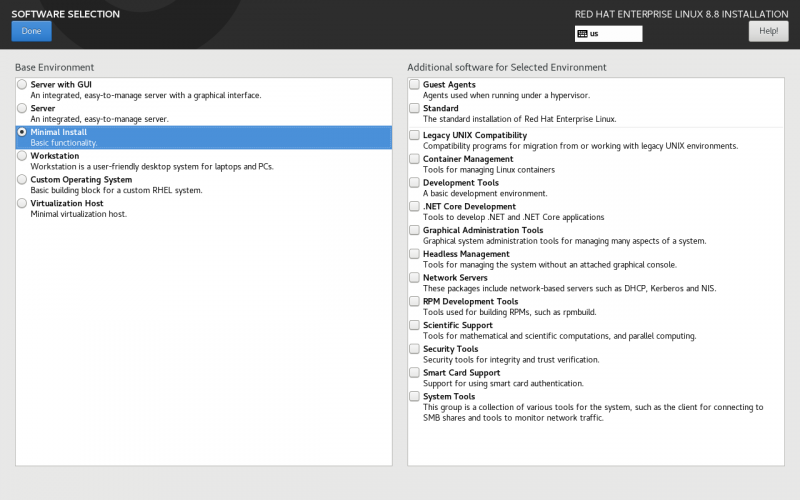

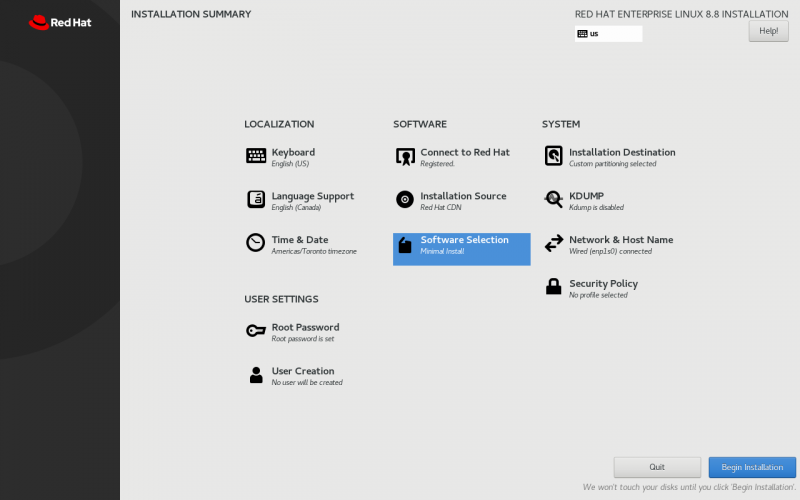

Software Selection

All machines can start with a Minimal Install. On Strikers, if you'd prefer to use Server With GUI, that is fine, but it is not needed at this step. The anvil-striker RPM will pull in the graphical interface.

| Note: If you select a graphical install on a Striker Dashboard, create a user called admin and set a password for that user. |

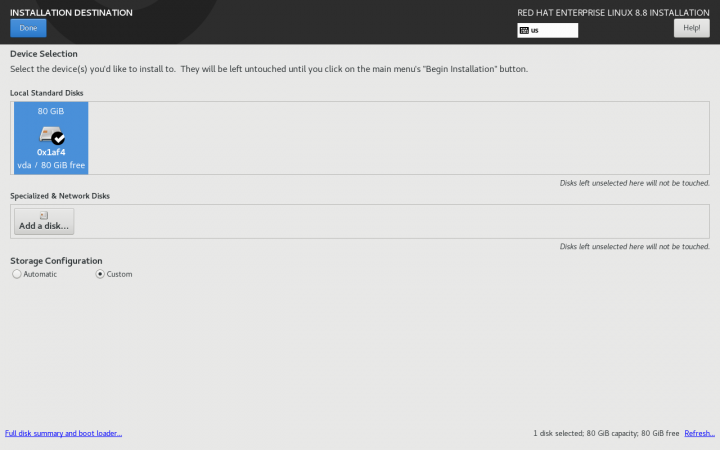

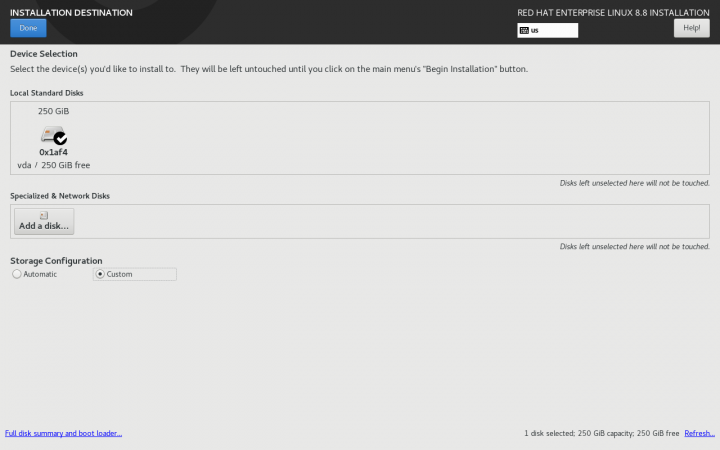

Installation Destination

| Note: It is strongly suggested to set the host name before configuring storage. |

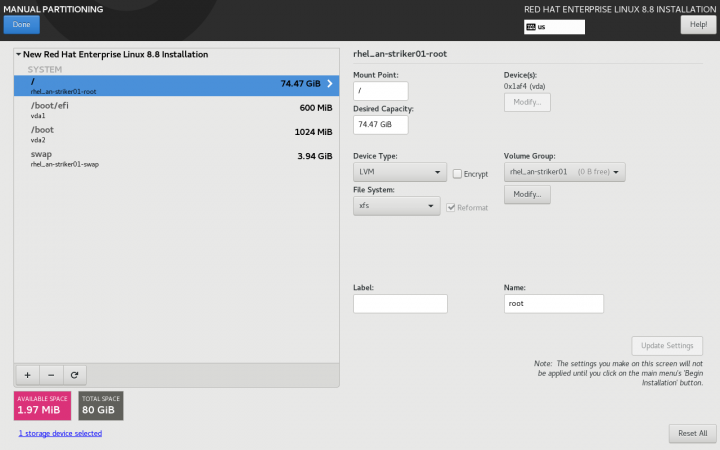

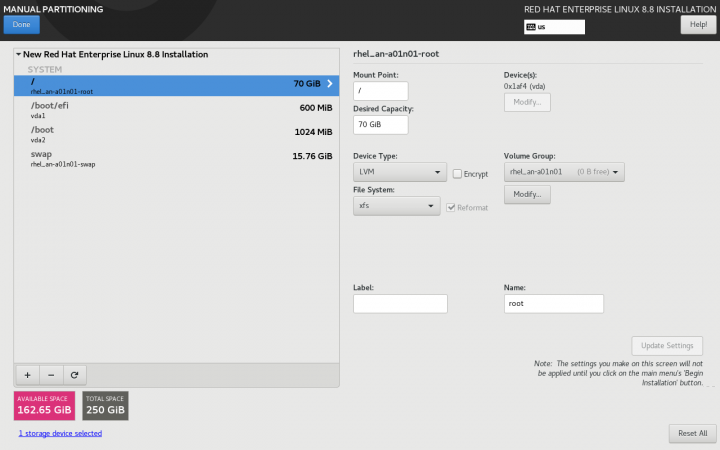

| Note: This is where the installation of a Striker dashboard will differ from an Anvil! Node's sub-node or DR host |

In this example, there is a single hard drive that will be configured. It's entirely valid to have a dedicated OS drive, and use a second drive for hosting servers. If you're planning to use a different storage plan, then you can ignore this stage. The key requirement is that there is unused space sufficiently large to host the servers you plan to run on a given node or DR host.

| Striker Dashboards | Anvil! Subnodes and DR Hosts |

|---|---|

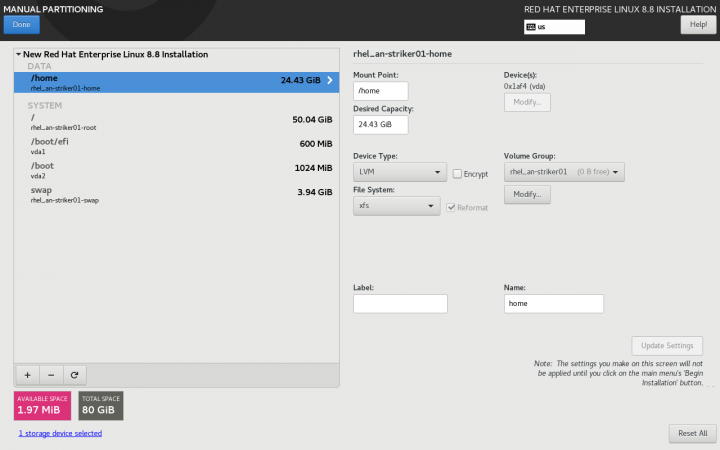

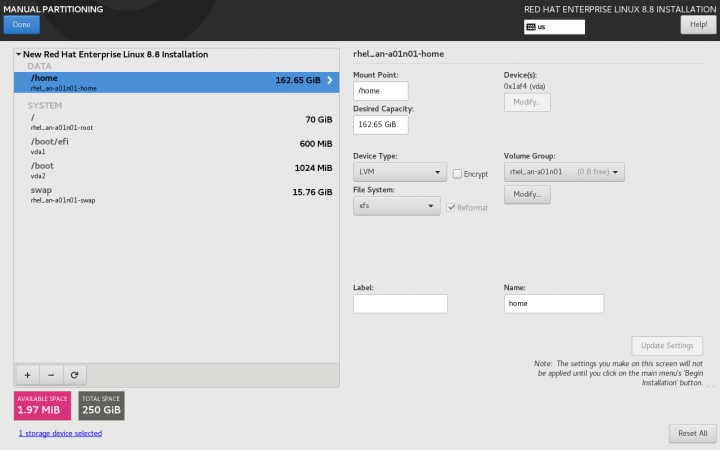

Click on "Click here to create them automatically". This will create the base storage configuration, which we will adapt.


In all cases, the auto-created /home logical volume will be deleted.

- For Striker dashboards, after deleting /home, assign the freed space to the / partition. To do this, select the / partition, set the Desired Capacity to a size much larger than what is available (like 1 TiB), and click on Update Settings. The size will change to the largest valid value.

- For Anvil! subnodes and DR hosts, simply delete the /home partition, and do not give the free space to /. The space freed up by deleting /home will be used later for hosting servers.


From this point forward, the rest of the OS install is the same for all systems.

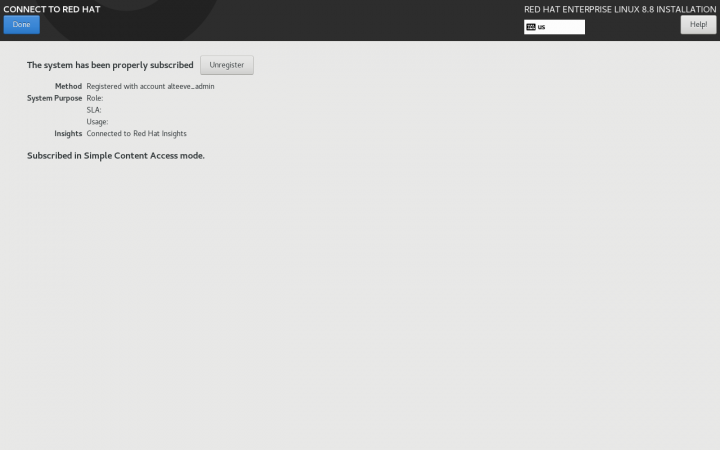

Optional; Connect to Red Hat

If you are installing RHEL 8, as opposed to CentOS Stream 8, you can register the server during installation. If you don't do this, the Anvil! will give you a chance to register the server during the installation process also.

Root Password

Set the root user password.

Begin Installation

With everything selected, click on Begin Installation. When the install has completed, reboot into the minimal install.

Post OS Install Configuration

Setting up the Alteeve repos is the same on all machines, but after that, the steps start to diverge depending on which machine type we're setting up in the Anvil! cluster.

Installing the Alteeve Repo

There are two Alteeve repositories that you can install; Community and Enterprise. Which one is used is selected after the repository RPM is installed. Let's install the repo RPM, and then we will discuss the differences before we select one.

dnf install https://alteeve.com/an-repo/el8/alteeve-el8-repo-latest.noarch.rpm

Updating Subscription Management repositories.

Last metadata expiration check: 21:14:12 ago on Thu 27 Jul 2023 07:00:28 PM EDT.

alteeve-el8-repo-latest.noarch.rpm 37 kB/s | 10 kB 00:00

Dependencies resolved.

==================================================================================================================================

Package Architecture Version Repository Size

==================================================================================================================================

Installing:

alteeve-el8-repo noarch 0.2-1 @commandline 10 k

Transaction Summary

==================================================================================================================================

Install 1 Package

Total size: 10 k

Installed size: 3.6 k

Is this ok [y/N]:

Downloading Packages:

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Installing : alteeve-el8-repo-0.2-1.noarch 1/1

Verifying : alteeve-el8-repo-0.2-1.noarch 1/1

Installed products updated.

Installed:

alteeve-el8-repo-0.2-1.noarch

Complete!

Selecting a Repository

There are two released versions of the Anvil! cluster. There are pros and cons to both options;

Community Repo

The Community repository is the free repo that anyone can use. As new builds pass our CI/CD test infrastructure, the versions in this repository are automatically built.

This repository always has the latest and greatest from Alteeve. We use Jenkins and a proprietary test suite to ensure that the quality of the releases is excellent. Of course, Alteeve is a company of humans, and there's always a small chance that a bug could get through. Our free community repository is community supported, and it's our wonderful users who help us improve and refine our Anvil! platform.

Enterprise Repo

The Enterprise repository is the paid-access repository. The releases in the enterprise repo are "cherry picked" by Alteeve, and subjected to more extensive testing and QA. This repo is designed for businesses who want the most stable releases.

Using this repo opens up the option of active monitoring of your Anvil! cluster by Alteeve, also!

If you choose to get the Enterprise repo, please contact us and we will provide you with a custom repository key.

Configuring the Alteeve Repo

Enabling the Alteeve Repo

On all machines, post OS install, add the Anvil! repo;

dnf -y install https://www.alteeve.com/an-repo/m3/anvil-release-latest.noarch.rpm

Once installed, you need to enable the repository.

| Repository Options | |
|---|---|
| Community | This is free, and it gets the latest updates generated by our CI/CD testing infrastructure. |
| Commercial | This is paid and includes a support contract. Updates are curated for maximum reliability. |

If you purchase a commercial support agreement, you will be provided a key to access the commercial repository.

To enable the Anvil! repository, run either:

| Repository Options | |
|---|---|
| Community | alteeve-repo-setup |
| Commercial | alteeve-repo-setup -k <key> |

Now update the OS;

dnf update

If the kernel was updated, it is recommended (but not required) to reboot now.

In all cases, the Anvil! will rename network interfaces. For this to work, the biosdevname package needs to be removed.

dnf remove biosdevname

Now, we can install the anvil RPM. This next step is what defines a given machine as a Striker, Node or DR Host. As such, be careful to install the right one for the machine you're configuring.

| Install the Anvil! RPM | |
|---|---|
| Striker Dashboard | dnf install anvil-striker |
| Node | dnf install anvil-node |
| DR Host | dnf install anvil-dr |
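Because the final package decides what role a machine takes, it can help to print the whole post-install sequence for a machine and review it before running anything. The sketch below only echoes the commands already shown in this section and runs none of them; the function name `anvil_post_install_plan` and the role keywords `striker`, `node` and `dr` are hypothetical.

```shell
# Hypothetical helper (not part of the Anvil! tools).
# Prints, without running them, the post-install commands from this section.
# Arguments: <striker|node|dr> [commercial_repo_key]
anvil_post_install_plan() {
    local role="$1" key="${2:-}" pkg

    case "$role" in
        striker) pkg="anvil-striker" ;;
        node)    pkg="anvil-node" ;;
        dr)      pkg="anvil-dr" ;;
        *)
            echo "unknown role: ${role} (expected striker, node or dr)" >&2
            return 1
            ;;
    esac

    echo "dnf -y install https://www.alteeve.com/an-repo/m3/anvil-release-latest.noarch.rpm"
    if [ -n "$key" ]; then
        echo "alteeve-repo-setup -k ${key}"   # commercial repository
    else
        echo "alteeve-repo-setup"             # community repository
    fi
    echo "dnf update"
    echo "dnf remove biosdevname"
    echo "dnf install ${pkg}"
}

# Example: show the steps for a sub-node using the community repository.
anvil_post_install_plan node
```

Printing the plan first keeps the role choice visible, which matters because installing the wrong RPM defines the machine as the wrong type.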

| Any questions, feedback, advice, complaints or meanderings are welcome. | ||||

| Us: Alteeve's Niche! | Support: Mailing List | IRC: #clusterlabs on Libera Chat | ||

| © Alteeve's Niche! Inc. 1997-2023 | Anvil! "Intelligent Availability™" Platform | |||

| legal stuff: All info is provided "As-Is". Do not use anything here unless you are willing and able to take responsibility for your own actions. | ||||