Difference between revisions of "Build an M3 Anvil! Cluster"

| (61 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

{{howto_header}} | {{howto_header}} | ||

{{warning|1=This is a work in progress document. While this header is here, please do not consider this article complete or accurate.}} | |||

First off, what is an [[Anvil!]]? | |||

In short, it's a system designed to keep servers running through an array of failures, without need for an internet connection. | |||

Think about ship-board computer systems, remote research facilities, factories without dedicated IT staff, un-staffed branch offices and so forth. Where most hosted solutions expect technical staff to be available in short order, an Anvil! is designed to continue functioning properly for weeks or months with faulty components. | |||

In these cases, the Anvil! system will predict component failure and mitigate automatically. It will adapt to changing threat conditions, like cooling or power loss, including automatic recovery from full power loss. It is designed around the understanding that a fault condition may not be repaired for weeks or months, and can do automated risk analysis and mitigation. | |||

That's an Anvil! cluster! | That's an Anvil! cluster! | ||

An Anvil! cluster is designed so that any component in the cluster can fail, be removed and a replacement installed without needing a maintenance window. This includes power, network, compute and management systems. | |||

= Components = | = Components = | ||

| Line 21: | Line 27: | ||

|style="text-align:center;"| [[Striker]] Dashboard 2 | |style="text-align:center;"| [[Striker]] Dashboard 2 | ||

|- | |- | ||

!colspan="2"| Anvil! Node | |||

|- | |- | ||

|style="text-align:center;" colspan="2"| ''Node 1'' | |||

|- | |- | ||

|style="text-align:center;"| Subnode 1 | |||

|style="text-align:center;"| Subnode 2 | |||

|- | |- | ||

! colspan="2"| Foundation Pack 1 | ! colspan="2"| Foundation Pack 1 | ||

| Line 40: | Line 46: | ||

|} | |} | ||

With this configuration, you can host as many servers as you would like, limited only by the resources of ''Node 1'' (itself made of a pair of physical nodes with your choice of processing, RAM and storage resources). | |||

= Scaling = | = Scaling = | ||

To add capacity for hosted servers, individual nodes can be upgraded (online!), and/or additional nodes can be added. There is no hard limit on how many nodes can be in a given cluster. | |||

Each 'Foundation Pack' can handle as many nodes as you'd like, though for reasons we'll explain in more detail later, it is recommended to run two to four nodes per foundation pack. | |||

== Management Layer; Striker Dashboards == | == Management Layer; Striker Dashboards == | ||

| Line 50: | Line 58: | ||

The management layer, the Striker dashboards, have no hard limit on how many ''Node Blocks'' they can manage. All node-blocks record their data to the Strikers (to offload processing and storage loads). There is a practical limit to how many node blocks can use the Strikers, but this can be accounted for in the hardware selected for the dashboards. | The management layer, the Striker dashboards, have no hard limit on how many ''Node Blocks'' they can manage. All node-blocks record their data to the Strikers (to offload processing and storage loads). There is a practical limit to how many node blocks can use the Strikers, but this can be accounted for in the hardware selected for the dashboards. | ||

== Nodes == | |||

An ''Anvil!'' cluster uses one or more nodes, with each node being a pair of matched physical subnodes configured as a single logical unit. The power of a given node block is set by you and based on the loads you expect to place on it. | |||

There is no hard limit on how many node blocks exist in an Anvil! cluster. Your servers will be deployed across the node blocks and, when you want to add more servers than you currently have resources for, you simply add another node block. | |||

| Line 124: | Line 132: | ||

Note that a server on a given node-pair will have its data mirrored, effectively creating a sort of RAID level 11 (mirror of mirrors), 15 (mirror of N-1 stripes) or 16 (mirror of N-2 stripes). This is why we're comfortable pushing RAID level 5 to 8 disks. | |||

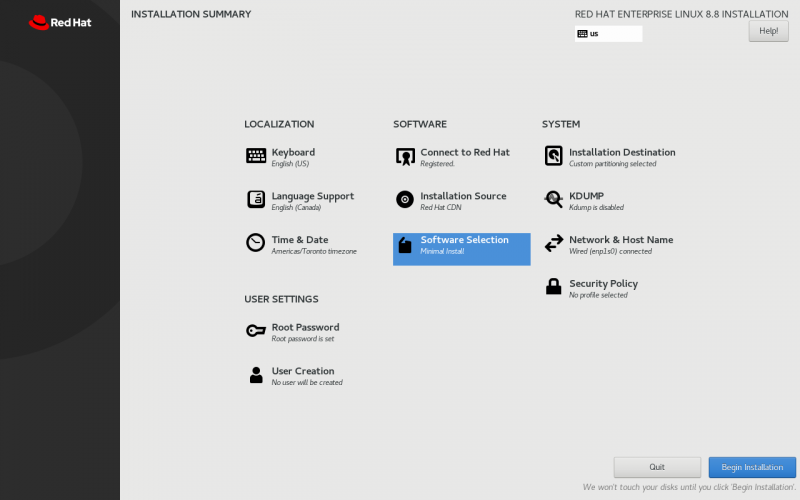

= Installation of Base OS = | |||

For all three machine types (Striker dashboards, node-pair subnodes and DR hosts), begin with a minimal [[RHEL]] 8 or [[CentOS Stream]] 8 install. | |||

{{note|1=This tutorial assumes an existing understanding of installing [[RHEL 8]]. If you are new to RHEL, you can set up a free Red Hat account, and then follow their [https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/performing_a_standard_rhel_8_installation/index installation guide].}} | |||

== Base OS Install == | |||

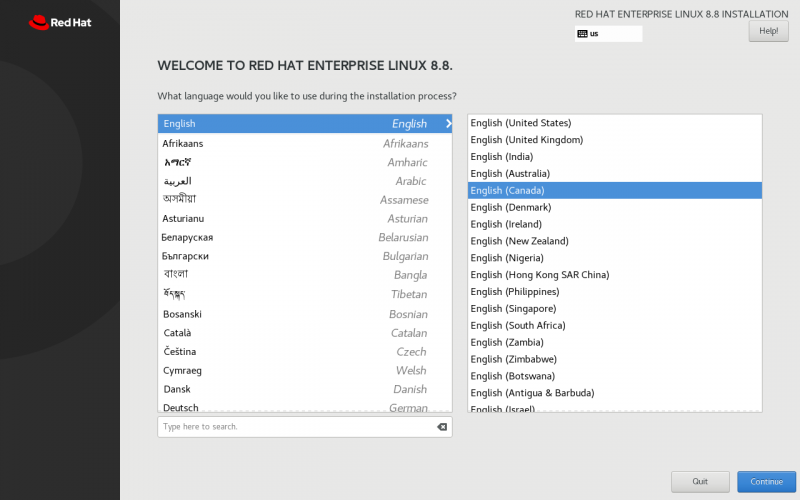

{{note|1=Every effort has been made in the development of the Anvil! to ensure it will work with localisations. However, parsing of command output has been tested with Canadian and American English. As such, it is recommended that you install using one of these localisations. If you use a different localisation, and run into any problems, please [[Anvil! Support|let us know]] and we will try to add support.}} | |||

=== Localisation === | |||

Choose your localisation; | |||

[[image:an-striker01-rhel8-m3-os-install-01.png|thumb|center|800px|Localisation selection.]] | |||
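Once the OS is installed, the chosen localisation can be checked from the shell. The following is a minimal sketch, assuming the locale is exposed through the standard <span class="code">LANG</span> environment variable; the helper names are illustrative and are not part of the Anvil! tools.

<syntaxhighlight lang="bash">
# Sketch only: helper names are hypothetical, not Anvil! commands.

# Succeeds when the given locale is Canadian or American English, the two
# localisations the note above says have been tested.
locale_is_tested() {
    case "$1" in
        en_CA*|en_US*) return 0 ;;
        *)             return 1 ;;
    esac
}

# Report on the locale of the current shell session.
check_session_locale() {
    if locale_is_tested "${LANG:-}"; then
        echo "Locale '${LANG}' is one of the tested localisations."
    else
        echo "Locale '${LANG:-unset}' has not been tested with the Anvil!."
    fi
}
</syntaxhighlight>

Calling <span class="code">check_session_locale</span> on each freshly installed machine prints one line describing the result.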

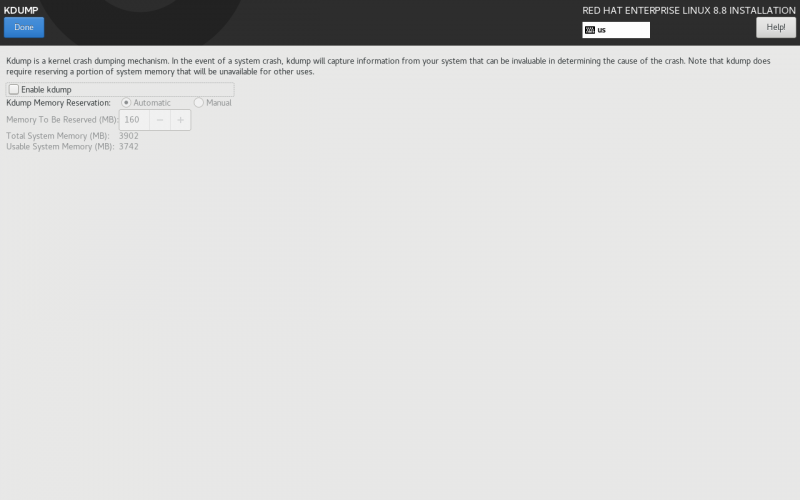

=== KDUMP === | |||

Disable <span class="code">kdump</span>. This prevents kernel dumps from being collected if the OS crashes, but it means the host will recover faster. If you want to leave <span class="code">kdump</span> enabled, that is fine, but be aware of the slower recovery times. Note that a subnode getting [[fenced]] will be forced off, and so kernel dumps won't be collected regardless of this configuration. | |||

[[image:an-striker01-rhel8-m3-os-install-02.png|thumb|center|800px|Disable kdump.]] | |||
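If this installer step was missed, <span class="code">kdump</span> can also be checked and turned off after the first boot. The following is a minimal sketch, assuming the stock RHEL 8 <span class="code">kdump</span> systemd service; the function names are illustrative and are not part of the Anvil! tools.

<syntaxhighlight lang="bash">
# Sketch only: helper names are hypothetical, not Anvil! commands.

# Decide what to do from the text printed by 'systemctl is-enabled kdump'.
kdump_action() {
    case "$1" in
        enabled|enabled-runtime) echo "disable" ;;
        *)                       echo "leave" ;;
    esac
}

# Stop kdump now and keep it off on future boots, but only if it is enabled.
disable_kdump_if_enabled() {
    local state
    # 'is-enabled' exits non-zero when the unit is disabled, so ignore that.
    state="$(systemctl is-enabled kdump 2>/dev/null || true)"
    if [ "$(kdump_action "$state")" = "disable" ]; then
        systemctl disable --now kdump
    fi
}
</syntaxhighlight>

Run <span class="code">disable_kdump_if_enabled</span> as <span class="code">root</span>; it does nothing when the service is already disabled or not installed.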

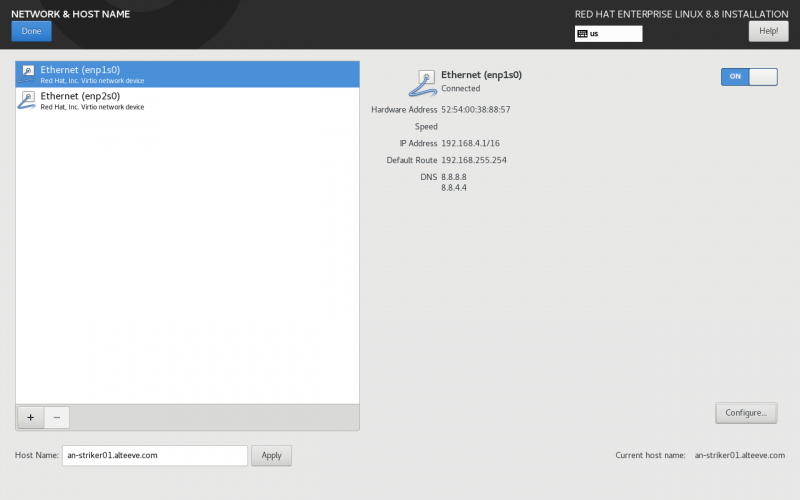

=== Network & Host Name === | |||

Set the host name for the machine. It's useful to do this ''before'' configuring the network, so that the [[volume group]] name includes the host's short host name. This doesn't affect the operation of the Anvil! system, but it can assist with debugging down the road. | |||

{{note|1=Don't worry about configuring the network; this will be handled by the Anvil! later. Setting the [[IFN]] IP at this stage can be useful, but is not required.}} | |||

[[image:an-striker01-rhel8-m3-os-install-03.png|thumb|center|800px|Set the host name.]] | |||
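After the install, you can confirm that the volume group name picked up the host name. The following is a minimal sketch using the standard <span class="code">hostname</span> and LVM <span class="code">vgs</span> commands. The helper names are illustrative, and the exact volume group name the installer generates is an assumption, so this only checks that the short host name appears somewhere in it.

<syntaxhighlight lang="bash">
# Sketch only: helper names are hypothetical, not Anvil! commands.

# Succeeds when the volume group name contains the short host name.
vg_name_has_host() {
    local vg_name="$1" short_host="$2"
    [ -n "$short_host" ] || return 1
    case "$vg_name" in
        *"$short_host"*) return 0 ;;
        *)               return 1 ;;
    esac
}

# Print each local volume group and whether its name includes the host name.
check_vg_names() {
    local short_host vg
    short_host="$(hostname -s)"
    vgs --noheadings -o vg_name | while read -r vg; do
        [ -z "$vg" ] && continue
        if vg_name_has_host "$vg" "$short_host"; then
            echo "$vg: includes '$short_host'"
        else
            echo "$vg: does not include '$short_host'"
        fi
    done
}
</syntaxhighlight>

Run <span class="code">check_vg_names</span> as <span class="code">root</span>. A volume group that does not include the host name is harmless; as noted above, the name only helps with debugging.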

=== Time & Date === | |||

Setting the timezone is very much specific to you and your install. The most important part is that the time zone is set consistently across all machines in the Anvil! cluster. | |||

[[image:an-striker01-rhel8-m3-os-install-04.png|thumb|center|800px|Setting the timezone consistently on all Anvil! cluster systems.]] | |||
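Once all machines are installed, timezone consistency can be checked from any one of them. The following is a minimal sketch, assuming <span class="code">root</span> SSH access between the machines and the <span class="code">timedatectl show</span> subcommand shipped with RHEL 8. The helper names are illustrative, and the host names in the usage line are placeholders.

<syntaxhighlight lang="bash">
# Sketch only: helper names are hypothetical, not Anvil! commands.

# Reads "host timezone" pairs on stdin and succeeds only when every host
# reports the same timezone as the first one.
timezones_consistent() {
    local host tz first=""
    while read -r host tz; do
        [ -z "$host" ] && continue
        if [ -z "$first" ]; then
            first="$tz"
        elif [ "$tz" != "$first" ]; then
            echo "mismatch: $host uses '$tz', expected '$first'" >&2
            return 1
        fi
    done
    return 0
}

# Print "host timezone" for each host name given as an argument.
collect_timezones() {
    local host
    for host in "$@"; do
        echo "$host $(ssh "root@$host" timedatectl show --property=Timezone --value)"
    done
}
</syntaxhighlight>

For example, <span class="code">collect_timezones an-striker01 an-striker02 | timezones_consistent</span> returns success only when both placeholder hosts report the same timezone.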

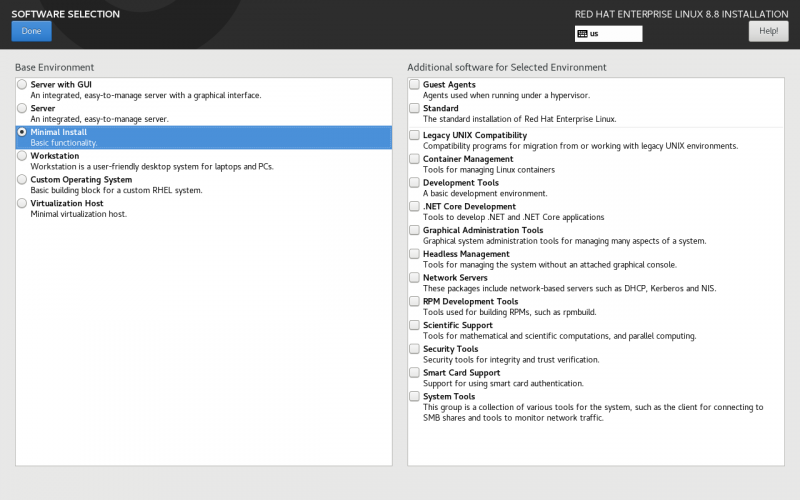

=== Software Selection === | |||

All machines can start with a ''Minimal Install''. On Strikers, if you'd prefer to use ''Server With GUI'', that is fine, but it is not needed at this step. The <span class="code">anvil-striker</span> [[RPM]] will pull in the graphical interface. | |||

{{note|1=If you select a graphical install on a Striker Dashboard, create a user called <span class="code">admin</span> and set a password for that user.}} | |||

[[image:an-striker01-rhel8-m3-os-install-05.png|thumb|center|800px|Selecting the ''Minimal Install''.]] | |||

= | === Installation Destination === | ||

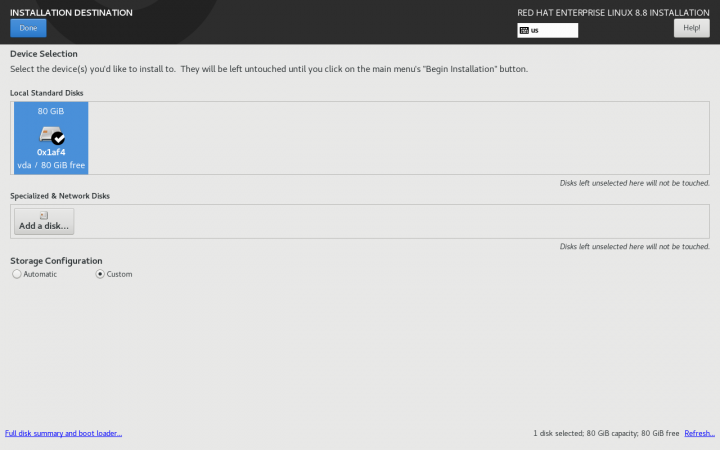

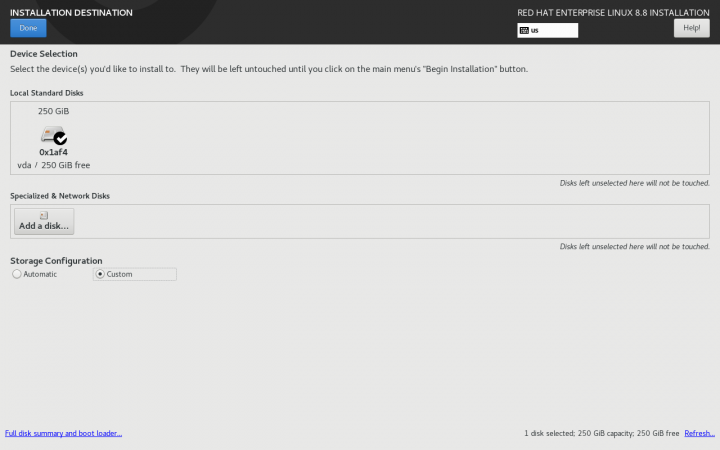

{{note|1=It is strongly suggested to set the host name before configuring storage.}} | |||

{{note|1=This is where the installation of a Striker dashboard will differ from an Anvil! Node's sub-node or DR host}} | |||

In this example, there is a single hard drive that will be configured. It's entirely valid to have a dedicated OS drive, and to use a second drive for hosting servers. If you're planning to use a different storage plan, then you can ignore this stage. The key requirement is that there is unused space sufficiently large to host the servers you plan to run on a given node or DR host. | |||

{| | |||

!class="code"|Striker Dashboards | |||

!class="code"|Anvil! Subnodes and DR Hosts | |||

|- | |- | ||

|[[image:an-striker01-rhel8-m3-os-install-06.png|thumb|center|720px|Striker Dashboard Drive Selection.]] | |||

|[[image:an-striker01-rhel8-m3-os-install-07.png|thumb|center|720px|Subnode or DR Host Drive Selection.]] | |||

|} | |||

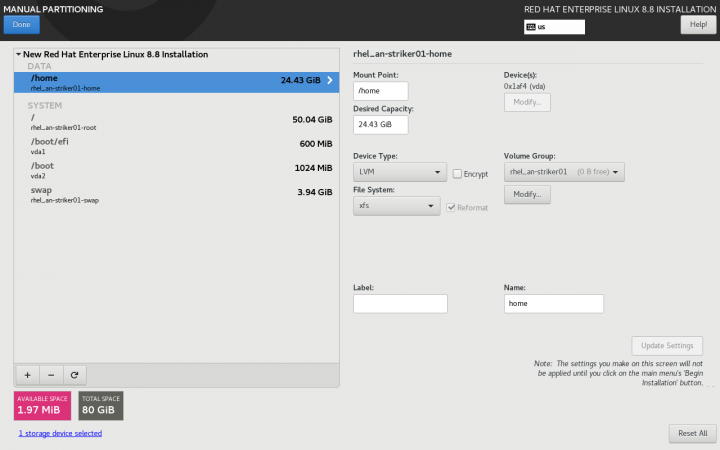

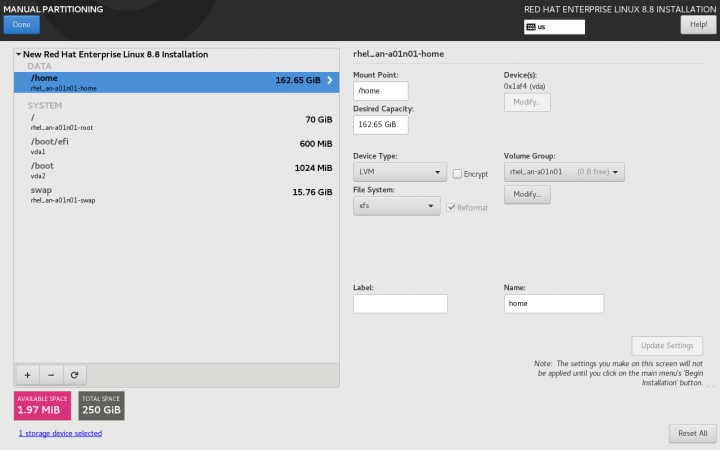

Click on ''Click here to create them automatically''. This will create the base storage configuration, which we will adapt. | |||

{| | |||

!class="code"|Striker Dashboards | |||

!class="code"|Anvil! Subnodes and DR Hosts | |||

|- | |- | ||

|[[image:an-striker01-rhel8-m3-os-install-08.png|thumb|center|720px|Striker Dashboard auto-configured disk layout.]] | |||

|[[image:an-striker01-rhel8-m3-os-install-09.png|thumb|center|720px|Subnode or DR Host auto-configured disk layout.]] | |||

|} | |||

In all cases, the auto-created <span class="code">/home</span> [[logical volume]] will be deleted. | |||

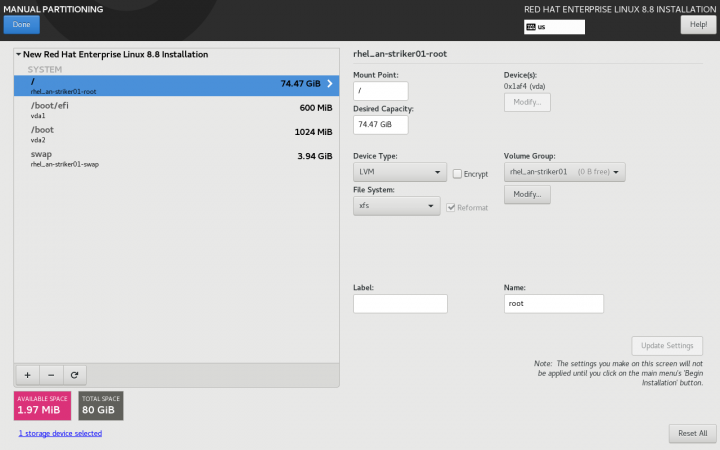

* For Striker dashboards, after deleting <span class="code">/home</span>, assign the freed space to the <span class="code">/</span> partition. To do this, select the <span class="code">/</span> partition, and set the ''Desired Capacity'' to some much larger size than is available (like <span class="code">1TiB</span>), and click on ''Update Setting''. The size will change to the largest valid value. | |||

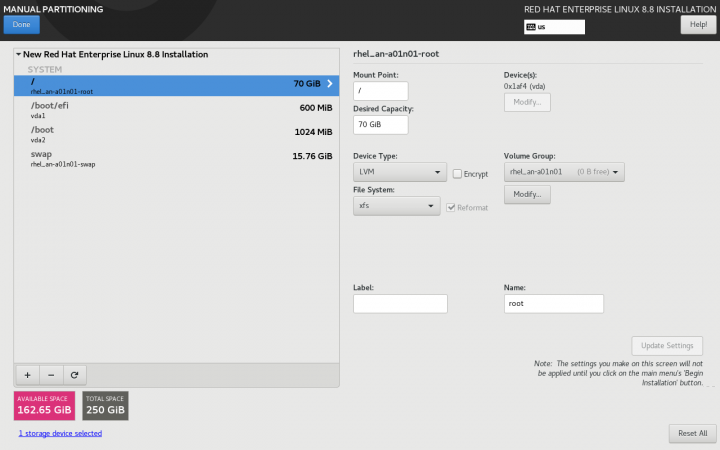

* For Anvil! subnodes and DR hosts, simply delete the <span class="code">/home</span> partition, and '''do not''' give the free space to <span class="code">/</span>. The space freed up by deleting <span class="code">/home</span> will be used later for hosting servers. | |||

{| | |||

!class="code"|Striker Dashboards | |||

!class="code"|Anvil! Subnodes and DR Hosts | |||

|- | |- | ||

|[[image:an-striker01-rhel8-m3-os-install-10.png|thumb|center|720px|Striker Dashboard /home LV deleted.]] | |||

|[[image:an-striker01-rhel8-m3-os-install-11.png|thumb|center|720px|Subnode or DR Host /home LV deleted.]] | |||

|} | |} | ||
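After the install, the unused space left on a subnode or DR host can be confirmed with the LVM <span class="code">vgs</span> command. The following is a minimal sketch; the helper names and the required size passed in are assumptions for illustration, not values from the Anvil! tools.

<syntaxhighlight lang="bash">
# Sketch only: helper names are hypothetical, not Anvil! commands.

# Succeeds when the free space (in bytes) is at least the required GiB.
vg_has_room() {
    local free_bytes="$1" required_gib="$2"
    [ "$free_bytes" -ge $(( required_gib * 1024 * 1024 * 1024 )) ]
}

# For each volume group, report whether it has the requested unused space.
report_vg_free() {
    local required_gib="$1" vg free
    vgs --noheadings --units b --nosuffix -o vg_name,vg_free |
    while read -r vg free; do
        [ -z "$vg" ] && continue
        if vg_has_room "$free" "$required_gib"; then
            echo "$vg: at least ${required_gib} GiB unused"
        else
            echo "$vg: less than ${required_gib} GiB unused"
        fi
    done
}
</syntaxhighlight>

For example, <span class="code">report_vg_free 100</span>, run as <span class="code">root</span>, checks for 100 GiB of unused space. On a Striker dashboard the free space is expected to be near zero, because it was given to <span class="code">/</span>.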

If you | From this point forward, the rest of the OS install is the same for all systems. | ||

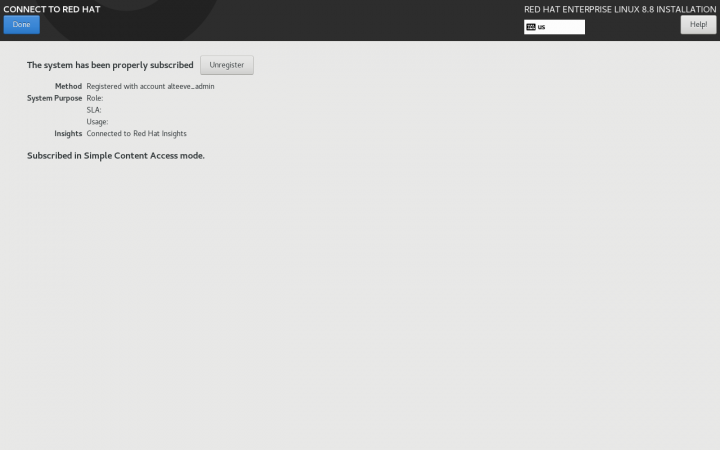

=== Optional; Connect to Red Hat === | |||

If you are installing RHEL 8, as opposed to CentOS Stream 8, you can register the server during installation. If you don't do this, the Anvil! will also give you a chance to register the server during its own installation process. | |||

[[image:an-striker01-rhel8-m3-os-install-12.png|thumb|center|720px|Registering the system with Red Hat.]] | |||

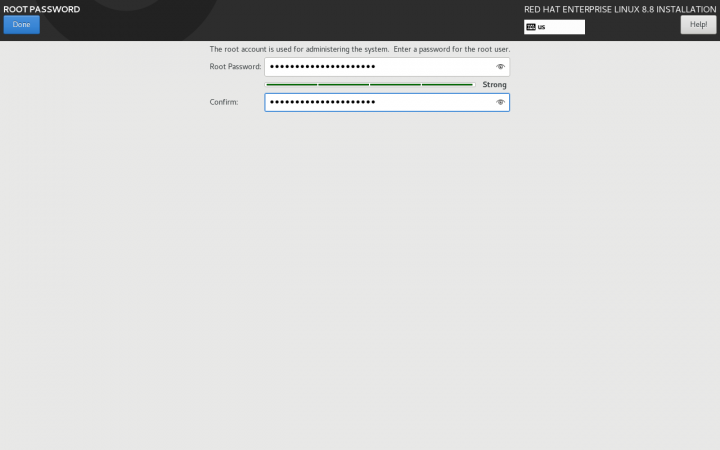

=== Root Password === | |||

Set the <span class="code">root</span> user password. | |||

[[image:an-striker01-rhel8-m3-os-install-13.png|thumb|center|720px|Setting the root user password.]] | |||

=== Begin Installation === | |||

With everything selected, click on ''Begin Installation''. When the install has completed, reboot into the minimal install. | |||

[[image:an-striker01-rhel8-m3-os-install-14.png|thumb|center|800px|Ready to install!]] | |||


= Post OS Install Configuration = | |||

Setting up the [[Alteeve]] repos is the same on every machine, but after that, the steps start to diverge depending on which machine type we're setting up in the Anvil! cluster. | |||

== Installing the Alteeve Repo == | |||

{{note|1=Our repo pulls in a bunch of other packages that will be needed shortly.}} | |||

There are two Alteeve repositories that you can install; [[Community]] and [[Enterprise]]. Which is used is selected after the repository RPM is installed. Let's install the repo RPM, and then we will discuss the differences before we select one. | |||

<syntaxhighlight lang="bash"> | |||

dnf install https://alteeve.com/an-repo/m3/alteeve-release-latest.noarch.rpm | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Updating Subscription Management repositories. | |||

Last metadata expiration check: 0:39:42 ago on Fri 28 Jul 2023 04:21:39 PM EDT. | |||

anvil-release-latest.noarch.rpm 59 kB/s | 12 kB 00:00 | |||

Dependencies resolved. | |||

================================================================================================================================== | |||

Package Arch Version Repository Size | |||

================================================================================================================================== | |||

Installing: | |||

alteeve-release noarch 0.1-2 @commandline 12 k | |||

Installing dependencies: | |||

dwz x86_64 0.12-10.el8 rhel-8-for-x86_64-appstream-rpms 109 k | |||

efi-srpm-macros noarch 3-3.el8 rhel-8-for-x86_64-appstream-rpms 22 k | |||

ghc-srpm-macros noarch 1.4.2-7.el8 rhel-8-for-x86_64-appstream-rpms 9.4 k | |||

go-srpm-macros noarch 2-17.el8 rhel-8-for-x86_64-appstream-rpms 13 k | |||

make x86_64 1:4.2.1-11.el8 rhel-8-for-x86_64-baseos-rpms 498 k | |||

ocaml-srpm-macros noarch 5-4.el8 rhel-8-for-x86_64-appstream-rpms 9.5 k | |||

openblas-srpm-macros noarch 2-2.el8 rhel-8-for-x86_64-appstream-rpms 8.0 k | |||

perl x86_64 4:5.26.3-422.el8 rhel-8-for-x86_64-appstream-rpms 73 k | |||

<...snip...> | |||

perl-version x86_64 6:0.99.24-1.el8 rhel-8-for-x86_64-appstream-rpms 67 k | |||

python-rpm-macros noarch 3-45.el8 rhel-8-for-x86_64-appstream-rpms 16 k | |||

python-srpm-macros noarch 3-45.el8 rhel-8-for-x86_64-appstream-rpms 16 k | |||

python3-pyparsing noarch 2.1.10-7.el8 rhel-8-for-x86_64-baseos-rpms 142 k | |||

python3-rpm-macros noarch 3-45.el8 rhel-8-for-x86_64-appstream-rpms 15 k | |||

qt5-srpm-macros noarch 5.15.3-1.el8 rhel-8-for-x86_64-appstream-rpms 11 k | |||

redhat-rpm-config noarch 131-1.el8 rhel-8-for-x86_64-appstream-rpms 91 k | |||

rust-srpm-macros noarch 5-2.el8 rhel-8-for-x86_64-appstream-rpms 9.3 k | |||

systemtap-sdt-devel x86_64 4.8-2.el8 rhel-8-for-x86_64-appstream-rpms 88 k | |||

unzip x86_64 6.0-46.el8 rhel-8-for-x86_64-baseos-rpms 196 k | |||

zip x86_64 3.0-23.el8 rhel-8-for-x86_64-baseos-rpms 270 k | |||

Installing weak dependencies: | |||

perl-Encode-Locale noarch 1.05-10.module+el8.3.0+6498+9eecfe51 rhel-8-for-x86_64-appstream-rpms 22 k | |||

perl-IO-Socket-SSL noarch 2.066-4.module+el8.3.0+6446+594cad75 rhel-8-for-x86_64-appstream-rpms 298 k | |||

perl-Mozilla-CA noarch 20160104-7.module+el8.3.0+6498+9eecfe51 rhel-8-for-x86_64-appstream-rpms 15 k | |||

perl-TermReadKey x86_64 2.37-7.el8 rhel-8-for-x86_64-appstream-rpms 40 k | |||

Enabling module streams: | |||

perl 5.26 | |||

perl-IO-Socket-SSL 2.066 | |||

perl-libwww-perl 6.34 | |||

Transaction Summary | |||

================================================================================================================================== | |||

Install 159 Packages | |||

Total size: 23 M | |||

Total download size: 23 M | |||

Installed size: 64 M | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Is this ok [y/N]: | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Downloading Packages: | |||

(1/158): perl-Scalar-List-Utils-1.49-2.el8.x86_64.rpm 173 kB/s | 68 kB 00:00 | |||

(2/158): perl-Data-Dumper-2.167-399.el8.x86_64.rpm 147 kB/s | 58 kB 00:00 | |||

(3/158): perl-PathTools-3.74-1.el8.x86_64.rpm 155 kB/s | 90 kB 00:00 | |||

(4/158): perl-threads-shared-1.58-2.el8.x86_64.rpm 127 kB/s | 48 kB 00:00 | |||

(5/158): perl-Unicode-Normalize-1.25-396.el8.x86_64.rpm 431 kB/s | 82 kB 00:00 | |||

(6/158): zip-3.0-23.el8.x86_64.rpm 896 kB/s | 270 kB 00:00 | |||

(7/158): perl-MIME-Base64-3.15-396.el8.x86_64.rpm 101 kB/s | 31 kB 00:00 | |||

(8/158): perl-Pod-Simple-3.35-395.el8.noarch.rpm 879 kB/s | 213 kB 00:00 | |||

<...snip...> | |||

(156/158): python-srpm-macros-3-45.el8.noarch.rpm 65 kB/s | 16 kB 00:00 | |||

(157/158): perl-open-1.11-422.el8.noarch.rpm 818 kB/s | 78 kB 00:00 | |||

(158/158): perl-utils-5.26.3-422.el8.noarch.rpm 269 kB/s | 129 kB 00:00 | |||

---------------------------------------------------------------------------------------------------------------------------------- | |||

Total 1.6 MB/s | 23 MB 00:14 | |||

Running transaction check | |||

Transaction check succeeded. | |||

Running transaction test | |||

Transaction test succeeded. | |||

Running transaction | |||

Preparing : 1/1 | |||

Installing : perl-Digest-1.17-395.el8.noarch 1/159 | |||

Installing : perl-Digest-MD5-2.55-396.el8.x86_64 2/159 | |||

Installing : perl-Data-Dumper-2.167-399.el8.x86_64 3/159 | |||

Installing : perl-libnet-3.11-3.el8.noarch 4/159 | |||

Installing : perl-Net-SSLeay-1.88-2.module+el8.6.0+13392+f0897f98.x86_64 5/159 | |||

<...snip...> | |||

Installing : perl-CPAN-2.18-397.el8.noarch 156/159 | |||

Installing : perl-Encode-devel-4:2.97-3.el8.x86_64 157/159 | |||

Installing : perl-4:5.26.3-422.el8.x86_64 158/159 | |||

Installing : alteeve-release-0.1-2.noarch 159/159 | |||

Running scriptlet: alteeve-release-0.1-2.noarch 159/159 | |||

Verifying : perl-Scalar-List-Utils-3:1.49-2.el8.x86_64 1/159 | |||

Verifying : perl-PathTools-3.74-1.el8.x86_64 2/159 | |||

Verifying : perl-Data-Dumper-2.167-399.el8.x86_64 3/159 | |||

Verifying : perl-threads-shared-1.58-2.el8.x86_64 4/159 | |||

Verifying : perl-Encode-4:2.97-3.el8.x86_64 5/159 | |||

<...snip...> | |||

Verifying : python-srpm-macros-3-45.el8.noarch 155/159 | |||

Verifying : perl-SelfLoader-1.23-422.el8.noarch 156/159 | |||

Verifying : perl-open-1.11-422.el8.noarch 157/159 | |||

Verifying : perl-utils-5.26.3-422.el8.noarch 158/159 | |||

Verifying : alteeve-release-0.1-2.noarch 159/159 | |||

Installed products updated. | |||

Installed: | |||

alteeve-release-0.1-2.noarch dwz-0.12-10.el8.x86_64 | |||

efi-srpm-macros-3-3.el8.noarch ghc-srpm-macros-1.4.2-7.el8.noarch | |||

<...snip...> | |||

redhat-rpm-config-131-1.el8.noarch rust-srpm-macros-5-2.el8.noarch | |||

systemtap-sdt-devel-4.8-2.el8.x86_64 unzip-6.0-46.el8.x86_64 | |||

zip-3.0-23.el8.x86_64 | |||

Complete! | |||

</syntaxhighlight> | |||

== Selecting a Repository == | |||

There are two released versions of the Anvil! cluster. There are pros and cons to both options; | |||

=== Community Repo === | |||

The ''Community'' repository is the free repo that anyone can use. As new builds pass our [[CI/CD]] test infrastructure, the versions in this repository are automatically built. | |||

This repository always has the latest and greatest from Alteeve. We use Jenkins and a proprietary test suite to ensure that the quality of the releases is excellent. Of course, Alteeve is a company of humans, and there's always a small chance that a bug could get through. Our free community repository is community supported, and it's our wonderful users who help us improve and refine our Anvil! platform. | |||

=== Enterprise Repo === | |||

The ''Enterprise'' repository is the paid-access repository. The releases in the enterprise repo are "cherry picked" by Alteeve, and subjected to more extensive testing and QA. This repo is designed for businesses who want the most stable releases. | |||

Using this repo opens up the option of active monitoring of your Anvil! cluster by Alteeve, also! | |||

If you choose to get the Enterprise repo, please [[contact us]] and we will provide you with a custom repository key. | |||

== Configuring the Alteeve Repo == | |||

To configure the repo, we will use the <span class="code">alteeve-repo-setup</span> program that was just installed. | |||

You can see a full list of options, including the use of <span class="code">--key <uuid></span> to enable the Enterprise repo. For this tutorial, we will configure the Community repo. | |||

<syntaxhighlight lang="bash"> | |||

alteeve-repo-setup | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

You have not specified an Enterprise repo key. This will enable the community | |||

repository. We work quite hard to make it as stable as we possibly can, but it | |||

does lead Enterprise. | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Proceed? [y/N]: | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Writing: [/etc/yum.repos.d/alteeve-anvil.repo]... | |||

Repo: [rhel-8] created successfuly. | |||

This is RHEL 8. Once subscribed, please enable this repo; | |||

# subscription-manager repos --enable codeready-builder-for-rhel-8-x86_64-rpms | |||

NOTE: On *nodes*, also add the High-Availability Addon repo as well; | |||

# subscription-manager repos --enable rhel-8-for-x86_64-highavailability-rpms | |||

</syntaxhighlight> | |||

If you are installing using CentOS Stream 8, you are done now and can move on. | |||

=== RHEL 8 Additional Repos === | |||

If you are using RHEL 8 proper, with the system now subscribed, we need to enable additional repositories. | |||

On all systems; | |||

<syntaxhighlight lang="bash"> | |||

subscription-manager repos --enable codeready-builder-for-rhel-8-x86_64-rpms | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Repository 'codeready-builder-for-rhel-8-x86_64-rpms' is enabled for this system. | |||

</syntaxhighlight> | |||

On Anvil! '''subnodes only''', not Striker dashboards or DR hosts. | |||

<syntaxhighlight lang="bash"> | |||

subscription-manager repos --enable rhel-8-for-x86_64-highavailability-rpms | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Repository 'rhel-8-for-x86_64-highavailability-rpms' is enabled for this system. | |||

</syntaxhighlight> | |||
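The per-role repository rules above can be sketched as a small helper. This is a minimal example under stated assumptions: the role names (<span class="code">striker</span>, <span class="code">node</span>, <span class="code">dr</span>) and the function names are illustrative and not part of the Anvil! tools. Only the two repository IDs and the <span class="code">subscription-manager repos --enable</span> call come from the steps above.

<syntaxhighlight lang="bash">
# Sketch only: role names and helper names are hypothetical.

# Print the additional RHEL 8 repositories needed for a machine role.
rhel8_repos_for_role() {
    case "$1" in
        striker|dr)
            echo "codeready-builder-for-rhel-8-x86_64-rpms"
            ;;
        node)
            # Subnodes also need the High-Availability Add-On repository.
            echo "codeready-builder-for-rhel-8-x86_64-rpms"
            echo "rhel-8-for-x86_64-highavailability-rpms"
            ;;
        *)
            echo "unknown role: $1" >&2
            return 1
            ;;
    esac
}

# Enable every repository required for the given role.
enable_rhel8_repos() {
    local repos repo
    repos="$(rhel8_repos_for_role "$1")" || return 1
    for repo in $repos; do
        subscription-manager repos --enable "$repo"
    done
}
</syntaxhighlight>

For example, <span class="code">enable_rhel8_repos node</span>, run as <span class="code">root</span> on a subscribed subnode, enables both repositories.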

== Installing Anvil! Packages == | |||

This is the step where, from a software perspective, Anvil! cluster systems differentiate to become Striker Dashboards, Anvil! subnodes, and DR hosts. Which a given machine becomes depends on which [[RPM]] is installed. The three RPMs that set a machine's role are; | |||

{| | |||

!style="text-align: right;"|Striker Dashboards: | |||

|class="code"|anvil-striker | |||

|- | |||

!style="text-align: right;"|Anvil! Subnodes: | |||

|class="code"|anvil-node | |||

|- | |||

!style="text-align: right;"|DR Hosts: | |||

|class="code"|anvil-dr | |||

|} | |||

=== Removing biosdevname === | |||

{{note|1=When M3 is ported to [[EL9]], the network interfaces will no longer be renamed in the same manner, and removing <span class="code">biosdevname</span> will no longer be required.}} | |||

Before we can install these RPMs, though, we need to remove the package called <span class="code">biosdevname</span>. Anvil! [[M3]] on [[EL8]] renames the network interfaces to reflect their role. By default, network interfaces are named using predictive names based on their physical location in the machine. Removing this package disables that feature and allows us to rename the interfaces. | |||

<syntaxhighlight lang="bash"> | |||

dnf remove biosdevname | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Updating Subscription Management repositories. | |||

Dependencies resolved. | |||

-================================================================================================================================= | |||

Package Architecture Version Repository Size | |||

-================================================================================================================================= | |||

Removing: | |||

biosdevname x86_64 0.7.3-2.el8 @rhel-8-for-x86_64-baseos-rpms 67 k | |||

Transaction Summary | |||

-================================================================================================================================= | |||

Remove 1 Package | |||

Freed space: 67 k | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Is this ok [y/N]: | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Running transaction check | |||

Transaction check succeeded. | |||

Running transaction test | |||

Transaction test succeeded. | |||

Running transaction | |||

Preparing : 1/1 | |||

Erasing : biosdevname-0.7.3-2.el8.x86_64 1/1 | |||

Running scriptlet: biosdevname-0.7.3-2.el8.x86_64 1/1 | |||

Verifying : biosdevname-0.7.3-2.el8.x86_64 1/1 | |||

Installed products updated. | |||

Removed: | |||

biosdevname-0.7.3-2.el8.x86_64 | |||

Complete! | |||

</syntaxhighlight> | |||

=== Striker Dashboards; Installing anvil-striker === | |||

Now we're ready to install! | |||

{{note|1=Given the install of the OS was minimal, these RPMs pull in a ''lot'' of RPMs. The output below is truncated.}} | |||

Thus, let's install the RPMs on our systems. | |||

<syntaxhighlight lang="bash"> | |||

dnf install anvil-striker | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Updating Subscription Management repositories. | |||

Last metadata expiration check: 0:07:31 ago on Fri 28 Jul 2023 06:00:20 PM EDT. | |||

Dependencies resolved. | |||

-================================================================================================================================= | |||

Package Arch Version Repository Size | |||

-================================================================================================================================= | |||

Installing: | |||

anvil-striker noarch 2.90-1.2704.25b460.el8 anvil-community-rhel-8 3.7 M | |||

Installing dependencies: | |||

GConf2 x86_64 3.2.6-22.el8 rhel-8-for-x86_64-appstream-rpms 1.0 M | |||

ModemManager-glib x86_64 1.20.2-1.el8 rhel-8-for-x86_64-baseos-rpms 338 k | |||

NetworkManager-initscripts-updown | |||

noarch 1:1.40.16-3.el8_8 rhel-8-for-x86_64-baseos-rpms 143 k | |||

SDL x86_64 1.2.15-39.el8 rhel-8-for-x86_64-appstream-rpms 218 k | |||

<...snip...> | |||

telnet x86_64 1:0.17-76.el8 rhel-8-for-x86_64-appstream-rpms 72 k | |||

vino x86_64 3.22.0-11.el8 rhel-8-for-x86_64-appstream-rpms 461 k | |||

xorg-x11-server-Xorg x86_64 1.20.11-15.el8 rhel-8-for-x86_64-appstream-rpms 1.5 M | |||

Enabling module streams: | |||

llvm-toolset rhel8 | |||

nodejs 10 | |||

perl-App-cpanminus 1.7044 | |||

perl-DBD-Pg 3.7 | |||

perl-DBI 1.641 | |||

perl-YAML 1.24 | |||

postgresql 10 | |||

python36 3.6 | |||

Transaction Summary | |||

-================================================================================================================================= | |||

Install 686 Packages | |||

Total download size: 601 M | |||

Installed size: 2.1 G | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Is this ok [y/N]: | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Downloading Packages: | |||

(1/686): htop-3.2.1-1.el8.x86_64.rpm 549 kB/s | 172 kB 00:00 | |||

(2/686): perl-Algorithm-C3-0.11-6.el8.noarch.rpm 457 kB/s | 29 kB 00:00 | |||

(3/686): perl-Class-C3-0.35-6.el8.noarch.rpm 781 kB/s | 37 kB 00:00 | |||

<...snip...> | |||

(684/686): webkit2gtk3-jsc-2.38.5-1.el8_8.5.x86_64.rpm 3.7 MB/s | 3.7 MB 00:00 | |||

(685/686): webkit2gtk3-2.38.5-1.el8_8.5.x86_64.rpm 7.7 MB/s | 21 MB 00:02 | |||

(686/686): firefox-102.13.0-2.el8_8.x86_64.rpm 5.5 MB/s | 109 MB 00:19 | |||

---------------------------------------------------------------------------------------------------------------------------------- | |||

Total 6.0 MB/s | 601 MB 01:40 | |||

Anvil Community Repository (rhel-8) 1.6 MB/s | 1.6 kB 00:00 | |||

Importing GPG key 0xD548C925: | |||

Userid : "Alteeve's Niche! Inc. repository <support@alteeve.ca>" | |||

Fingerprint: 3082 E979 518A 78DD 9569 CD2E 9D42 AA76 D548 C925 | |||

From : /etc/pki/rpm-gpg/RPM-GPG-KEY-Alteeve-Official | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Is this ok [y/N]: y | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Key imported successfully | |||

Running transaction check | |||

Transaction check succeeded. | |||

Running transaction test | |||

Transaction test succeeded. | |||

Running transaction | |||

Running scriptlet: npm-1:6.14.11-1.10.24.0.1.module+el8.3.0+10166+b07ac28e.x86_64 1/1 | |||

Preparing : 1/1 | |||

Installing : gdk-pixbuf2-2.36.12-5.el8.x86_64 1/686 | |||

Running scriptlet: gdk-pixbuf2-2.36.12-5.el8.x86_64 1/686 | |||

Installing : atk-2.28.1-1.el8.x86_64 2/686 | |||

Installing : libwayland-client-1.21.0-1.el8.x86_64 3/686 | |||

<...snip...> | |||

Verifying : bind-utils-32:9.11.36-8.el8_8.1.x86_64 684/686 | |||

Verifying : webkit2gtk3-jsc-2.38.5-1.el8_8.5.x86_64 685/686 | |||

Verifying : webkit2gtk3-2.38.5-1.el8_8.5.x86_64 686/686 | |||

Installed products updated. | |||

Installed: | |||

GConf2-3.2.6-22.el8.x86_64 | |||

ModemManager-glib-1.20.2-1.el8.x86_64 | |||

NetworkManager-initscripts-updown-1:1.40.16-3.el8_8.noarch | |||

<...snip...> | |||

yum-utils-4.0.21-19.el8_8.noarch | |||

zenity-3.28.1-2.el8.x86_64 | |||

zlib-devel-1.2.11-21.el8_7.x86_64 | |||

Complete! | |||

</syntaxhighlight> | |||

Done! | |||
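To confirm which role package ended up on a machine, the role-to-RPM mapping from the start of this section can be sketched as a lookup plus an <span class="code">rpm -q</span> query. The role names (<span class="code">striker</span>, <span class="code">node</span>, <span class="code">dr</span>) and function names are illustrative assumptions; only the three package names come from this tutorial.

<syntaxhighlight lang="bash">
# Sketch only: role names and helper names are hypothetical.

# Print the RPM that sets a machine's role.
anvil_package_for_role() {
    case "$1" in
        striker) echo "anvil-striker" ;;
        node)    echo "anvil-node" ;;
        dr)      echo "anvil-dr" ;;
        *)       echo "unknown role: $1" >&2; return 1 ;;
    esac
}

# Succeeds, and prints the installed version, when the role's RPM is present.
role_package_installed() {
    local pkg
    pkg="$(anvil_package_for_role "$1")" || return 1
    rpm -q "$pkg"
}
</syntaxhighlight>

For example, <span class="code">role_package_installed striker</span> prints the installed <span class="code">anvil-striker</span> version on a Striker dashboard, and fails on a machine where that RPM is not installed.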

==== Setting the Anvil! Password ==== | |||

{{note|1=This step is required on Striker dashboards only. There will be a chance to change the password on the other machines later.}} | |||

On Striker dashboards, you need to set the initial password. Generally this will be the same as what you set for the <span class="code">root</span> user during the OS install earlier. It doesn't have to be, of course, but if you use a different password in this step, note that the <span class="code">root</span> user password will change to the new password. | |||

To update the password, run the <span class="code">anvil-change-password</span> tool. | |||

<syntaxhighlight lang="bash"> | |||

anvil-change-password --new-password "super secret password" | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

About to update the local passwords (shell users, database and web interface). | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Proceed? [y/N] y | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Updating the Striker user: [admin] password... Set-Cookie:anvil_user_uuid=; expires=-1d; | |||

Set-Cookie:anvil_user_hash=; expires=-1d; | |||

Done. | |||

Updating the database user: [postgres] password... Done. | |||

Updating the database user: [admin] password... Done. | |||

Updating the local config file: [super secret password] database password... Done. | |||

Updating the shell user: [admin] password... Done. | |||

Updating the shell user: [root] password... Done. | |||

Finished! | |||

NOTE: You must update the password of any other system using this host's | |||

database manually! | |||

</syntaxhighlight> | |||

This is the same tool you will use later on if you ever want to change the password for the Striker Dashboard, or for any Anvil! node (using the <span class="code">--anvil <name></span> switch). | |||

Done! | |||

=== Anvil! Subnode; Installing anvil-node === | |||

<syntaxhighlight lang="bash"> | |||

dnf install anvil-node

</syntaxhighlight>

<syntaxhighlight lang="text">

Updating Subscription Management repositories.

Last metadata expiration check: 0:00:47 ago on Fri 28 Jul 2023 06:10:29 PM EDT. | |||

Dependencies resolved. | |||

-================================================================================================================================= | |||

Package Arch Version Repository Size | |||

-================================================================================================================================= | |||

Installing: | |||

anvil-node noarch 2.90-1.2704.25b460.el8 anvil-community-rhel-8 25 k | |||

Installing dependencies: | |||

NetworkManager-initscripts-updown | |||

noarch 1:1.40.16-3.el8_8 rhel-8-for-x86_64-baseos-rpms 143 k | |||

akmod-drbd x86_64 9.2.4-1.el8 anvil-community-rhel-8 1.3 M | |||

akmods noarch 0.5.7-8.el8 anvil-community-rhel-8 34 k | |||

alsa-lib x86_64 1.2.8-2.el8 rhel-8-for-x86_64-appstream-rpms 497 k | |||

<...snip...> | |||

rubygem-io-console x86_64 0.4.6-110.module+el8.6.0+15956+aa803fc1 rhel-8-for-x86_64-appstream-rpms 68 k | |||

rubygem-rdoc noarch 6.0.1.1-110.module+el8.6.0+15956+aa803fc1 rhel-8-for-x86_64-appstream-rpms 457 k | |||

telnet x86_64 1:0.17-76.el8 rhel-8-for-x86_64-appstream-rpms 72 k | |||

Enabling module streams: | |||

idm client | |||

llvm-toolset rhel8 | |||

perl-App-cpanminus 1.7044 | |||

perl-DBD-Pg 3.7 | |||

perl-DBI 1.641 | |||

perl-YAML 1.24 | |||

postgresql 10 | |||

python36 3.6 | |||

ruby 2.5 | |||

Transaction Summary | |||

-================================================================================================================================ | |||

Install 489 Packages | |||

Total download size: 330 M | |||

Installed size: 1.3 G | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Is this ok [y/N]: | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Downloading Packages: | |||

(1/489): akmods-0.5.7-8.el8.noarch.rpm 115 kB/s | 34 kB 00:00 | |||

(2/489): anvil-node-2.90-1.2704.25b460.el8.noarch.rpm 602 kB/s | 25 kB 00:00 | |||

(3/489): akmod-drbd-9.2.4-1.el8.x86_64.rpm 2.6 MB/s | 1.3 MB 00:00 | |||

<...snip...> | |||

(487/489): perl-Devel-StackTrace-2.03-2.el8.noarch.rpm 180 kB/s | 35 kB 00:00 | |||

(488/489): perl-Specio-0.42-2.el8.noarch.rpm 410 kB/s | 159 kB 00:00 | |||

(489/489): perl-File-DesktopEntry-0.22-7.el8.noarch.rpm 137 kB/s | 27 kB 00:00 | |||

---------------------------------------------------------------------------------------------------------------------------------- | |||

Total 5.7 MB/s | 330 MB 00:57 | |||

Anvil Community Repository (rhel-8) 1.6 MB/s | 1.6 kB 00:00 | |||

Importing GPG key 0xD548C925: | |||

Userid : "Alteeve's Niche! Inc. repository <support@alteeve.ca>" | |||

Fingerprint: 3082 E979 518A 78DD 9569 CD2E 9D42 AA76 D548 C925 | |||

From : /etc/pki/rpm-gpg/RPM-GPG-KEY-Alteeve-Official | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Is this ok [y/N]: | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Key imported successfully | |||

Running transaction check | |||

Transaction check succeeded. | |||

Running transaction test | |||

Transaction test succeeded. | |||

Running transaction | |||

Preparing : 1/1 | |||

Installing : ruby-libs-2.5.9-110.module+el8.6.0+15956+aa803fc1.x86_64 1/489 | |||

Installing : telnet-1:0.17-76.el8.x86_64 2/489 | |||

Installing : rubygem-io-console-0.4.6-110.module+el8.6.0+15956+aa803fc1.x86_64 3/489 | |||

<...snip...> | |||

Verifying : perl-Devel-StackTrace-1:2.03-2.el8.noarch 487/489 | |||

Verifying : perl-namespace-clean-0.27-7.el8.noarch 488/489 | |||

Verifying : perl-File-DesktopEntry-0.22-7.el8.noarch 489/489 | |||

Installed products updated. | |||

Installed: | |||

NetworkManager-initscripts-updown-1:1.40.16-3.el8_8.noarch | |||

abattis-cantarell-fonts-0.0.25-6.el8.noarch | |||

akmod-drbd-9.2.4-1.el8.x86_64 | |||

<...snip...> | |||

yum-utils-4.0.21-19.el8_8.noarch | |||

zlib-devel-1.2.11-21.el8_7.x86_64 | |||

zstd-1.4.4-1.el8.x86_64 | |||

Complete! | |||

</syntaxhighlight> | |||

=== Disaster Recovery Host; Installing anvil-dr === | |||

<syntaxhighlight lang="bash"> | |||

dnf install anvil-dr

</syntaxhighlight>

<syntaxhighlight lang="text"> | |||

Updating Subscription Management repositories. | |||

Last metadata expiration check: 0:00:46 ago on Fri 28 Jul 2023 06:10:37 PM EDT. | |||

Dependencies resolved. | |||

-================================================================================================================================= | |||

Package Arch Version Repository Size | |||

-================================================================================================================================= | |||

Installing: | |||

anvil-dr noarch 2.90-1.2704.25b460.el8 anvil-community-rhel-8 8.5 k | |||

Installing dependencies: | |||

NetworkManager-initscripts-updown | |||

noarch 1:1.40.16-3.el8_8 rhel-8-for-x86_64-baseos-rpms 143 k | |||

SDL x86_64 1.2.15-39.el8 rhel-8-for-x86_64-appstream-rpms 218 k | |||

akmod-drbd x86_64 9.2.4-1.el8 anvil-community-rhel-8 1.3 M | |||

akmods noarch 0.5.7-8.el8 anvil-community-rhel-8 34 k | |||

<...snip...> | |||

geolite2-country noarch 20180605-1.el8 rhel-8-for-x86_64-appstream-rpms 1.0 M | |||

openssl-devel x86_64 1:1.1.1k-9.el8_7 rhel-8-for-x86_64-baseos-rpms 2.3 M | |||

telnet x86_64 1:0.17-76.el8 rhel-8-for-x86_64-appstream-rpms 72 k | |||

Enabling module streams: | |||

idm client | |||

llvm-toolset rhel8 | |||

perl-App-cpanminus 1.7044 | |||

perl-DBD-Pg 3.7 | |||

perl-DBI 1.641 | |||

perl-YAML 1.24 | |||

postgresql 10 | |||

python36 3.6 | |||

Transaction Summary | |||

-================================================================================================================================= | |||

Install 459 Packages | |||

Total download size: 315 M | |||

Installed size: 1.3 G | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Is this ok [y/N]: | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Downloading Packages: | |||

(1/459): akmods-0.5.7-8.el8.noarch.rpm 123 kB/s | 34 kB 00:00 | |||

(2/459): anvil-dr-2.90-1.2704.25b460.el8.noarch.rpm 229 kB/s | 8.5 kB 00:00 | |||

(3/459): anvil-core-2.90-1.2704.25b460.el8.noarch.rpm 1.9 MB/s | 1.0 MB 00:00 | |||

<...snip...> | |||

(457/459): perl-Capture-Tiny-0.46-4.el8.noarch.rpm 107 kB/s | 39 kB 00:00 | |||

(458/459): perl-Devel-StackTrace-2.03-2.el8.noarch.rpm 125 kB/s | 35 kB 00:00 | |||

(459/459): perl-File-DesktopEntry-0.22-7.el8.noarch.rpm 277 kB/s | 27 kB 00:00 | |||

---------------------------------------------------------------------------------------------------------------------------------- | |||

Total 6.4 MB/s | 315 MB 00:48 | |||

Anvil Community Repository (rhel-8) 1.6 MB/s | 1.6 kB 00:00 | |||

Importing GPG key 0xD548C925: | |||

Userid : "Alteeve's Niche! Inc. repository <support@alteeve.ca>" | |||

Fingerprint: 3082 E979 518A 78DD 9569 CD2E 9D42 AA76 D548 C925 | |||

From : /etc/pki/rpm-gpg/RPM-GPG-KEY-Alteeve-Official | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Is this ok [y/N]: | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Key imported successfully | |||

Running transaction check | |||

Transaction check succeeded. | |||

Running transaction test | |||

Transaction test succeeded. | |||

Running transaction | |||

Preparing : 1/1 | |||

Installing : telnet-1:0.17-76.el8.x86_64 1/459 | |||

Installing : nspr-4.34.0-3.el8_6.x86_64 2/459 | |||

Running scriptlet: nspr-4.34.0-3.el8_6.x86_64 2/459 | |||

<...snip...> | |||

Verifying : perl-Devel-StackTrace-1:2.03-2.el8.noarch 457/459 | |||

Verifying : perl-namespace-clean-0.27-7.el8.noarch 458/459 | |||

Verifying : perl-File-DesktopEntry-0.22-7.el8.noarch 459/459 | |||

Installed products updated. | |||

Installed: | |||

NetworkManager-initscripts-updown-1:1.40.16-3.el8_8.noarch | |||

SDL-1.2.15-39.el8.x86_64 | |||

abattis-cantarell-fonts-0.0.25-6.el8.noarch | |||

<...snip...> | |||

yum-utils-4.0.21-19.el8_8.noarch | |||

zlib-devel-1.2.11-21.el8_7.x86_64 | |||

zstd-1.4.4-1.el8.x86_64 | |||

Complete! | |||

</syntaxhighlight> | |||

= Configuring the Striker Dashboards =

{{note|1=The <span class="code">admin</span> user will automatically be created by <span class="code">anvil-daemon</span> after <span class="code">anvil-striker</span> is installed. You don't need to create this account manually.}} | |||

There are no default passwords on Anvil! systems. So the first step is to set the password for the <span class="code">admin</span> user. | |||

<syntaxhighlight lang="bash"> | |||

passwd admin | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

Changing password for user admin. | |||

</syntaxhighlight> | |||

Enter the password you want to use twice. | |||

<syntaxhighlight lang="text"> | |||

New password: | |||

Retype new password: | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="text"> | |||

passwd: all authentication tokens updated successfully. | |||

</syntaxhighlight> | |||

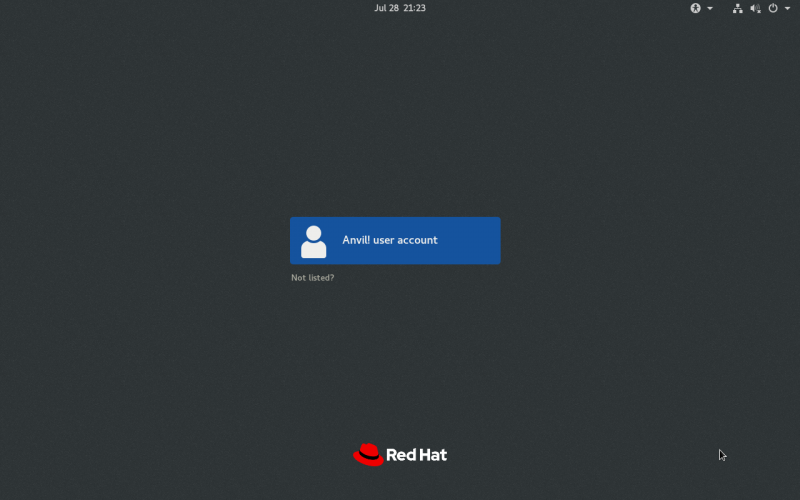

Now we can switch to the graphical interface! | |||

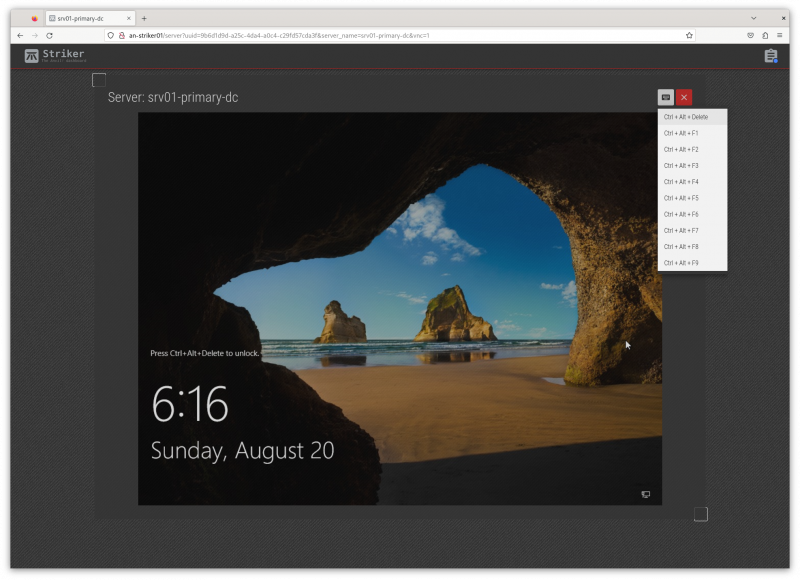

== Striker Dashboard == | |||

{{note|1=If you don't see the graphical login, either reboot the machine, or run <span class="code">systemctl isolate graphical.target</span>.}} | |||

[[image:an-striker01-rhel8-m3-initial-setup-01.png|thumb|center|800px|Striker graphical login.]] | |||

Log in to the ''Anvil! user account'' with the password you set above. | |||

[[image:an-striker01-rhel8-m3-initial-setup-02.png|thumb|center|800px|Activities menu and Firefox icon.]] | |||

Click on ''Activities'' in the top-left corner, and then click on the Firefox icon to open the browser.

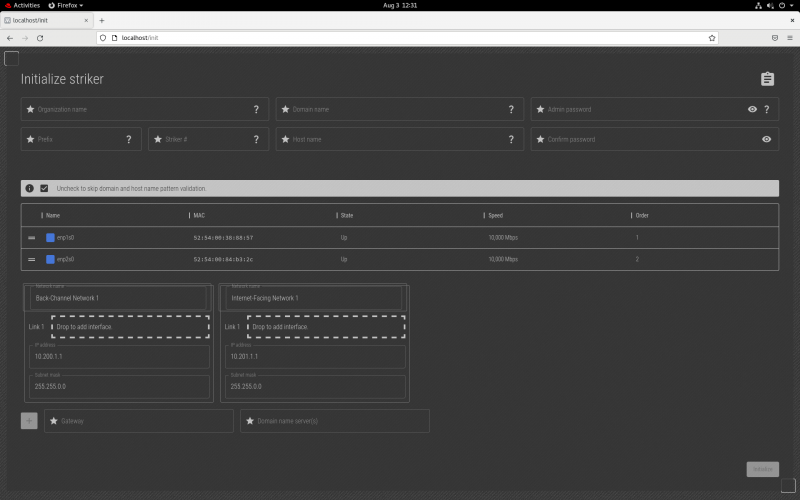

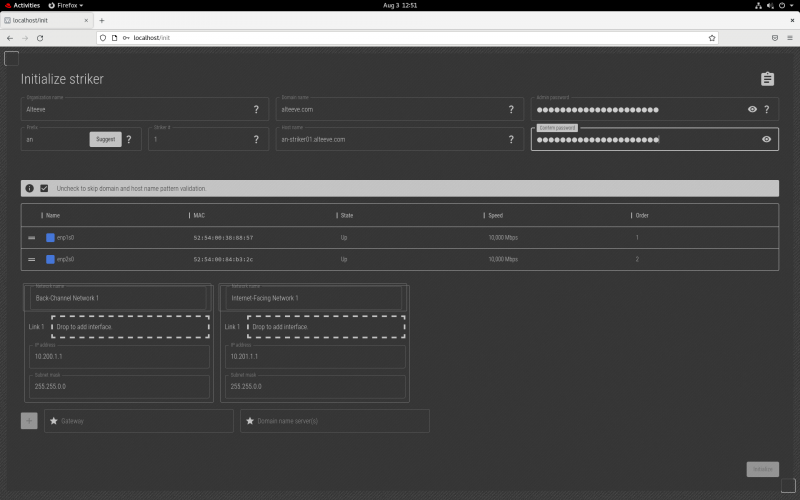

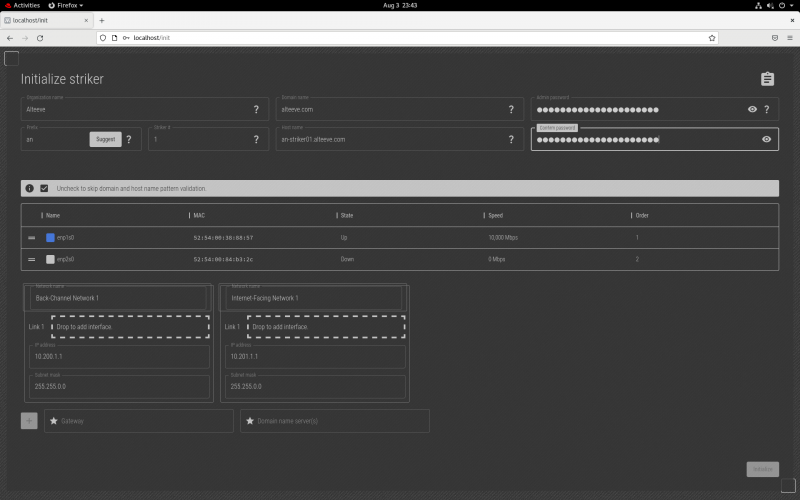

[[image:an-striker01-rhel8-m3-initial-setup-03.png|thumb|center|800px|Connecting to ''localhost'' to start the configuration.]] | |||

In Firefox, enter the URL <span class="code">http://localhost</span>. This will load the initial Striker configuration page where we'll start configuring the dashboard. | |||

[[image:an-striker01-rhel8-m3-initial-setup-04.png|thumb|center|800px|Filling out the configuration form.]] | |||

Fields; | |||

{| class="wikitable" | |||

!style="white-space:nowrap; text-align:left;"|Organization name | |||

|This is a descriptive name for the given Anvil! cluster. Generally this is a company, organization or site name. | |||

|- | |||

!style="white-space:nowrap; text-align:left;"|Domain name | |||

|This is the domain name that will be used when setting host names in the cluster. Generally this is the domain of the organization that uses the Anvil! cluster.

|- | |||

!style="white-space:nowrap; text-align:left;"|Prefix | |||

|This is a short, generally 2~5 characters, descriptive prefix for a given Anvil! cluster. It is used as the leading part of a machine's short host name. | |||

|- | |||

!style="white-space:nowrap; text-align:left;"|Striker # | |||

|Most Anvil! clusters have two Striker dashboards. This sets the sequence number, so the first is '1' and the second is '2'. If you have multiple Anvil! clusters, the first Striker on the second Anvil! cluster would be '3', etc. | |||

|- | |||

!style="white-space:nowrap; text-align:left;"|Host name | |||

|This is the host name for this striker. Generally it's in the format of <prefix>-striker0<sequence>.<domain>. | |||

|- | |||

!style="white-space:nowrap; text-align:left;"|Admin Password | |||

|This is the cluster's password, and should generally be the same password you set for the 'admin' user.

|- | |||

!style="white-space:nowrap; text-align:left;"|Confirm Password | |||

|Re-enter the password to confirm that the password you entered doesn't have a typo.

|}

{{note|1=It is strongly recommended that you keep the "Organization Name", "Domain Name", "Prefix" and "Admin Password" the same on Strikers that will be peered together.}}
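As a rough illustration of the host name convention described in the table above, the following sketch builds a Striker host name from the prefix, sequence number and domain. The function name <span class="code">striker_hostname</span> is hypothetical and is not part of the Anvil! tools; the example values are the ones used elsewhere in this tutorial.

```bash
#!/usr/bin/env bash
# Hypothetical helper, not part of the Anvil! tools.
# Builds <prefix>-striker0<sequence>.<domain>, zero-padding the sequence
# number to two digits. The 10# prefix forces base 10 so that an input
# such as "08" is not misread as an invalid octal number.
striker_hostname() {
    local prefix="$1" sequence="$2" domain="$3"
    printf '%s-striker%02d.%s\n' "$prefix" "$((10#$sequence))" "$domain"
}

striker_hostname an 1 alteeve.com
striker_hostname an 2 alteeve.com
```

Keeping the prefix and domain identical on both Strikers, and changing only the sequence number, gives matching host names for the pair that will be peered.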

=== Network Configuration === | |||

One of the "trickier" parts of configuring an Anvil! machine is figuring out which physical network interfaces are to be used for which job.

There are four networks used in Anvil! clusters. If you're not familiar with the networks, you may want to read this first;

* [[Anvil! Networking]] | |||

The middle section shows the existing network interfaces, <span class="code">enp1s0</span> and <span class="code">enp2s0</span> in the example above. In the screenshot above, both interfaces show their <span class="code">State</span> as "<span class="code">On</span>". This shows that both interfaces are physically connected to a network switch. | |||

Which physical interface you want to use for a given task will be up to you, but the article below explains how Alteeve does it, and why.

* [[Network Interface Planning]] | |||

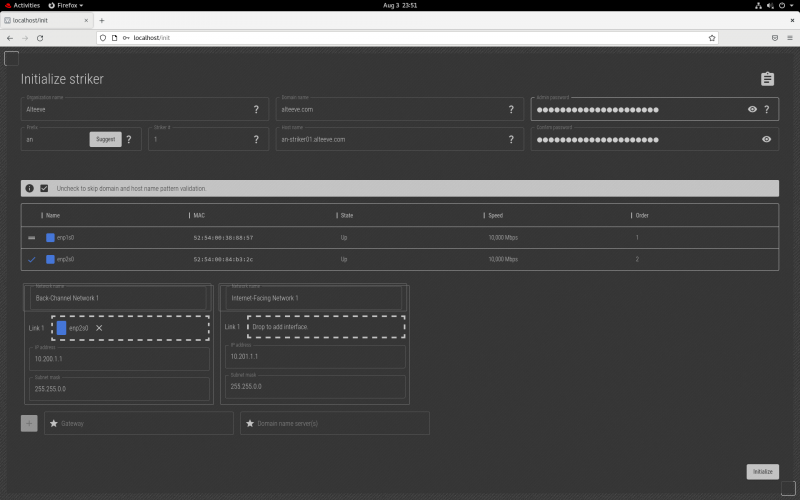

In this example, the Striker has two interfaces, one plugged into the [[BCN]], and one plugged into the [[IFN]]. To find which one is which, we'll unplug the cable going to the interface connected to the BCN, and we'll watch to see which interface state changes to "<span class="code">Down</span>". | |||

[[image:an-striker01-rhel8-m3-initial-setup-05.png|thumb|center|800px|BCN link down, <span class="code">enp2s0</span> showing as "<span class="code">Down</span>".]] | |||

When we unplug the network cable going to the BCN interface, we can see that the <span class="code">enp2s0</span> interface has changed to show that it is "<span class="code">Down</span>". Now that we know which interface is used to connect to the BCN, we can reconnect the network cable. | |||
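If you have shell access to the Striker, the same link-state change can also be observed from the command line. This is a minimal sketch that reads each interface's <span class="code">operstate</span> from sysfs; the function name <span class="code">list_link_states</span> and its optional directory argument are illustrative assumptions, not part of the Anvil! tools.

```bash
#!/usr/bin/env bash
# Illustrative helper, not part of the Anvil! tools.
# Prints "<interface> <state>" for every network interface. The kernel
# exposes link state in /sys/class/net/<interface>/operstate; an
# interface typically reports "down" while its cable is unplugged.
list_link_states() {
    local sysfs_net="${1:-/sys/class/net}" dir iface state
    for dir in "$sysfs_net"/*/; do
        [ -r "${dir}operstate" ] || continue
        iface="$(basename "$dir")"
        state="$(cat "${dir}operstate")"
        printf '%s %s\n' "$iface" "$state"
    done
}

list_link_states
```

Run it once with all cables connected and again with one cable unplugged; the interface whose state changed is the one attached to that cable.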

[[image:an-striker01-rhel8-m3-initial-setup-06.png|thumb|center|800px|Interface <span class="code">enp2s0</span> configured for use as the BCN 1 link.]] | |||

To configure the <span class="code">enp2s0</span> interface for use as the "''Back-Channel Network 1''" interface, click or press on the lines to the left of the interface name, and drag it into the <span class="code">Link 1</span> box.

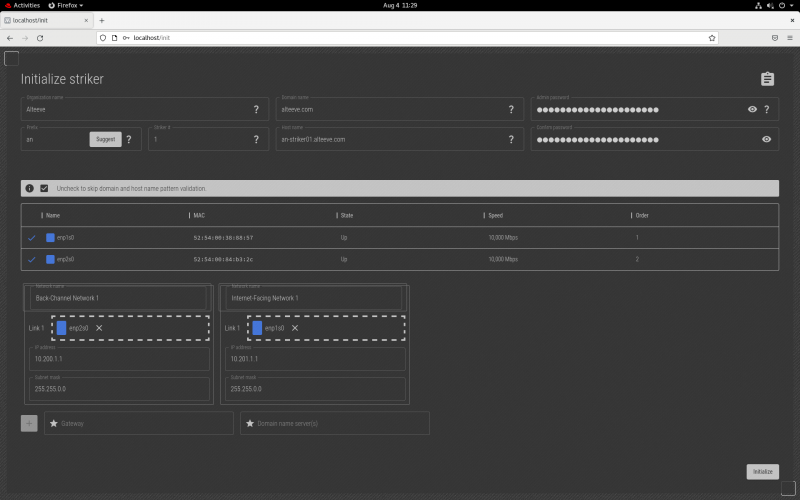

[[image:an-striker01-rhel8-m3-initial-setup-07.png|thumb|center|800px|Interface <span class="code">enp1s0</span> configured for use as the IFN 1 link.]] | |||

This Striker dashboard only has two network interfaces, so <span class="code">enp1s0</span> must be the IFN interface.

{{note|1=If you want redundancy on the Striker dashboard, you can use four interfaces, and you will see two links per network. Unplug the cables connected to the first switch, and they become '''Link 1'''. The interface for the second switch will go to '''Link 2'''. Configuring bonds this way will be covered when we configure subnodes.}} | |||

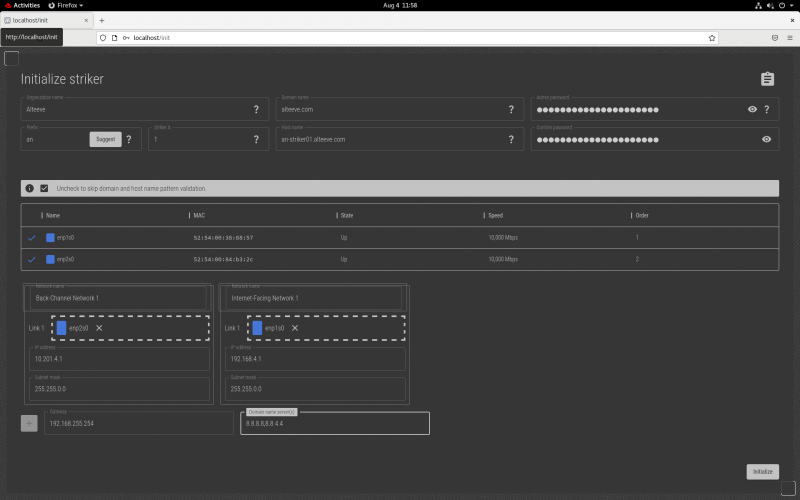

[[image:an-striker01-rhel8-m3-initial-setup-08.png|thumb|center|800px|Assigning IPs, default gateway and DNS servers.]] | |||

Once you're sure that the IPs you want are set, click on '''Initialize''' to send the new configuration. | |||

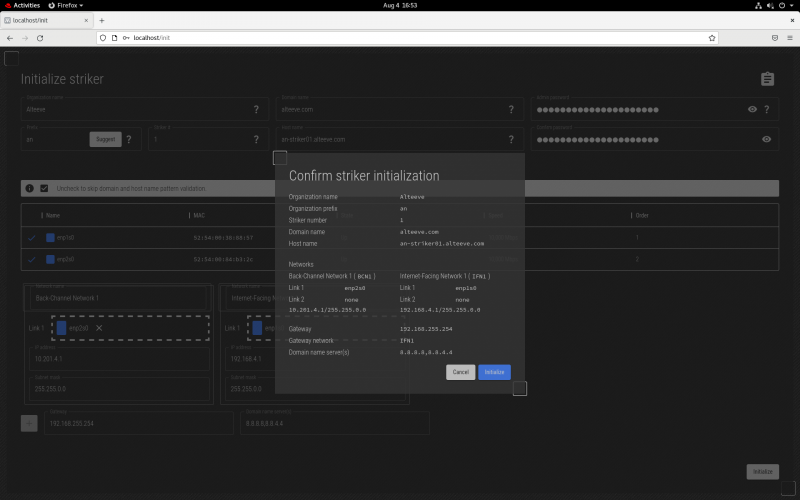

[[image:an-striker01-rhel8-m3-initial-setup-09.png|thumb|center|800px|New configuration confirmation page.]] | |||

If you're happy with the values set, click '''Initialize''' again, and the new configuration will be sent.

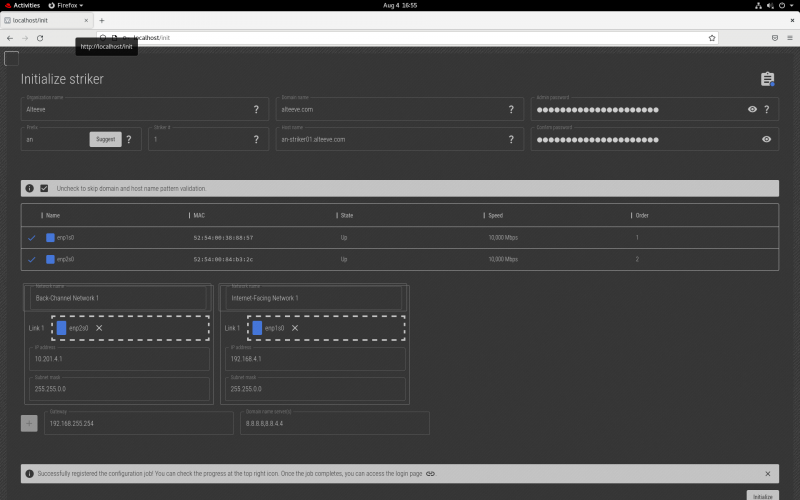

[[image:an-striker01-rhel8-m3-initial-setup-10.png|thumb|center|800px|New configuration job has been registered.]] | |||

After a moment, the Striker will reboot! | |||

=== First Login === | |||

After the dashboard reboots, log back in as you did before and restart Firefox. | |||

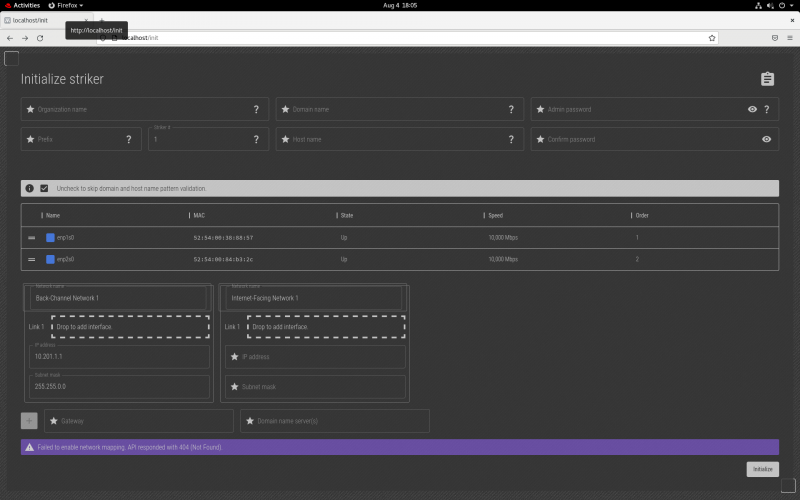

{{note|1=If the browser tries to reload the last page, it will go back to the configuration page (note the '''Failed to enable the network mapping API''' message and the url being <span class="code">http://localhost/init</span>). If this happens, ignore the page. The data will be stale and inaccurate.}}

[[image:an-striker01-rhel8-m3-initial-setup-11.png|thumb|center|800px|Example of the reloaded, stale, cached config page, '''ignore this tab'''.]] | |||

Example of the old config page being loaded. Ignore this and go to the base url <span class="code">http://localhost</span>. | |||

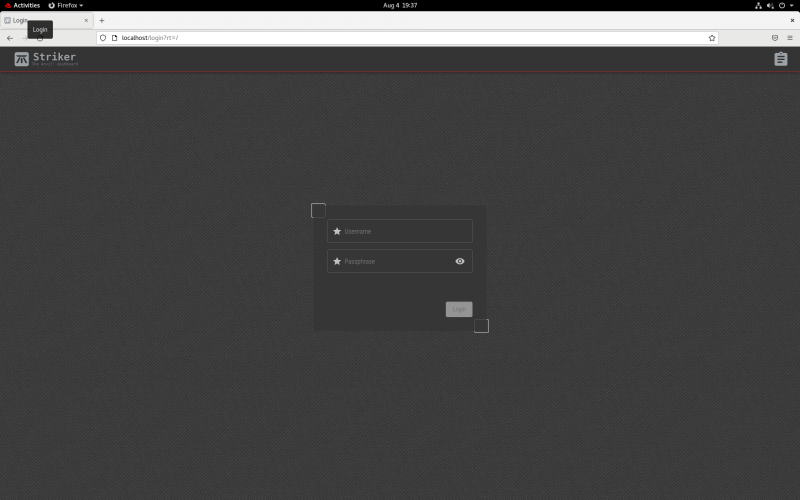

[[image:an-striker01-rhel8-m3-initial-setup-12.png|thumb|center|800px|The Striker Dashboard login page.]] | |||

Log in with the user name <span class="code">admin</span> and use the password you set earlier.

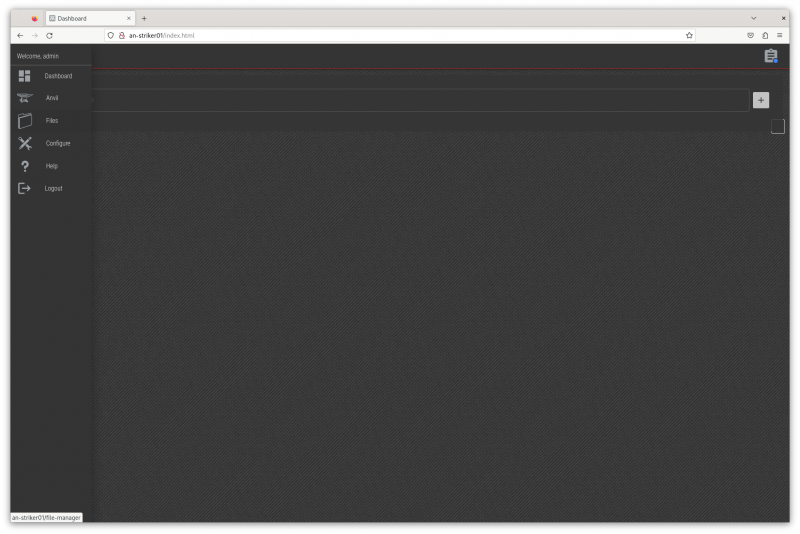

[[image:an-striker01-rhel8-m3-initial-setup-13.png|thumb|center|800px|The Striker Dashboard main page.]] | |||

The main page looks really spartan at first, but later this is where you'll find all the servers hosted on your Anvil! node(s).

= Peering Striker Dashboards = | |||

{{note|1=For redundancy, we recommend having two Striker dashboards. Repeat the steps above to configure the other dashboard. Once both are configured, we will peer them together. Once peered, you can use either dashboard; they will be kept in sync and one will always be redundant for the other.}}

With two configured Strikers ready, let's peer them. For this example, we'll be working on <span class="code">an-striker01</span>, and peering with <span class="code">an-striker02</span>, which has the [[BCN]] IP address <span class="code">10.201.4.2</span>.

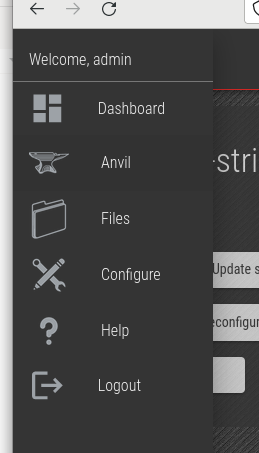

[[image:an-striker01-rhel8-m3-peering-01.png|thumb|center|516px|Clicking the '''Striker''' logo to open the menu.]] | |||

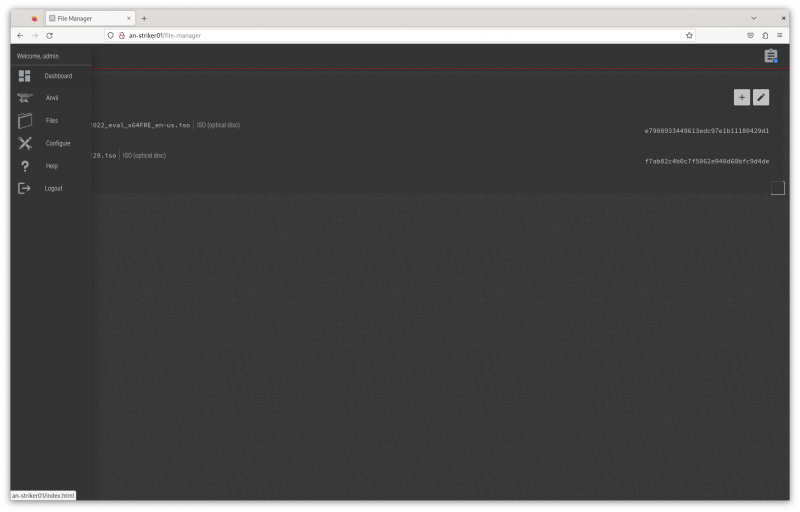

Click on the ''Striker'' logo at the top left, which opens the Striker menu. | |||

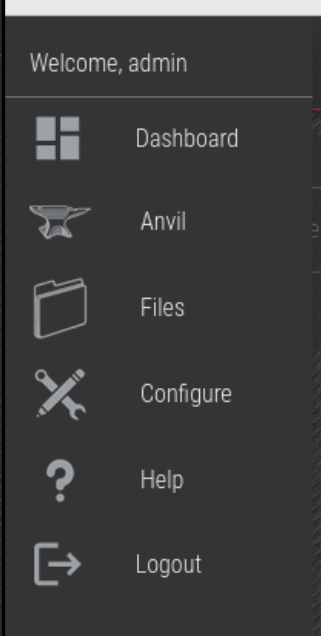

[[image:an-striker01-rhel8-m3-peering-02.png|thumb|center|321px|Click on '''Configure'''.]] | |||

Click on the ''Configure'' menu item. | |||

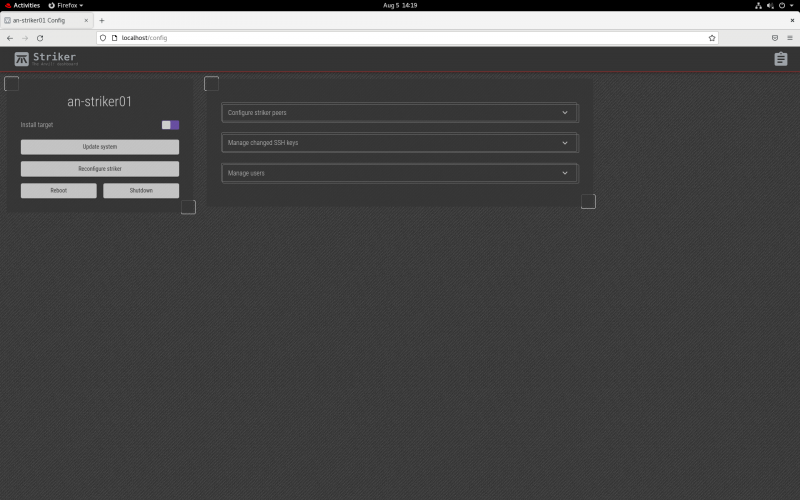

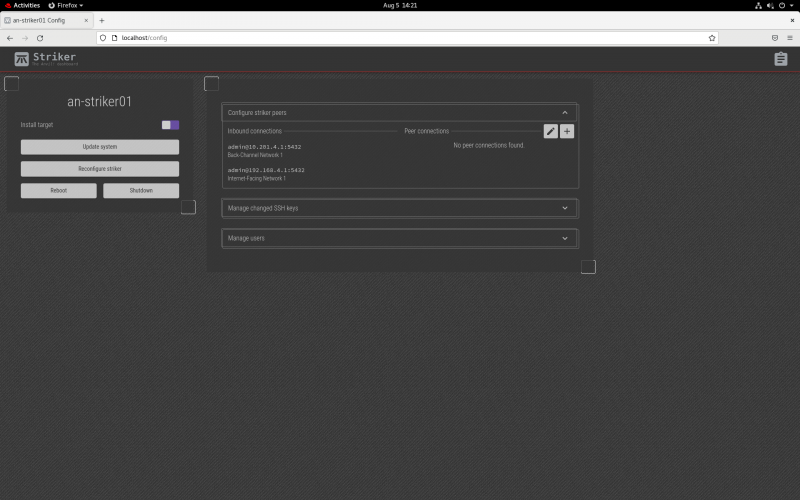

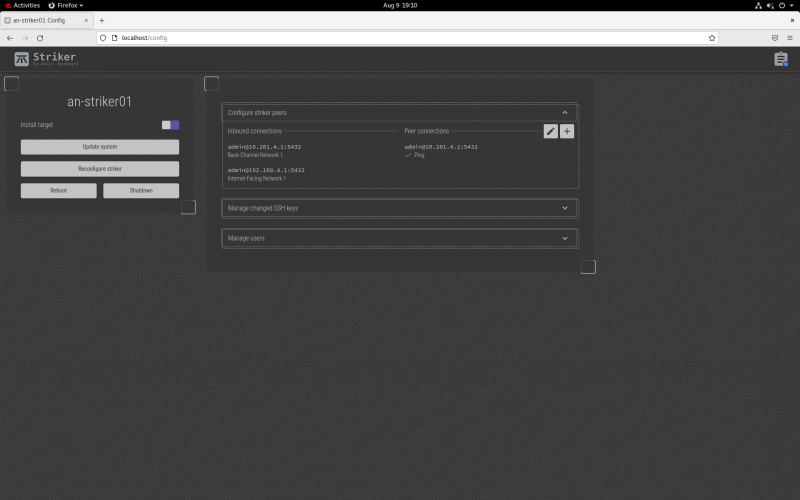

[[image:an-striker01-rhel8-m3-peering-03.png|thumb|center|800px|The Striker configuration menu.]] | |||

Here we see the menu for configuring (or reconfiguring) the Striker dashboard. | |||

[[image:an-striker01-rhel8-m3-peering-04.png|thumb|center|800px|Expanded '''Configure Striker Peers''' menu.]] | |||

Click on the title of '''Configure Striker Peers''' to expand the peer menu. | |||

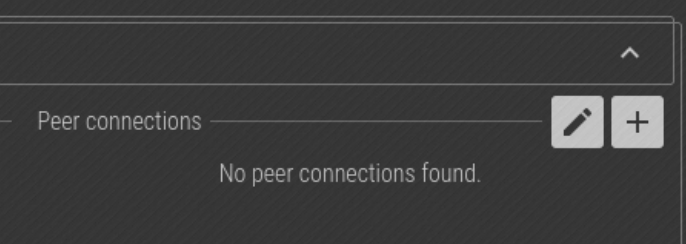

[[image:an-striker01-rhel8-m3-peering-05.png|thumb|center|686px|The '''+''' icon to add a peer.]] | |||

Click on the "+" icon on the top-right to open the new peer menu. | |||

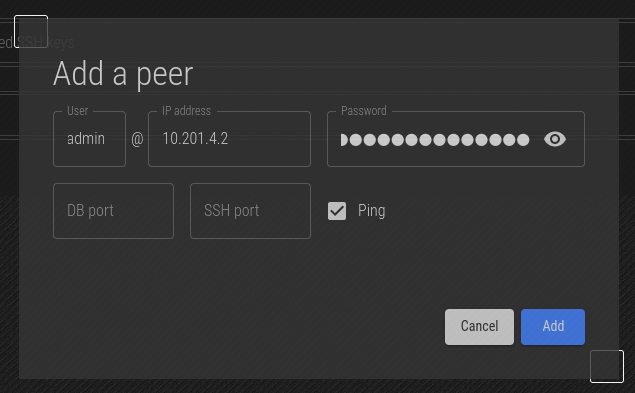

[[image:an-striker01-rhel8-m3-peering-06.png|thumb|center|635px|Peer striker form.]] | |||

In almost all cases, the Striker being peered is on the same BCN as the other. As such, we'll connect as the <span class="code">admin</span> user to the peer striker's BCN IP address, <span class="code">10.201.4.2</span> in this example. We can leave the (postgres) database and ssh ports blank (which then default to <span class="code">5432</span> and <span class="code">22</span>, respectively). | |||
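Before submitting the form, it can be useful to confirm that the peer is reachable on both ports. The following is a sketch only, using bash's built-in <span class="code">/dev/tcp</span> redirection; the function names <span class="code">port_open</span> and <span class="code">check_peer</span> are hypothetical and are not part of the Anvil! tools.

```bash
#!/usr/bin/env bash
# Illustrative pre-check, not part of the Anvil! tools.
# Returns 0 if a TCP connection to host:port succeeds within 3 seconds.
port_open() {
    local host="$1" port="$2"
    timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Report on the default PostgreSQL (5432) and ssh (22) ports of a peer.
check_peer() {
    local peer="$1" port
    for port in 5432 22; do
        if port_open "$peer" "$port"; then
            echo "${peer}:${port} reachable"
        else
            echo "${peer}:${port} NOT reachable"
        fi
    done
}

# Example, using the peer address from this tutorial:
# check_peer 10.201.4.2
```

If either port is reported as not reachable, check cabling and firewall settings on the peer before adding it.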

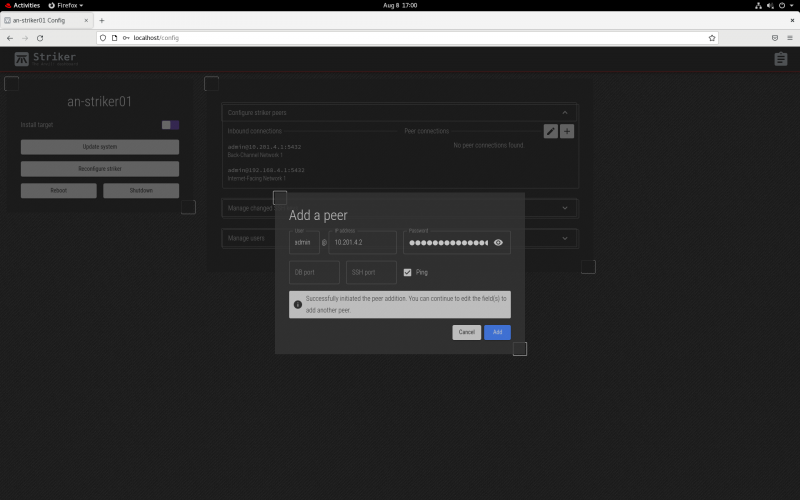

[[image:an-striker01-rhel8-m3-peering-07.png|thumb|center|800px|Save the job.]] | |||

Once filled out, click '''Add''' and the job to peer will be saved. Give it a minute or two, and the peering should be complete. | |||

[[image:an-striker01-rhel8-m3-peering-08.png|thumb|center|800px|The peer Striker is now displayed.]] | |||

After a couple minutes, you will see the peer striker appear in the list. At this point, the two Strikers now operate as one. Should one ever fail, the other can be used in its place. | |||

= Configuring the First Node = | |||

An Anvil! node is a pair of subnodes acting as one machine, providing full redundancy to hosted servers. | |||

The process of configuring a node is; | |||

1. Initialize each subnode | |||

2. Configure backing UPSes/PDUs | |||

3. Create an "Install Manifest" | |||

4. Run the Manifest | |||

== Initializing Subnodes == | |||

To initialize a node (or DR host), we need to enter its current IP address and current <span class="code">root</span> password. This is used to allow the Striker to log into it and update the subnode's configuration to use the databases on the Striker dashboards. Once this is done, all further interaction with the subnodes will be via the database.

[[image:an-striker01-rhel8-m3-initialize-subnode-01.png|thumb|center|259px|Striker Menu -> Anvil]] | |||

Click on the '''Striker''' logo to open the menu, and then click on '''Anvil'''. | |||

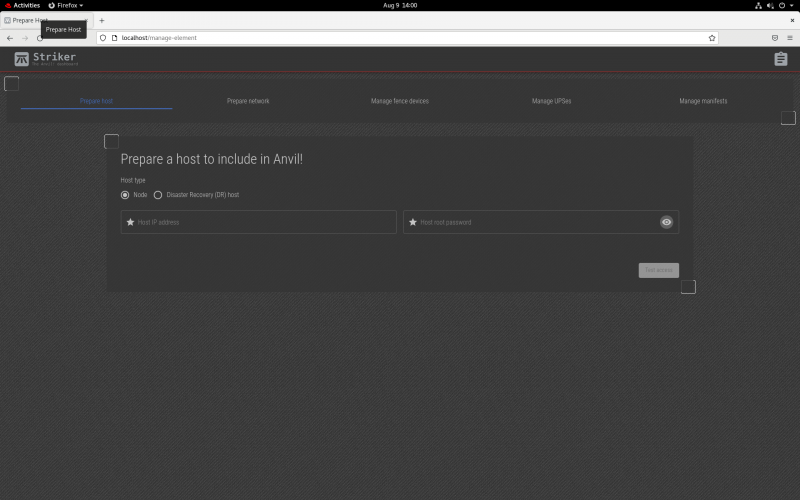

{{note|1=There are two options below, '''Node''' and '''Disaster Recovery (DR) Host'''. We're working on creating an Anvil! node, which is a matched pair of subnodes, so we'll select that. We'll look at DR hosts later.}} | |||

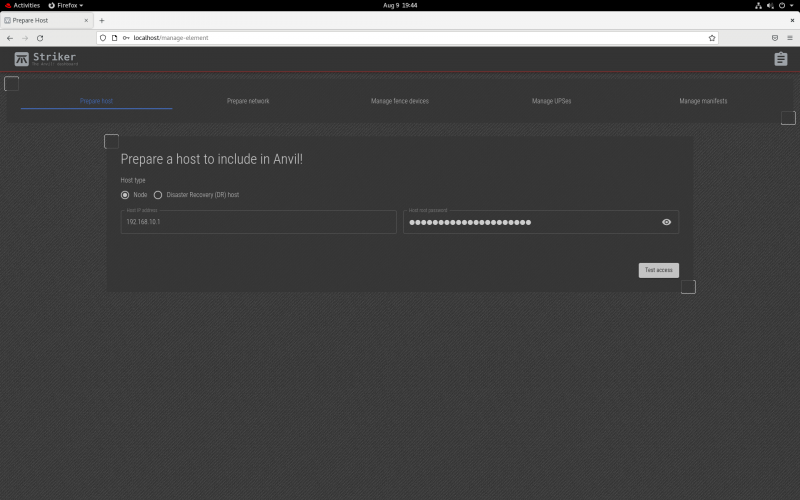

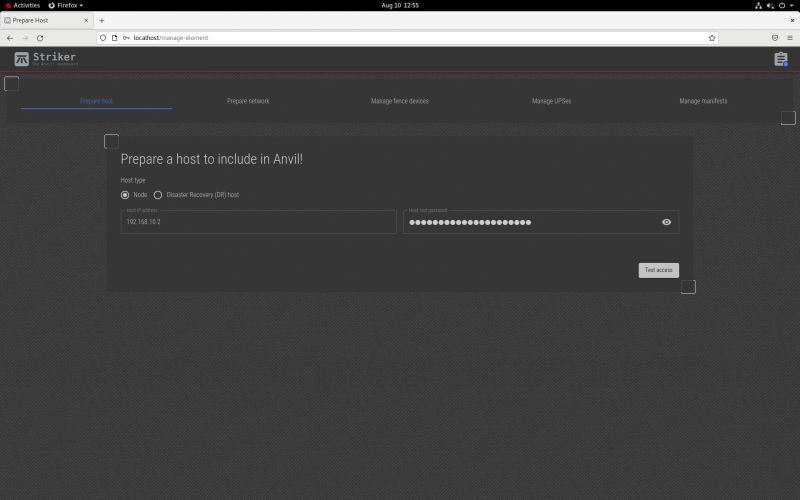

[[image:an-striker01-rhel8-m3-initialize-subnode-02.png|thumb|center|800px|"Prepare a host" menu.]] | |||

We're initializing a subnode, so click on '''Node'''. | |||

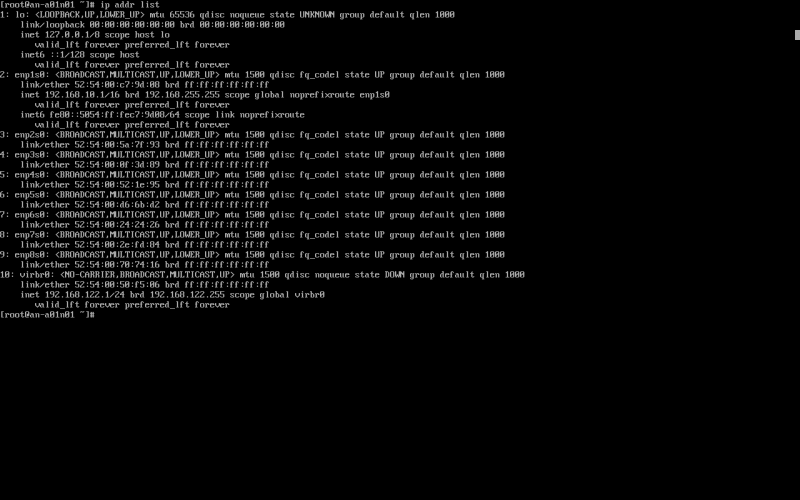

[[image:an-striker01-rhel8-m3-initialize-subnode-03.png|thumb|center|800px|Showing the IP assigned to the freshly installed subnode.]] | |||

The IP address is the one that you assigned during the initial install of the OS on the subnode. If you left it as DHCP, you can check to see what the IP address is using the <span class="code">ip addr list</span> command on the target subnode. In the example above, we see the IP is set to <span class="code">192.168.10.1</span>. | |||
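When a subnode has several interfaces, the full <span class="code">ip addr list</span> output can be long. As a convenience, this sketch filters the one-line-per-address form of the same command (<span class="code">ip -o -4 addr show</span>) down to interface names and IPv4 addresses. The function name <span class="code">ipv4_summary</span> is an illustrative assumption.

```bash
#!/usr/bin/env bash
# Illustrative filter, not part of the Anvil! tools.
# Reads `ip -o -4 addr show` output on stdin and prints
# "<interface> <address/prefix>" for each non-loopback IPv4 address.
# In that output, field 2 is the interface name, field 3 is the
# address family ("inet") and field 4 is the address with its prefix.
ipv4_summary() {
    awk '$3 == "inet" && $2 != "lo" { print $2, $4 }'
}

# On the target subnode:
# ip -o -4 addr show | ipv4_summary
```

The address printed for the connected interface is the one to enter in the '''Host IP Address''' field.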

[[image:an-striker01-rhel8-m3-initialize-subnode-04.png|thumb|center|800px|Preparing the subnode for initialization.]] | |||

Enter the IP address and password, and then click '''Test Access'''. | |||

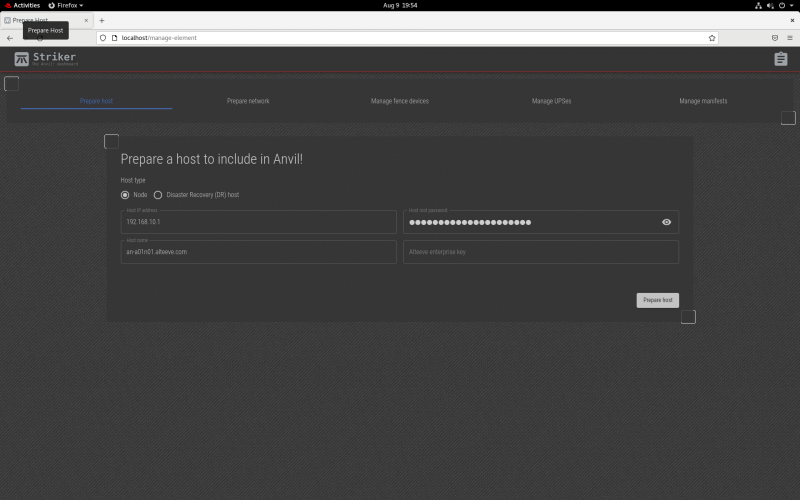

[[image:an-striker01-rhel8-m3-initialize-subnode-05.png|thumb|center|800px|Subnode access confirmed.]] | |||

In this example, the existing hostname is <span class="code">an-a01n01.alteeve.com</span>, which helps to confirm that we connected to node 1's first subnode. If you have an enterprise support key, enter it in this form. In our example, we'll be using the Alteeve community repo.

We're happy with this, so click on '''Prepare Host'''.

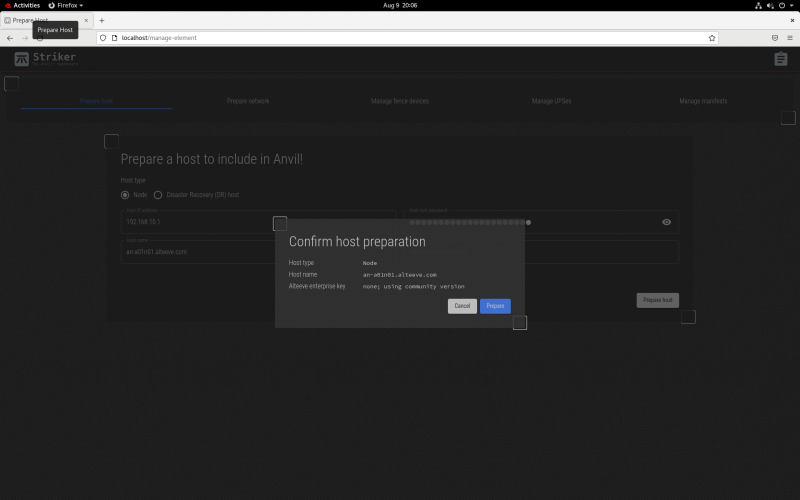

[[image:an-striker01-rhel8-m3-initialize-subnode-06.png|thumb|center|800px|Subnode initialization confirmation screen.]] | |||

The plan to initialize is presented. If you're happy, click on '''Prepare'''. The job will be saved, and you can then repeat until your subnodes are initialized.

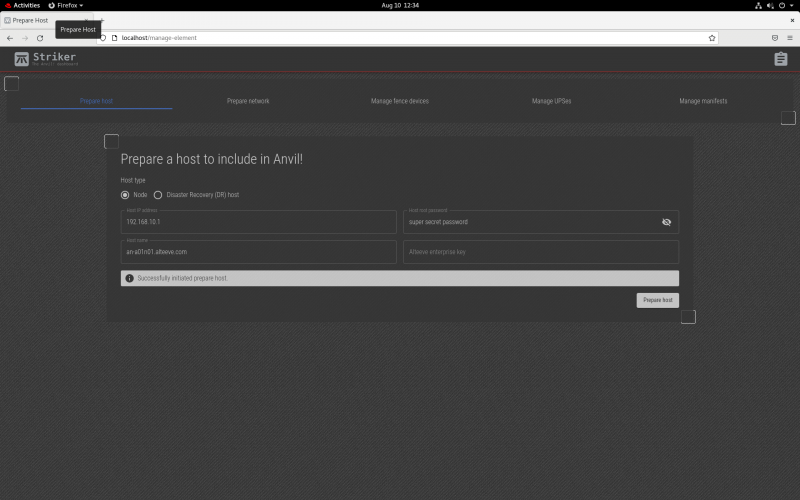

[[image:an-striker01-rhel8-m3-initialize-subnode-07.png|thumb|center|800px|Subnode initialization job saved.]] | |||

You'll see that the job has been saved! | |||

[[image:an-striker01-rhel8-m3-initialize-subnode-08.png|thumb|center|800px|Initializing another subnode.]]

To initialize a new subnode, just change the <span class="code">Host IP Address</span> to the next IP, and the form will revert to test the new connection. | |||

From there, you proceed as you did above. | |||

== Mapping Configuration == | |||

{{note|1=In the examples below, you will see <span class="code">an-a01dr01</span>. This is a DR host that will be discussed later in the tutorial.}}

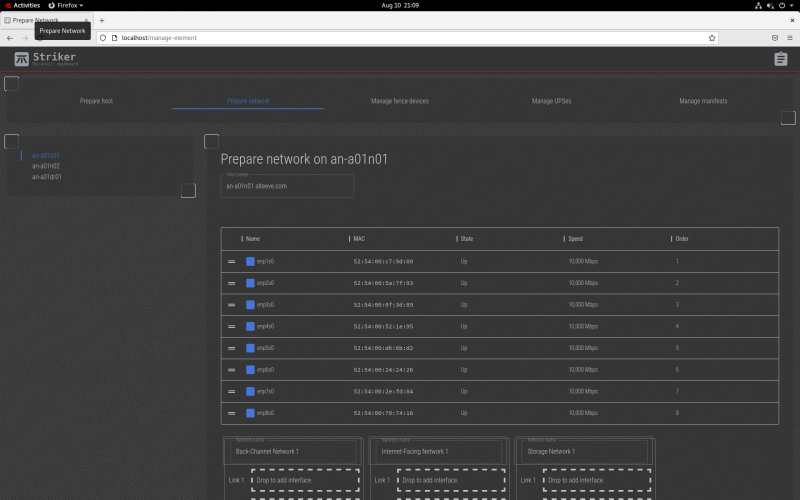

With the two subnodes initialized, we can now configure their networks! | |||

Subnodes must use redundant networks (specifically, <span class="code">active-passive</span> bonds). They must have network connections in the Back-Channel, Internet-Facing and Storage Networks. In our example here, we're also going to create a connection in the Migration Network, as we've got 8 interfaces to work with.

[[image:an-striker01-rhel8-m3-mapping-node-network-01.png|thumb|center|800px|Prepare Network menu]] | |||

With our subnodes initialized, we can now click on '''Prepare Network'''. This opens up a list of subnodes and DR hosts that are unconfigured. In our case, there are three unconfigured hosts; <span class="code">an-a01n01</span>, <span class="code">an-a01n02</span> and the DR host <span class="code">an-a01dr01</span>. The subnode <span class="code">an-a01n01</span> is already selected, so we'll begin with it. | |||

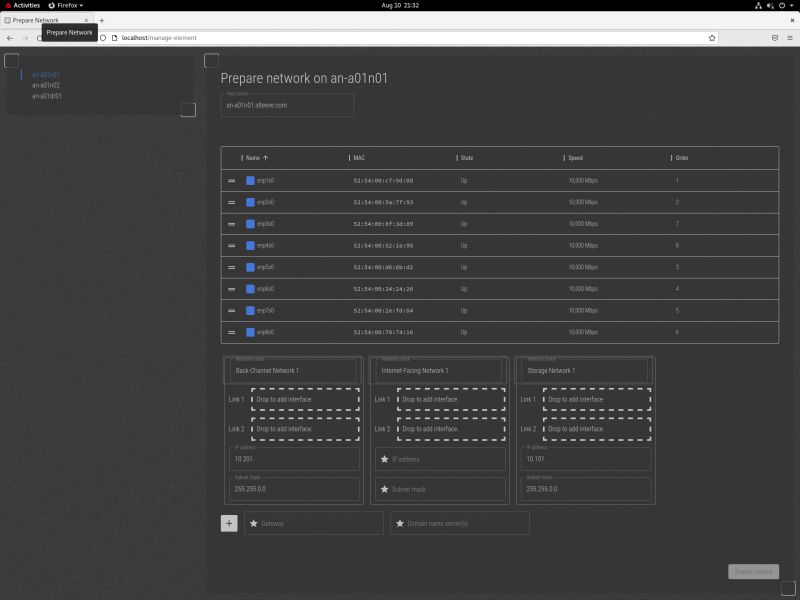

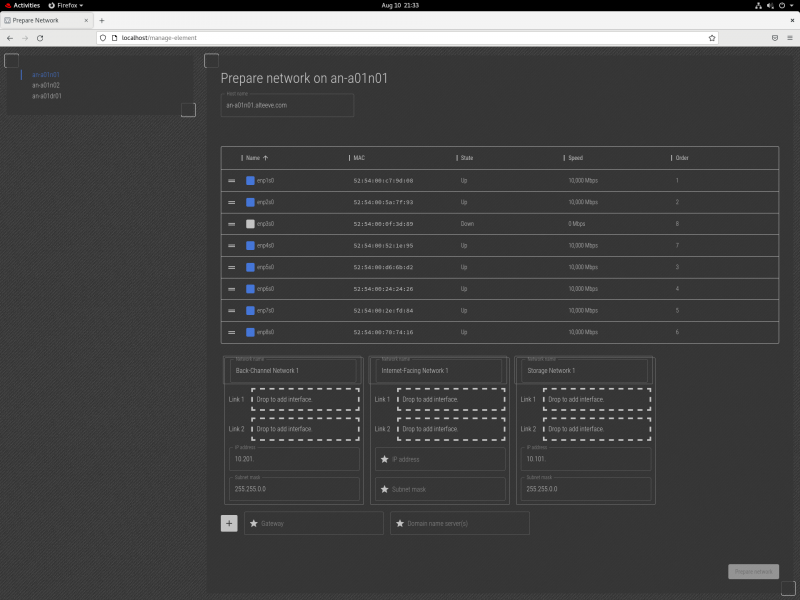

[[image:an-striker01-rhel8-m3-mapping-node-network-02.png|thumb|center|800px|List of network interfaces on 'an-a01n01'.]] | |||

This works the same way as how we configured the Striker, except for two things. First, and most obvious, there are a lot more interfaces to work with. | |||

{{todo|1=The ordering should show you which interface most recently link cycled, allowing the user to see which interface cycled during loss of connection. See github [https://github.com/ClusterLabs/anvil/issues/424 issue #424].}} | |||

Second, and more importantly, when we unplug the cable that the Striker dashboard is using to talk to the node, we will lose communication for a while. If that happens, you will not see the interface go '''down'''. When this happens, plug the interface back in, and move on to the next interface. When you're done mapping the network, only one interface and one slot will be left. | |||

Knowing this, if you want to unplug and plug in all interfaces in a known order (i.e., "BCN 1 - Link 1", "BCN 1 - Link 2", "IFN 1 - Link 1", "IFN 1 - Link 2", etc.), you can do so, then return to the web interface and map the Order number to the order you cycled interfaces, and know which is which.

For this tutorial though, we're going to cycle the interfaces one at a time.

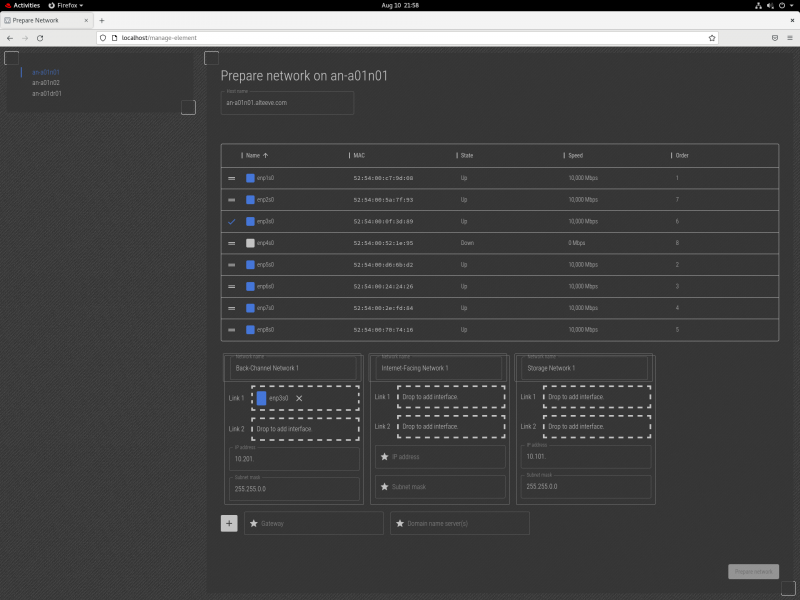

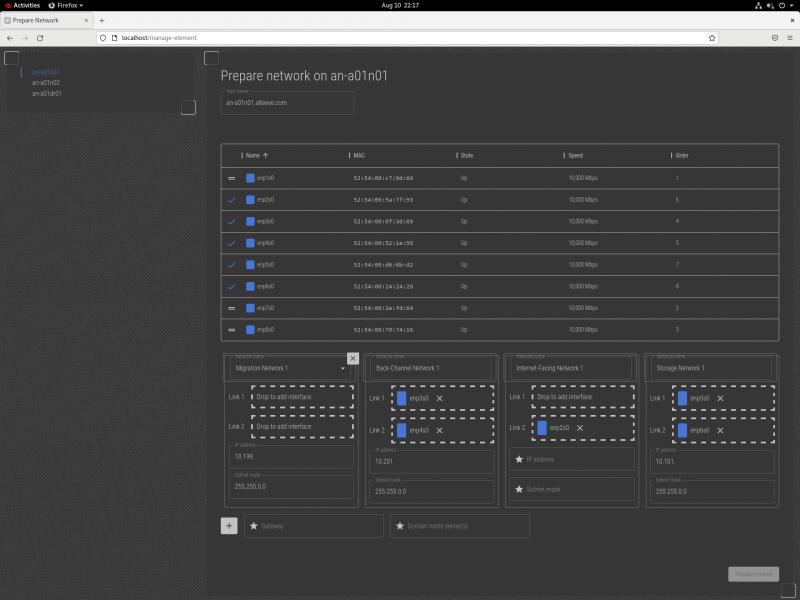

[[image:an-striker01-rhel8-m3-mapping-node-network-03.png|thumb|center|800px|The interface to set as "BCN 1 - Link 1" is unplugged.]] | |||

Let's start! Here, the network interface we want to make "BCN 1 - Link 1" is unplugged. With the cable unplugged, we can see that the <span class="code">enp3s0</span> interface is down. So we know that is the link we want. Click and drag it to the "Back-Channel Network 1" -> "Link 1" slot.

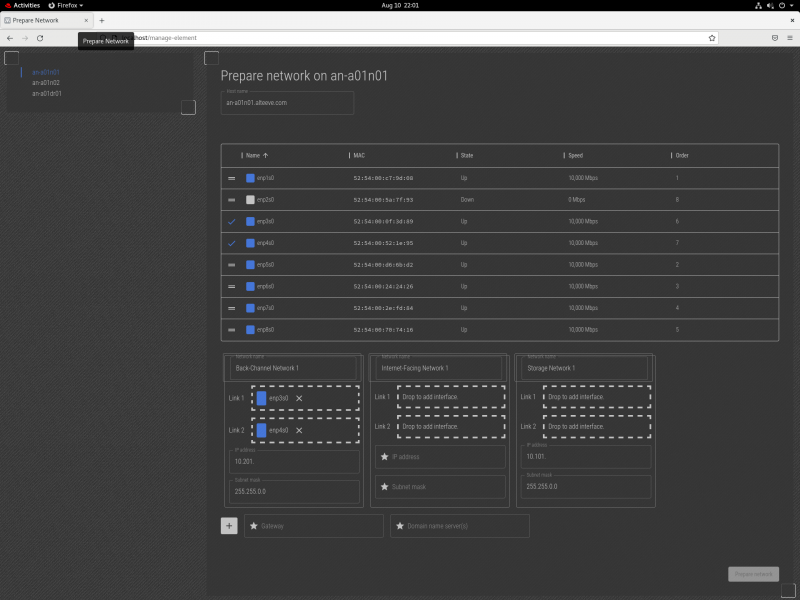

[[image:an-striker01-rhel8-m3-mapping-node-network-04.png|thumb|center|800px|The interface to set as "BCN 1 - Link 2" is unplugged.]] | |||

Next we see that <span class="code">enp4s0</span> is unplugged. So now we can drag that to the "Back-Channel Network 1" -> "Link 2" slot. | |||

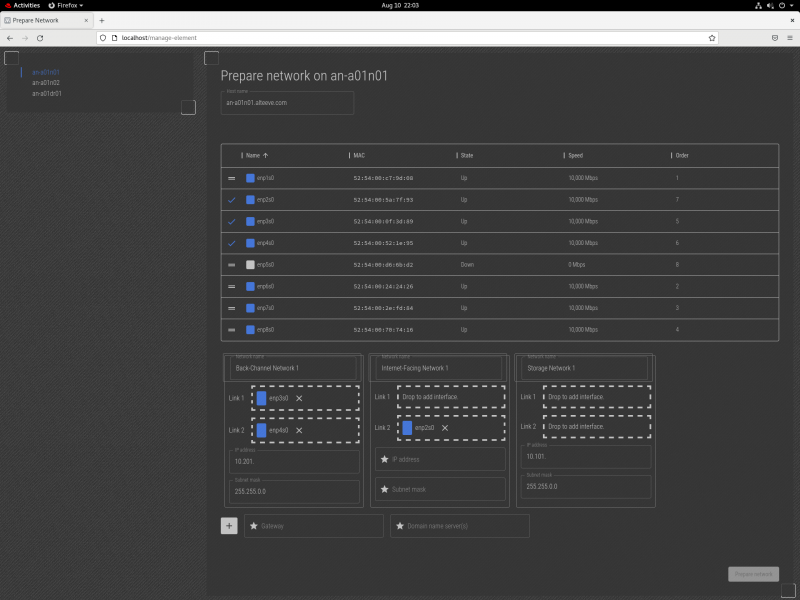

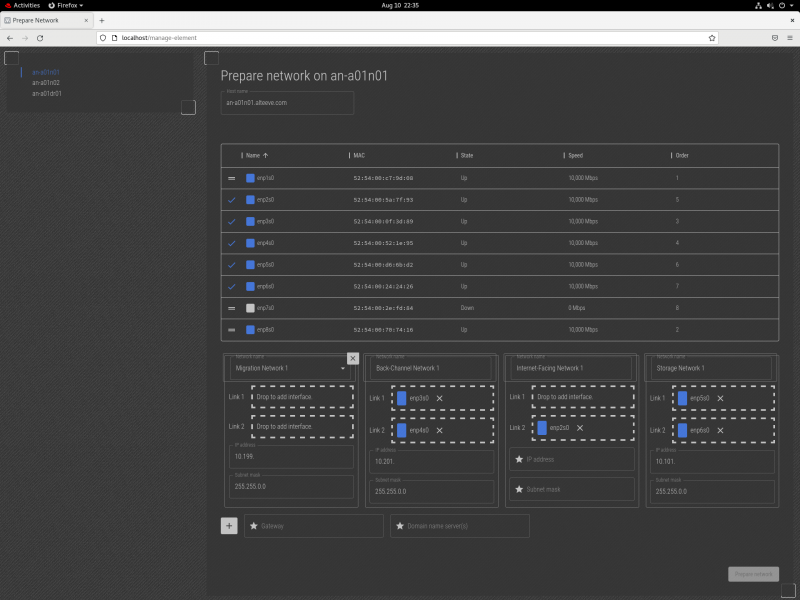

[[image:an-striker01-rhel8-m3-mapping-node-network-05.png|thumb|center|800px|The interface to set as "IFN 1 - Link 2" is unplugged.]] | |||

Now, we ran into the issue mentioned above. When we unplugged the cable to the interface we want to make "Internet-Facing Network, Link 1", we lost connection to the subnode, and could not see the interface go offline. For now, we'll skip it, plug it back in, and unplug the interface we want to be "Internet-Facing Network, Link 2". | |||

Next we see that <span class="code">enp2s0</span> is unplugged. So now we can drag that to the "Internet-Facing Network 1" -> "Link 2" slot. | |||

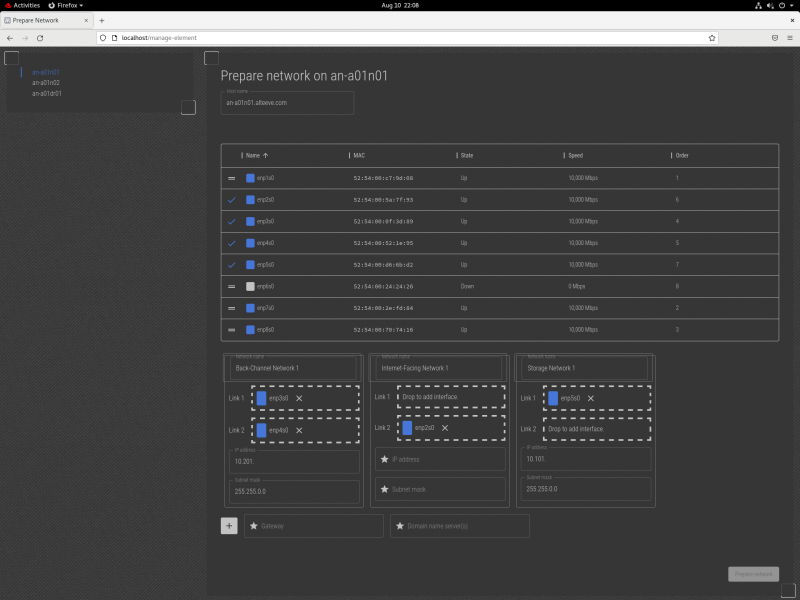

[[image:an-striker01-rhel8-m3-mapping-node-network-06.png|thumb|center|800px|The interface to set as "SN 1 - Link 1" is unplugged.]] | |||

Next, we'll unplug the cable going to the network interface we want to use as "Storage Network, Link 1". We see that <span class="code">enp5s0</span> is down, so we'll drag that to the "Storage Network 1" -> "Link 1" slot.

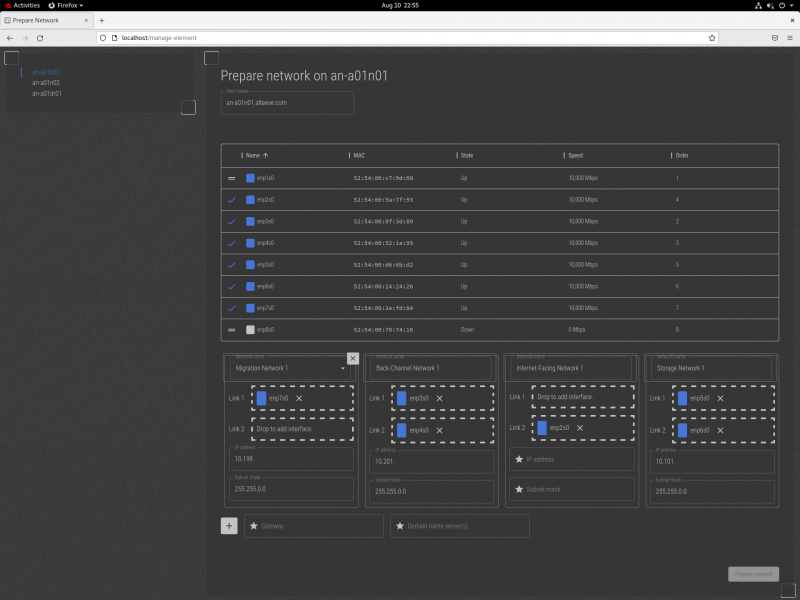

[[image:an-striker01-rhel8-m3-mapping-node-network-07.png|thumb|center|800px|The interface to set as "SN 1 - Link 2" is unplugged.]] | |||

Next, we'll unplug the cable going to the network interface we want to use as "Storage Network, Link 2". We see that <span class="code">enp6s0</span> is down, so we'll drag that to the "Storage Network 1" -> "Link 2" slot.

{{Note|1=In the near future, the ''Migration Network'' panel will auto-display when 8+ interfaces exist.}} | |||

[[image:an-striker01-rhel8-m3-mapping-node-network-08.png|thumb|center|800px|Adding the "Migration Network" Menu.]] | |||

We need to add the "Migration Network" panel, and to do that, click on the "+" icon at the lower left. It will add the panel, and then click on the "Network Name" to select "Migration Network 1". | |||

[[image:an-striker01-rhel8-m3-mapping-node-network-09.png|thumb|center|800px|The interface to set as "MN 1 - Link 1" is unplugged.]] | |||

Now we can get back to mapping, and here we've unplugged the network cable going to the "Migration Network, Link 1" and we see <span class="code">enp7s0</span> is down. So we can drag that into the "Migration Network 1" -> "Link 1" slot. | |||

[[image:an-striker01-rhel8-m3-mapping-node-network-10.png|thumb|center|800px|The interface to set as "MN 1 - Link 2" is unplugged.]] | |||

Lastly, we unplugged the network cable going to the "Migration Network, Link 2" and we see <span class="code">enp8s0</span> is down. So we can drag that into the "Migration Network 1" -> "Link 2" slot. | |||

With that, the only interface still not mapped is <span class="code">enp1s0</span>, so we can confirm that it is the interface we couldn't map earlier, and so it can be dragged into the "Internet-Facing Network 1" -> "Link 1" slot.

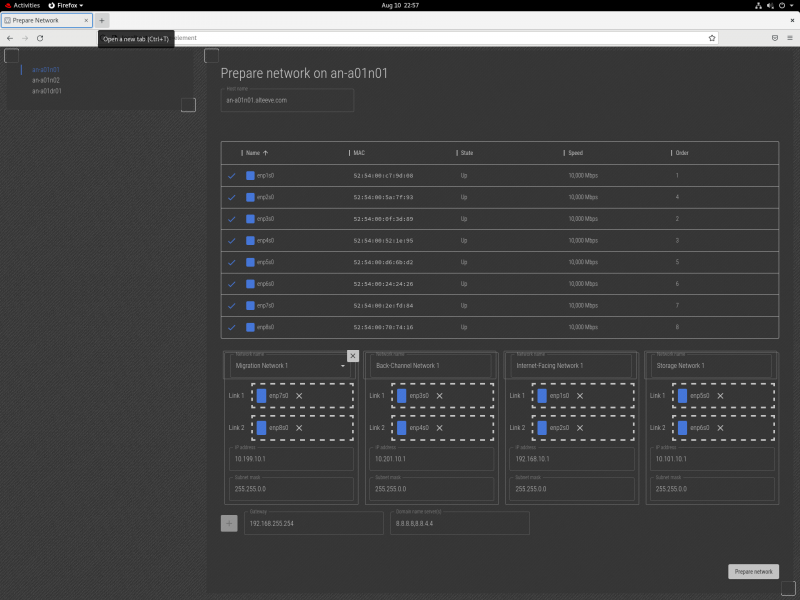

[[image:an-striker01-rhel8-m3-mapping-node-network-11.png|thumb|center|800px|All interfaces are mapped and IPs are assigned!]] | |||

The last step is to assign IP addresses. The subnet prefixes for all but the IFN are set, so you can add the IPs you want. Given this is Anvil! node 1, the IPs we want to set are: | |||

{| class="wikitable" | |||

!style="white-space:nowrap; text-align:center;"|host | |||

!style="white-space:nowrap; text-align:center;"|Back-Channel Network 1 | |||

!style="white-space:nowrap; text-align:center;"|Internet-Facing Network 1 | |||

!style="white-space:nowrap; text-align:center;"|Storage Network 1 | |||

!style="white-space:nowrap; text-align:center;"|Migration Network 1 | |||

|- | |||

!style="white-space:nowrap; text-align:left;"|<span class="code">an-a01n01</span> | |||

|style="white-space:nowrap; text-align:left;"|<span class="code">10.201.10.1/16</span> | |||

|style="white-space:nowrap; text-align:left;"|<span class="code">192.168.10.1/16</span> | |||

|style="white-space:nowrap; text-align:left;"|<span class="code">10.101.10.1/16</span> | |||

|style="white-space:nowrap; text-align:left;"|<span class="code">10.199.10.1/16</span> | |||

|- | |||

!style="white-space:nowrap; text-align:left;"|<span class="code">an-a01n02</span> | |||

|style="white-space:nowrap; text-align:left;"|<span class="code">10.201.10.2/16</span> | |||

|style="white-space:nowrap; text-align:left;"|<span class="code">192.168.10.2/16</span> | |||

|style="white-space:nowrap; text-align:left;"|<span class="code">10.101.10.2/16</span> | |||

|style="white-space:nowrap; text-align:left;"|<span class="code">10.199.10.2/16</span> | |||

|- | |||

!style="white-space:nowrap; text-align:left;"|<span class="code">an-a01dr01</span> | |||

|style="white-space:nowrap; text-align:left;"|<span class="code">10.201.10.3/16</span> | |||

|style="white-space:nowrap; text-align:left;"|<span class="code">192.168.10.3/16</span> | |||

|style="white-space:nowrap; text-align:left;"|<span class="code">10.101.10.3/16</span> | |||

|style="white-space:nowrap; text-align:left;"|<span class="code">n/a</span> | |||

|} | |||

{{note|1=You don't need to specify which network is the default gateway. The gateway IP you enter will be matched against each network's IP and subnet mask automatically to determine which network the gateway belongs to.}} | |||

{{note|1=You can specify multiple DNS servers using a comma to separate them, like <span class="code">8.8.8.8,8.8.4.4</span>. The order they're written will become the order they're searched.}} | |||
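The two notes above can be illustrated with a minimal sketch using Python's standard <span class="code">ipaddress</span> module. This is not the Anvil! code itself; the function names and the gateway address <span class="code">192.168.255.254</span> are assumptions made for the example, while the interface addresses are the ones listed for <span class="code">an-a01n01</span>.

```python
import ipaddress

# Interface addresses for an-a01n01, taken from the table above.
networks = {
    "bcn1": ipaddress.ip_interface("10.201.10.1/16"),
    "ifn1": ipaddress.ip_interface("192.168.10.1/16"),
    "sn1": ipaddress.ip_interface("10.101.10.1/16"),
    "mn1": ipaddress.ip_interface("10.199.10.1/16"),
}


def find_gateway_network(gateway, networks):
    """Return the name of the network whose subnet contains the gateway, or None."""
    gateway_ip = ipaddress.ip_address(gateway)
    for name, interface in networks.items():
        if gateway_ip in interface.network:
            return name
    return None


def parse_dns(dns):
    """Split a comma-separated DNS string, keeping the order as written."""
    return [str(ipaddress.ip_address(entry.strip())) for entry in dns.split(",")]


# Hypothetical gateway address, used only for illustration.
print(find_gateway_network("192.168.255.254", networks))
print(parse_dns("8.8.8.8,8.8.4.4"))
```

Because the gateway falls inside <span class="code">192.168.0.0/16</span>, it is matched to the IFN without the user having to say so.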

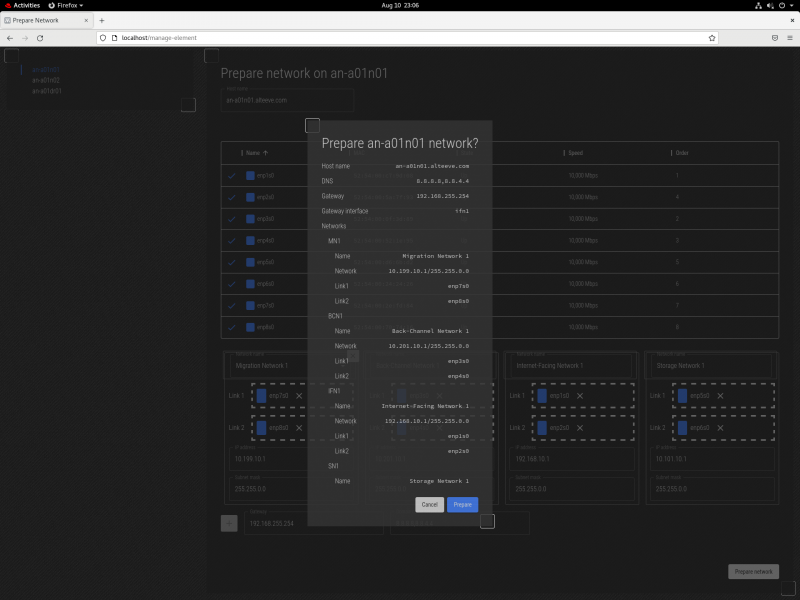

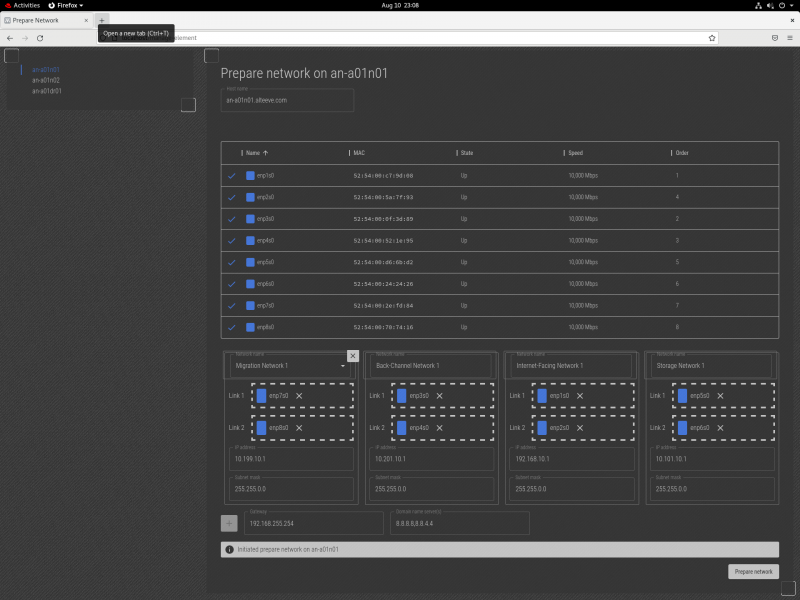

[[image:an-striker01-rhel8-m3-mapping-node-network-12.png|thumb|center|800px|Ready to configure.]] | |||

Double-check everything, and when you're happy, click on '''Prepare Network'''. You will be given a summary of what will be done. | |||

[[image:an-striker01-rhel8-m3-mapping-node-network-13.png|thumb|center|800px|Confirm and configure!]] | |||

After a few moments, you should see the mapped machine, <span class="code">an-a01n01</span> in this case, reboot. | |||

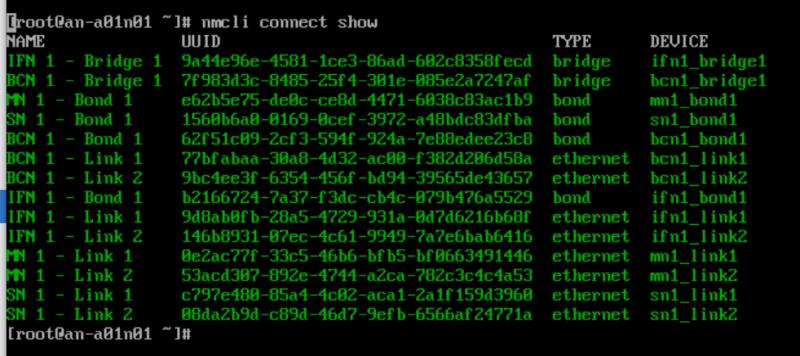

[[image:an-striker01-rhel8-m3-mapping-node-network-14.png|thumb|center|800px|Confirming the new network config on 'an-a01n01'.]] | |||

You do not need to log into it or do anything else at this point. If you're curious or want to confirm though, you can log into <span class="code">an-a01n01</span> and run either <span class="code">nmcli connection show</span> (as seen above) or <span class="code">ip addr list</span> to see the new configuration. | |||

{{note|1=Map the network on the other subnode(s) and DR host(s). Once all are mapped, we're ready to move on!}} | |||

== Adding Fence Devices == | |||

Before we begin, let's review why fence devices are so important. Here's an article that explains fencing and why it matters: | |||

* [[Fencing]] | |||

If you've worked with High-Availability Clusters before, you may have heard that you need to have a third "[[quorum]]" node for vote tie breaking. We humbly argue this is wrong, and explain why here: | |||

* [[The 2-Node Myth]] | |||

In our example Anvil! cluster, the subnodes have [[IPMI]] [[BMC]]s, and those will be the primary fence method. IPMI is special in that the Anvil! cluster can auto-detect and auto-configure BMCs. So even though we're going to configure fence devices here, we do '''not''' need to configure the IPMI BMCs manually. | |||

Our secondary method of fencing uses APC branded [[PDU]]s. These are basically smart power bars which, via a network connection, can be logged into and have individual outlets (ports) powered down or up on command. So, if for some reason the IPMI fence failed, the backup would be to log into a pair of PDUs (one per [[PSU]] on the target subnode) and cut the power. This way, we can sever all power to the target subnode, forcing it off. | |||

{{warning|1=PDUs do not have a mechanism for confirming that down-stream devices are off. So it's critical that the subnodes are actually plugged into the given PDU and outlet that we configure them to be on!}} | |||
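The ordering described above (IPMI first, PDUs as the backup, with every outlet feeding the target cut) can be sketched as follows. This is only an illustration of the logic, not how the Anvil! cluster or its fence agents are implemented; all function names are hypothetical and the fence actions are stubs.

```python
from typing import Callable, Optional, Sequence, Tuple


def pdu_fence(outlets_off: Sequence[Callable[[], bool]]) -> bool:
    """Succeed only if every outlet feeding the target was switched off."""
    # Attempt every outlet, even if an earlier one fails.
    results = [switch_off() for switch_off in outlets_off]
    return all(results)


def fence_target(methods: Sequence[Tuple[str, Callable[[], bool]]]) -> Optional[str]:
    """Try each fence method in order; return the first one that succeeds."""
    for name, attempt in methods:
        if attempt():
            return name
    return None


# Stubs standing in for real fence actions (assumptions for this example).
def ipmi_unreachable() -> bool:
    return False  # e.g. the BMC lost power along with the subnode


def outlet_off_ok() -> bool:
    return True


used = fence_target([
    ("ipmi", ipmi_unreachable),
    ("pdu", lambda: pdu_fence([outlet_off_ok, outlet_off_ok])),
])
print(used)  # prints "pdu"
```

Note that the PDU method counts as successful only when both outlets report off, which is why the outlet mapping has to match the physical cabling.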

At this point, we're not going to configure nodes and ports. The purpose of this stage of the configuration is simply to define which PDUs exist. So let's add a pair. | |||

We're going to be configuring a pair of [[APC]] [[AP7900B]] PDUs. | |||

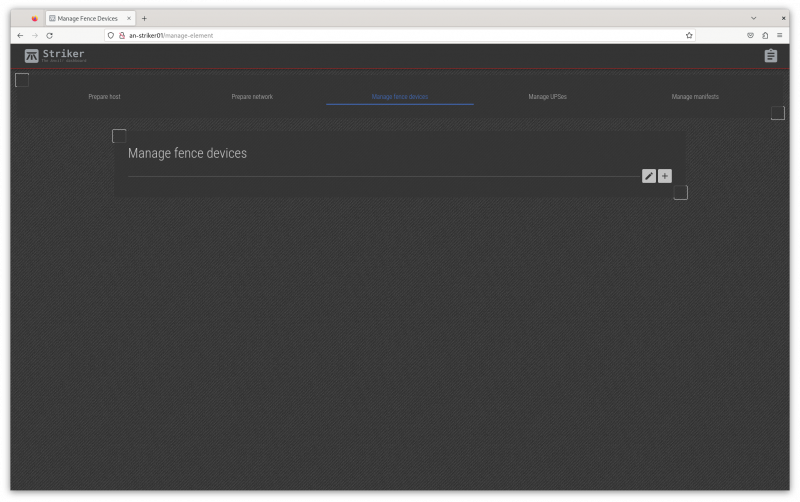

[[image:an-striker01-rhel8-m3-fence-setup-01.png|thumb|center|800px|The Fence device page.]] | |||

Clicking on the '''Manage Fence Devices''' section of the top bar will open the Fence menu. | |||

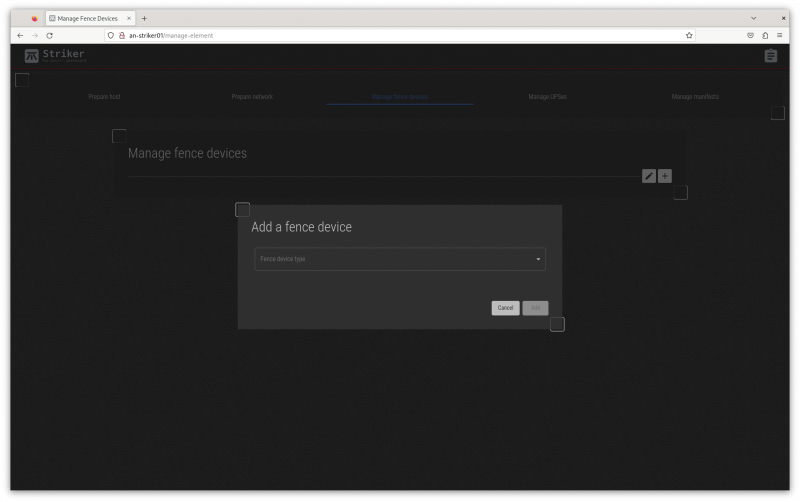

[[image:an-striker01-rhel8-m3-fence-setup-02.png|thumb|center|800px|The new fence device menu.]] | |||

Click on the '''+''' icon on the right to open the new fence device menu. | |||

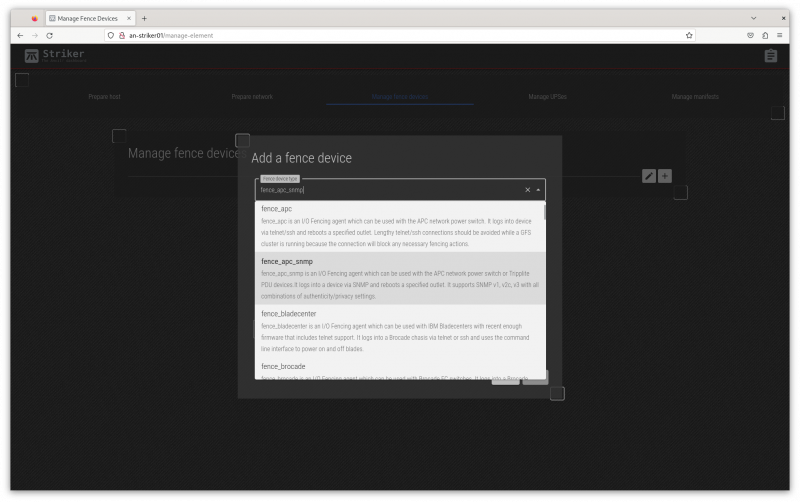

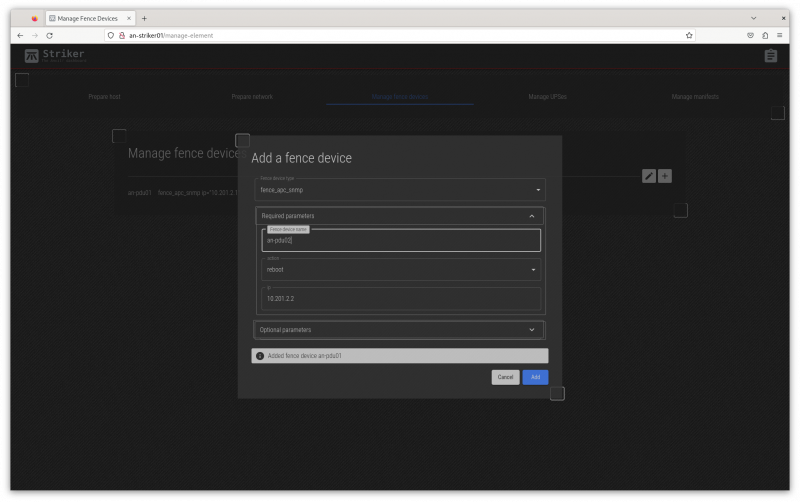

[[image:an-striker01-rhel8-m3-fence-setup-04.png|thumb|center|800px|The <span class="code">fence_apc_snmp</span> option.]] | |||

{{note|1=There are two fence agents for APC PDUs. Be sure to select '<span class="code">fence_apc_snmp</span>'!}} | |||

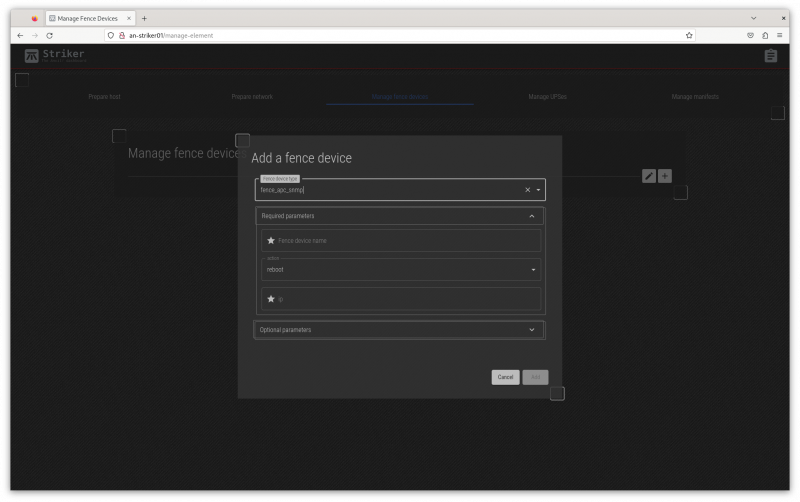

[[image:an-striker01-rhel8-m3-fence-setup-03.png|thumb|center|800px|The <span class="code">fence_apc_snmp</span> parameter menu.]] | |||

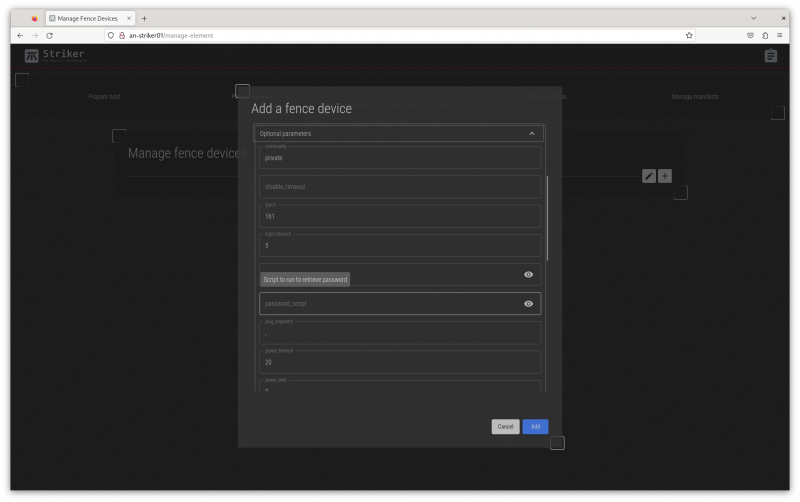

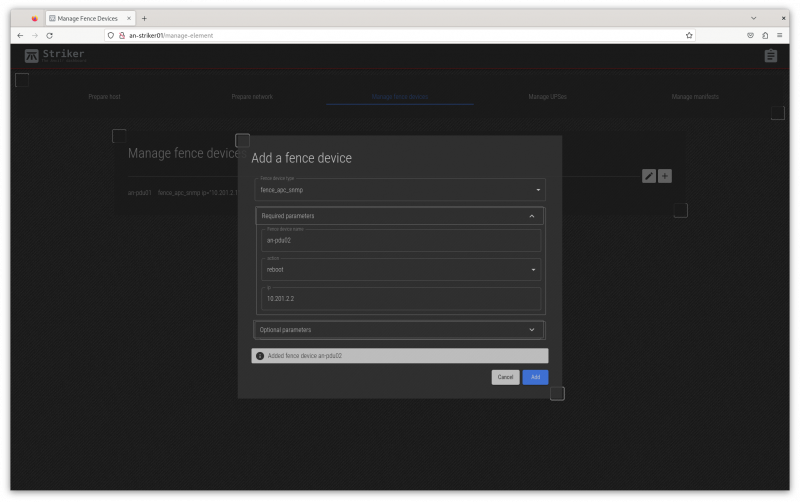

Every fence agent has a series of parameters that are required, and some that are optional. The required fields are displayed first, and the optional fields can be displayed by clicking on '''Optional Parameters''' to expand the list of those parameters. | |||

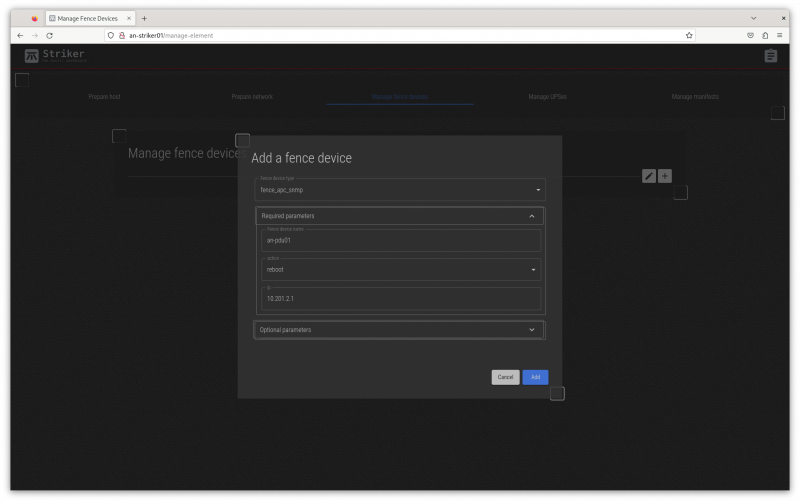

[[image:an-striker01-rhel8-m3-fence-setup-05.png|thumb|center|800px|Required parameters set.]] | |||

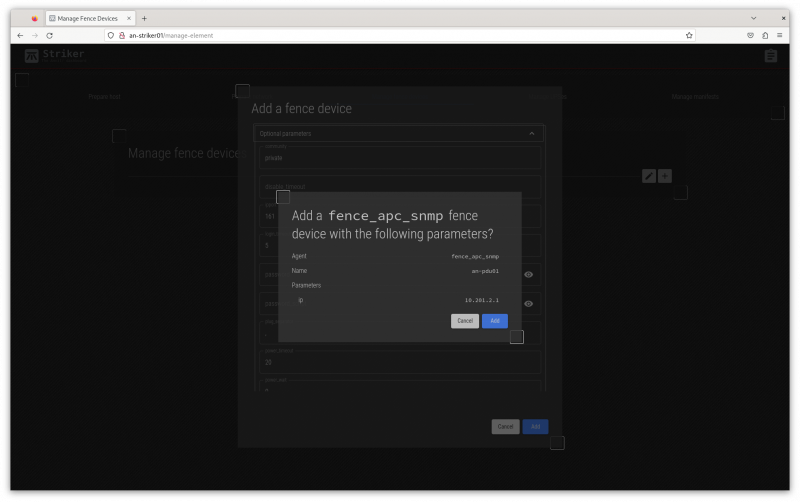

In our example, we'll first add ''<span class="code">an-pdu01</span>'' as the name, we will leave ''<span class="code">action</span>'' as <span class="code">reboot</span>, and we'll set the IP address to <span class="code">10.201.2.1</span>. | |||

[[image:an-striker01-rhel8-m3-fence-setup-06.png|thumb|center|800px|Showing some of the optional parameters.]] | |||

We do not need to set any of the optional parameters, but above we show what the menu looks like. Note how mousing over a field will display its help (as provided by the fence agent itself). | |||

[[image:an-striker01-rhel8-m3-fence-setup-07.png|thumb|center|800px|Showing the summary.]] | |||

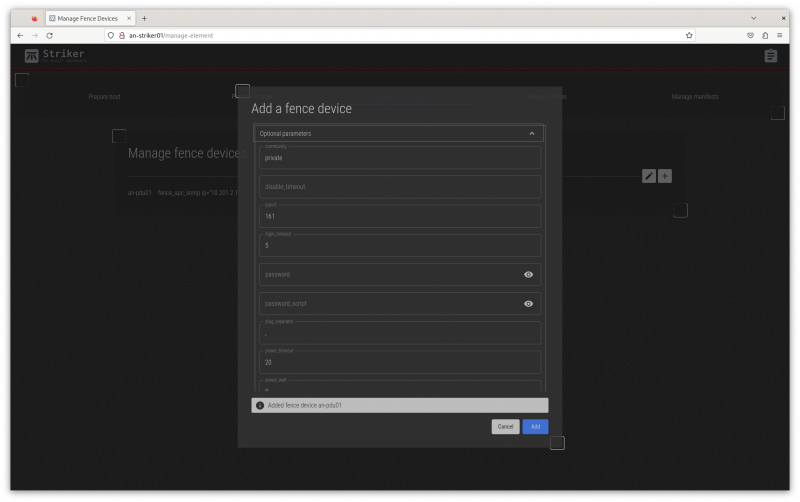

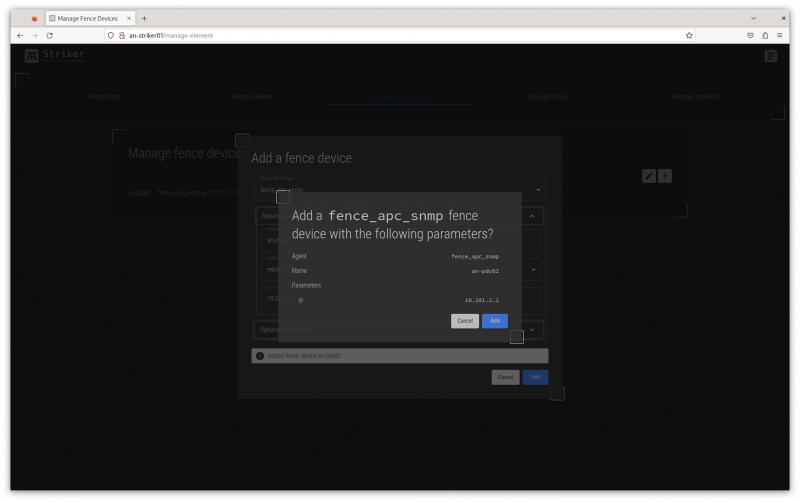

Click on '''Add''' to see a summary of what you entered. Check it over, and if you're happy, click on '''Add''' again to save it. | |||

[[image:an-striker01-rhel8-m3-fence-setup-08.png|thumb|center|800px|The new fence device has been saved.]] | |||

The new device is saved! | |||

[[image:an-striker01-rhel8-m3-fence-setup-09.png|thumb|center|800px|Updating the field for <span class="code">an-pdu02</span>.]] | |||

Go back up and change the name and IP, and we can save the second PDU. | |||

[[image:an-striker01-rhel8-m3-fence-setup-10.png|thumb|center|800px|Confirming the new <span class="code">an-pdu02</span> parameters.]] | |||

As before, review and confirm the new values are correct. | |||

[[image:an-striker01-rhel8-m3-fence-setup-11.png|thumb|center|800px|Saving the new <span class="code">an-pdu02</span> fence device.]] | |||

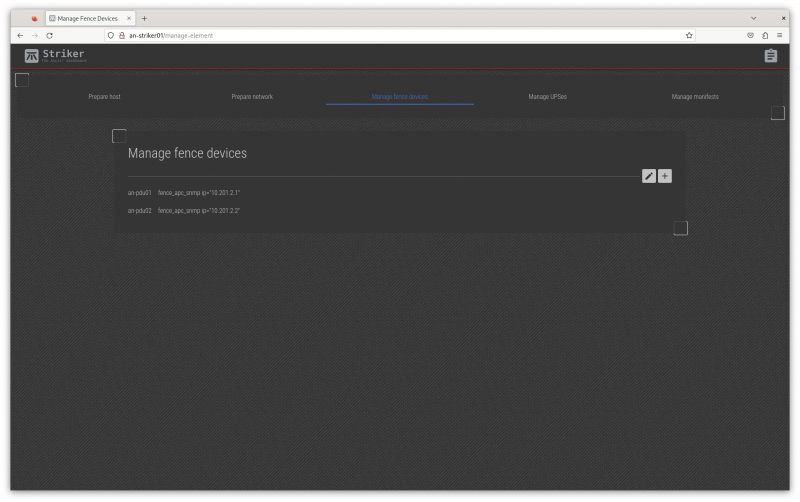

And saved! Now click ''Cancel'' to close the menu. | |||

[[image:an-striker01-rhel8-m3-fence-setup-12.png|thumb|center|800px|The two new fence devices are now visible.]] | |||

Now we can see the two new fence devices that we added! | |||

== Adding UPSes == | |||

The UPSes provide emergency power if mains power is lost. Just as importantly, Scancore uses the power status to watch for power problems before they turn into power outages. | |||

We're going to be configuring a pair of [[APC]] [[SMT1500RM2U]] UPSes. | |||

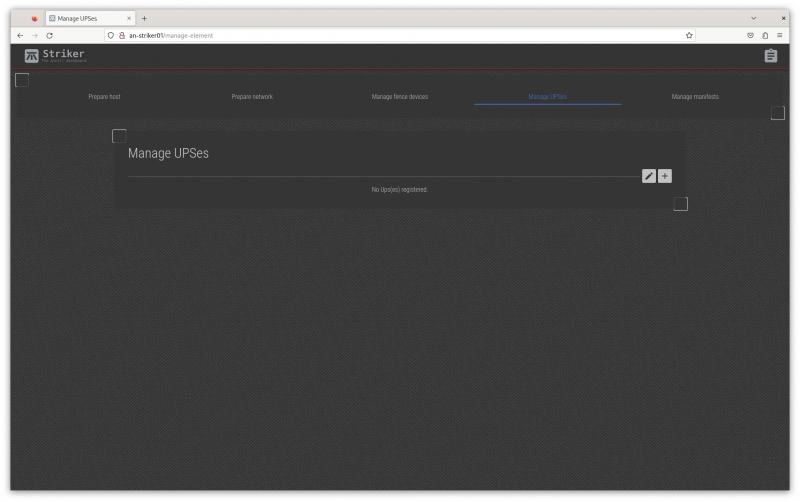

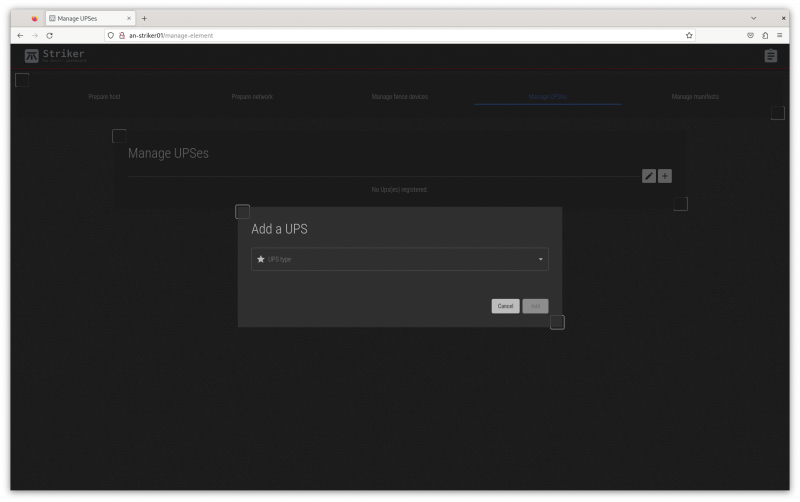

[[image:an-striker01-rhel8-m3-ups-setup-01.png|thumb|center|800px|The "Manage UPSes" page.]] | |||

Clicking on the '''Manage UPSes''' tab brings up the UPSes menu. | |||

[[image:an-striker01-rhel8-m3-ups-setup-02.png|thumb|center|800px|The new UPS type select option.]] | |||

Click on '''+''' to add a new UPS. | |||

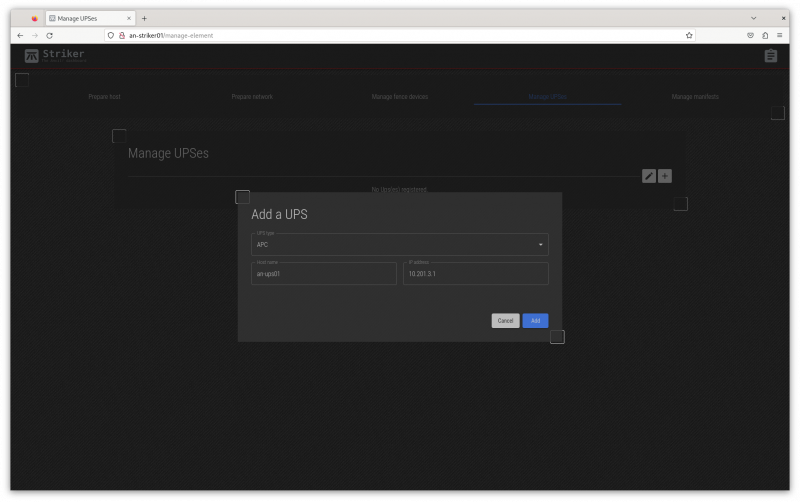

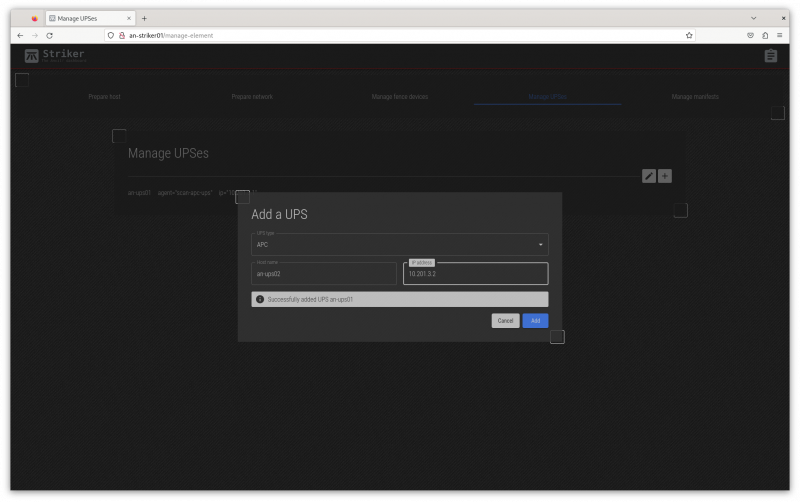

[[image:an-striker01-rhel8-m3-ups-setup-03.png|thumb|center|800px|Adding a new APC UPS.]] | |||

{{note|1=At this time, only APC brand UPSes are supported. If you would like a certain brand to be supported, please [[contact us]] and we'll try to add support.}} | |||

Select ''APC'' and then enter the name and IP address. For our first UPS, we'll use the name <span class="code">an-ups01</span> and the IP address <span class="code">10.201.3.1</span>. | |||

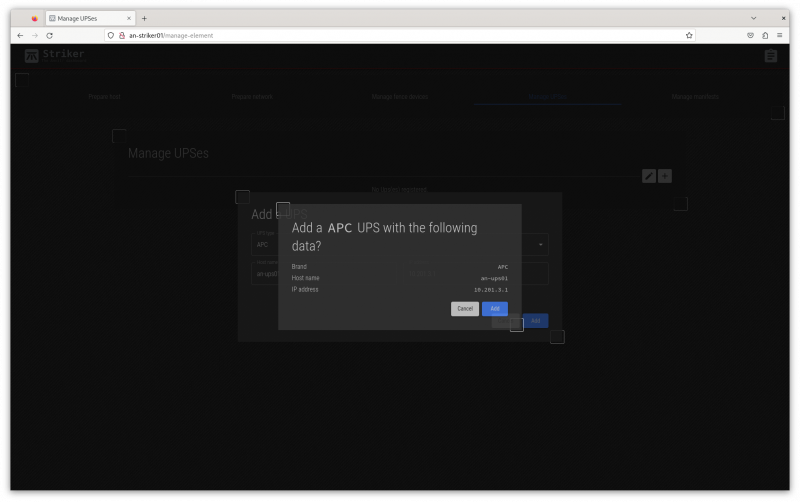

[[image:an-striker01-rhel8-m3-ups-setup-04.png|thumb|center|800px|Confirming the new UPS.]] | |||

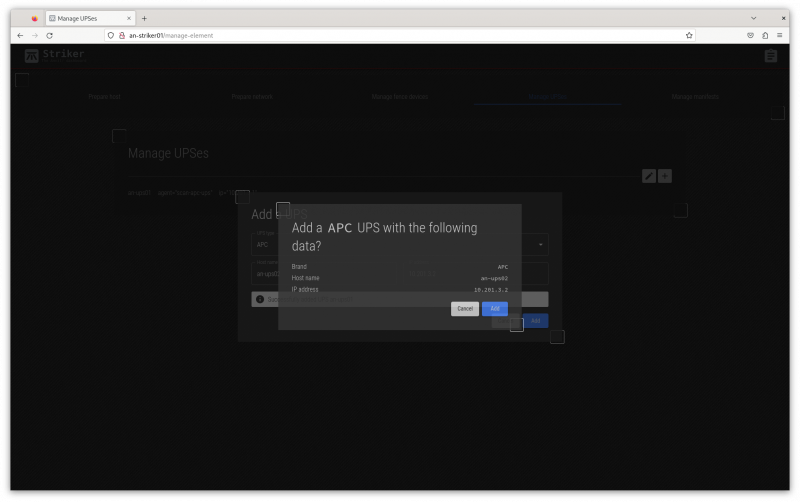

Click on ''Add'' and confirm that what you entered is accurate. | |||

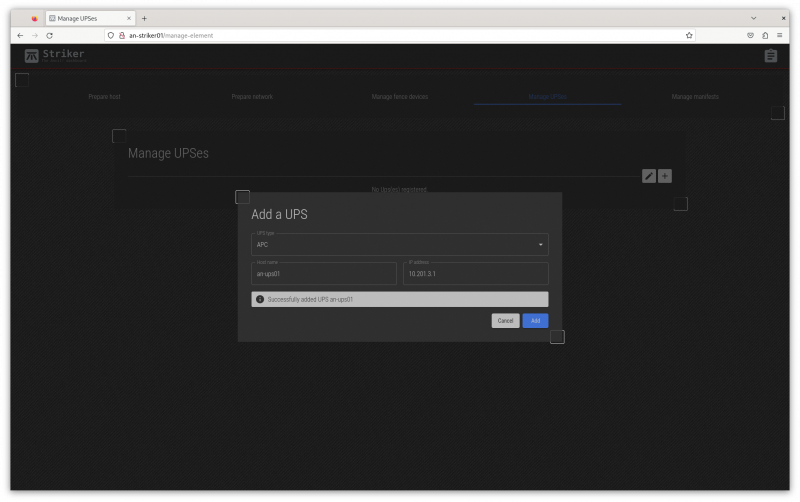

[[image:an-striker01-rhel8-m3-ups-setup-05.png|thumb|center|800px|Saving the new UPS.]] | |||

Click on ''Add'' again to save the new UPS. | |||

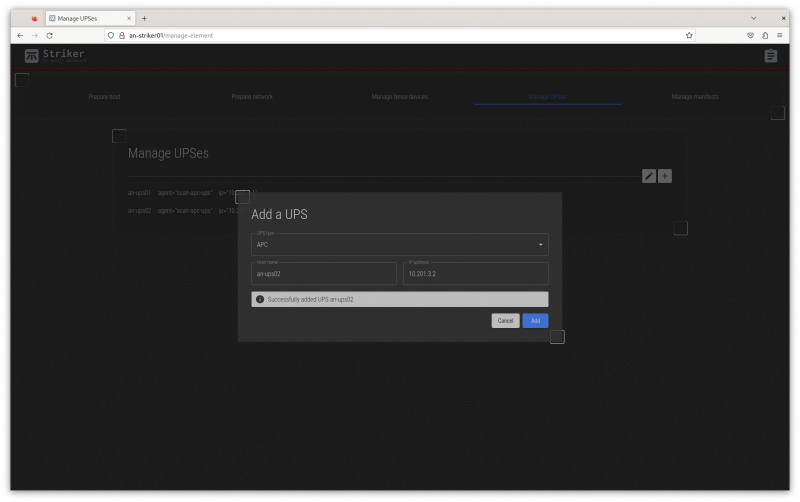

[[image:an-striker01-rhel8-m3-ups-setup-06.png|thumb|center|800px|Updating the form for the second UPS.]] | |||

Edit the name and IP for the second UPS. | |||

[[image:an-striker01-rhel8-m3-ups-setup-07.png|thumb|center|800px|Confirm the second UPS.]] | |||

Confirm the data for the second UPS is accurate, then click ''Add'' again to save. | |||

[[image:an-striker01-rhel8-m3-ups-setup-08.png|thumb|center|800px|The second UPS is saved.]] | |||

The second UPS has been saved! | |||

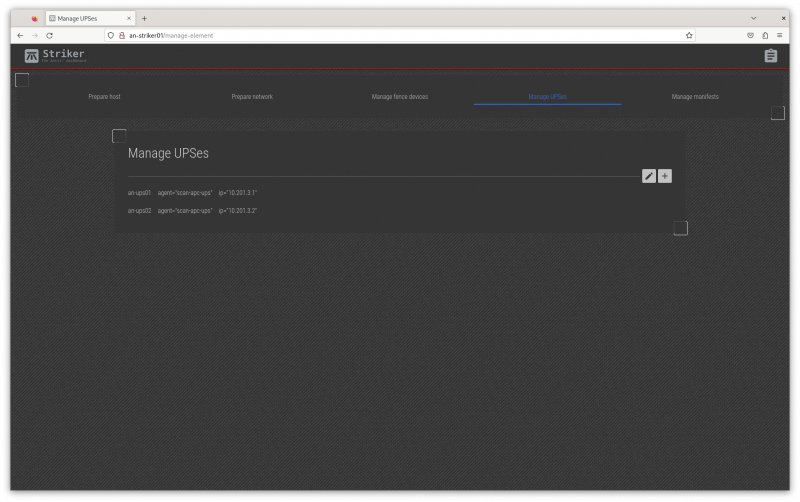

[[image:an-striker01-rhel8-m3-ups-setup-09.png|thumb|center|800px|The UPSes are now recorded.]] | |||

Press ''Cancel'' to close the form and you will see your new UPSes! | |||

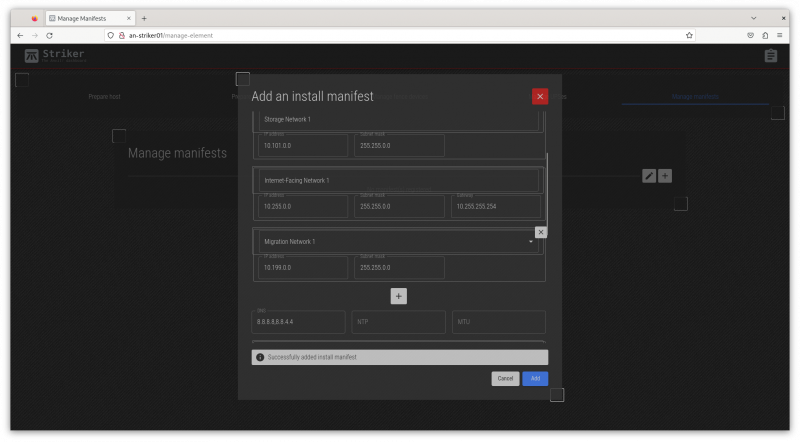

== Creating an 'Install Manifest' == | |||

Before we start, we need to discuss what an "Install Manifest" is. | |||

In the simplest form, an Install Manifest is a "recipe" that defines how a node is to be configured. It links specific machines to be subnodes, sets their host names and IPs, and links subnodes to fence devices and UPSes. | |||

So let's start. | |||

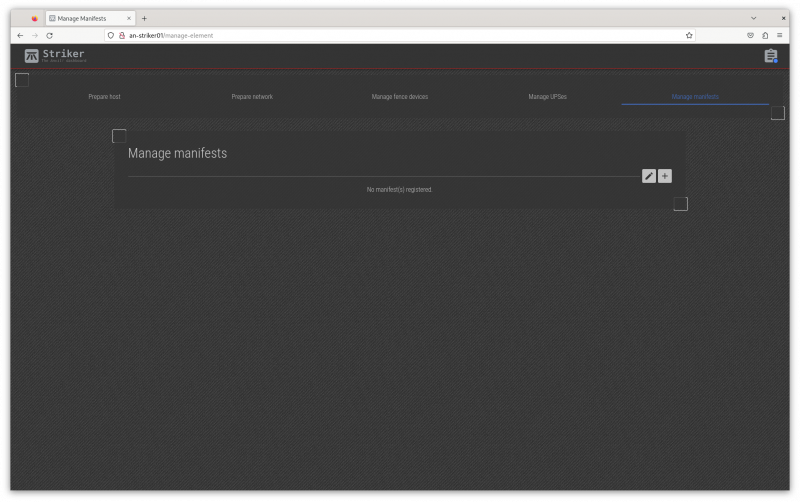

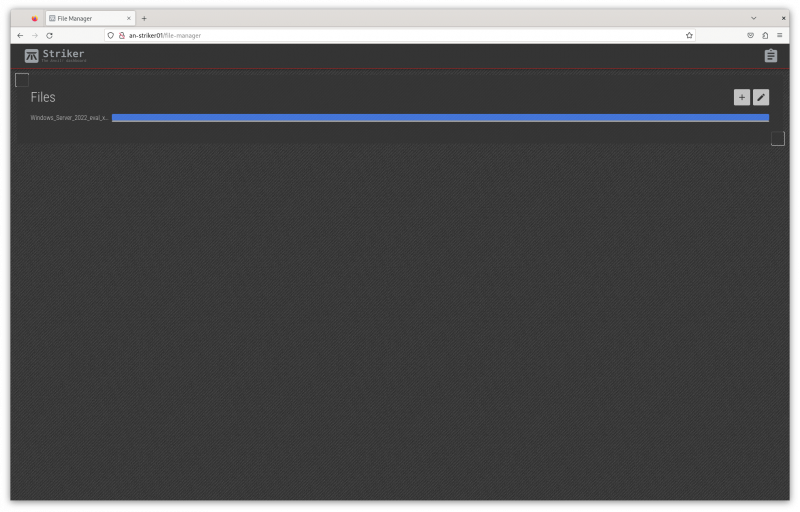

[[image:an-striker01-rhel8-m3-create-install-manifest-01.png|thumb|center|800px|The "Install Manifest" tab.]] | |||

Click on the "Install Manifest" tab to open the manifest menu. | |||

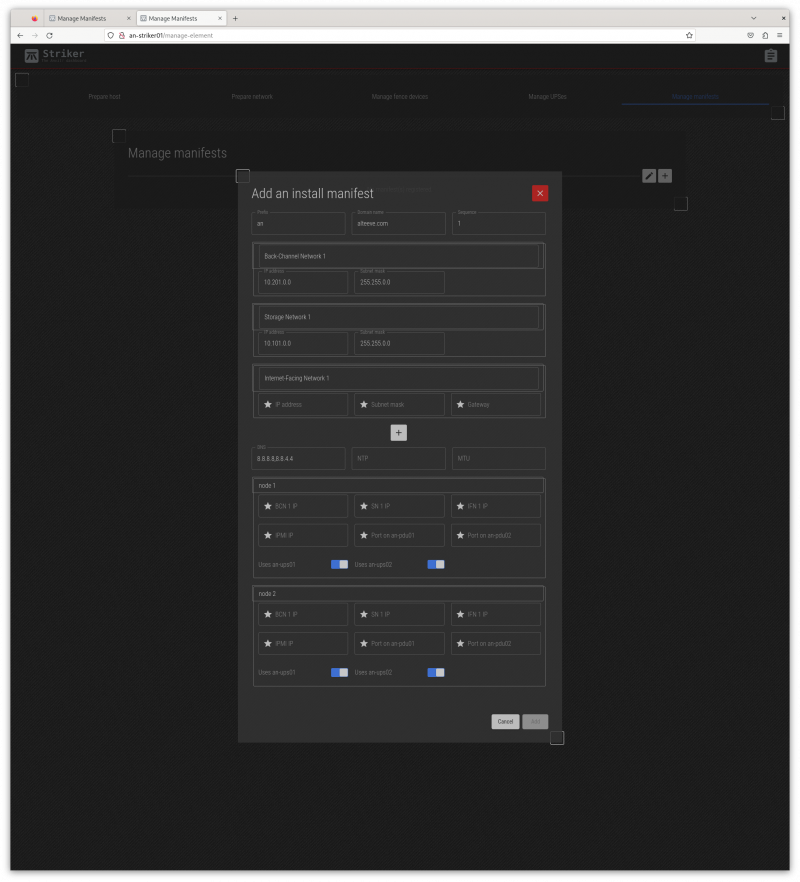

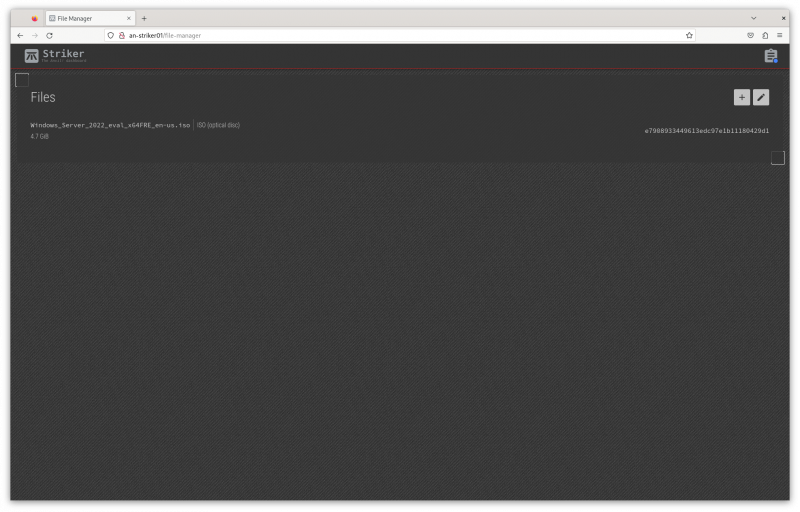

[[image:an-striker01-rhel8-m3-create-install-manifest-02.png|thumb|center|800px|The "Install Manifest" menu.]] | |||

The manifest menu has several fields. Let's look at them: | |||

{| class="wikitable" | |||

!style="white-space:nowrap; text-align:left;"|Prefix | |||

|This is a prefix, 1 to 5 characters long, used to identify where the node will be used. It's a short, descriptive string attached to the start of short host names. | |||

|- | |||

!style="white-space:nowrap; text-align:left;"|Domain Name | |||

|This is the domain name used to describe the owner / operator of the node. | |||

|- | |||

!style="white-space:nowrap; text-align:left;"|Sequence | |||

|Starting at '1', this indicates the node's sequence number. This will be used to determine the IP addresses assigned to the subnodes. See [[Anvil! Networking]] for how this works in detail. | |||

|- | |||

!style="white-space:nowrap; text-align:left;"|Networks Group | |||

|The first three network fields define the subnets, and are not where actual IPs are assigned. Below the default three networks is a '''+''' button to add additional networks. | |||

|- | |||

!style="white-space:nowrap; text-align:left;"|DNS | |||

|This is where the DNS IP(s) can be assigned. You can specify multiple servers using a comma-separated list with no spaces (e.g. <span class="code">8.8.8.8,8.8.4.4</span>). | |||

|- | |||

!style="white-space:nowrap; text-align:left;"|NTP | |||

|If you run your own [[NTP]] server, particularly if you restrict access to outside time servers, define your NTP servers here, as a comma-separated list if there are multiple. | |||

|- | |||

!style="white-space:nowrap; text-align:left;"|MTU | |||

|If you are certain that your network supports MTUs over 1500, you can set the desired MTU here. '''NOTE''': This is not well tested yet, and its use is not yet advised. Per-network MTU support is planned for later. See [https://github.com/ClusterLabs/anvil/issues/435 Issue #435]. | |||

|- | |||

!style="white-space:nowrap; text-align:left;"|Subnode 1 and 2 | |||

|This section is where you set each subnode's IP addresses, select which UPSes power the subnode and, where needed, enter the fence device ports used to isolate the subnode in an emergency. | |||

|} | |||
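The constraints in the table above can be expressed as a small pre-flight check. This is a sketch only, not part of the Anvil! software; the function names are hypothetical, and the <span class="code">prefix-anvil-NN</span> name format is inferred from the <span class="code">an-anvil-01</span> example used in this tutorial.

```python
import ipaddress


def validate_manifest(prefix, sequence, dns):
    """Return a list of problems found in the manifest fields (empty if none)."""
    errors = []
    if not 1 <= len(prefix) <= 5:
        errors.append("prefix must be 1 to 5 characters long")
    if sequence < 1:
        errors.append("sequence starts at 1")
    if " " in dns:
        errors.append("DNS list must not contain spaces")
    else:
        for entry in dns.split(","):
            try:
                ipaddress.ip_address(entry)
            except ValueError:
                errors.append(f"invalid DNS IP: {entry!r}")
    return errors


def manifest_name(prefix, sequence):
    """Build a node name in the style of this tutorial's example (an assumption)."""
    return f"{prefix}-anvil-{sequence:02d}"


print(validate_manifest("an", 1, "8.8.8.8,8.8.4.4"))  # prints []
print(manifest_name("an", 1))  # prints an-anvil-01
```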

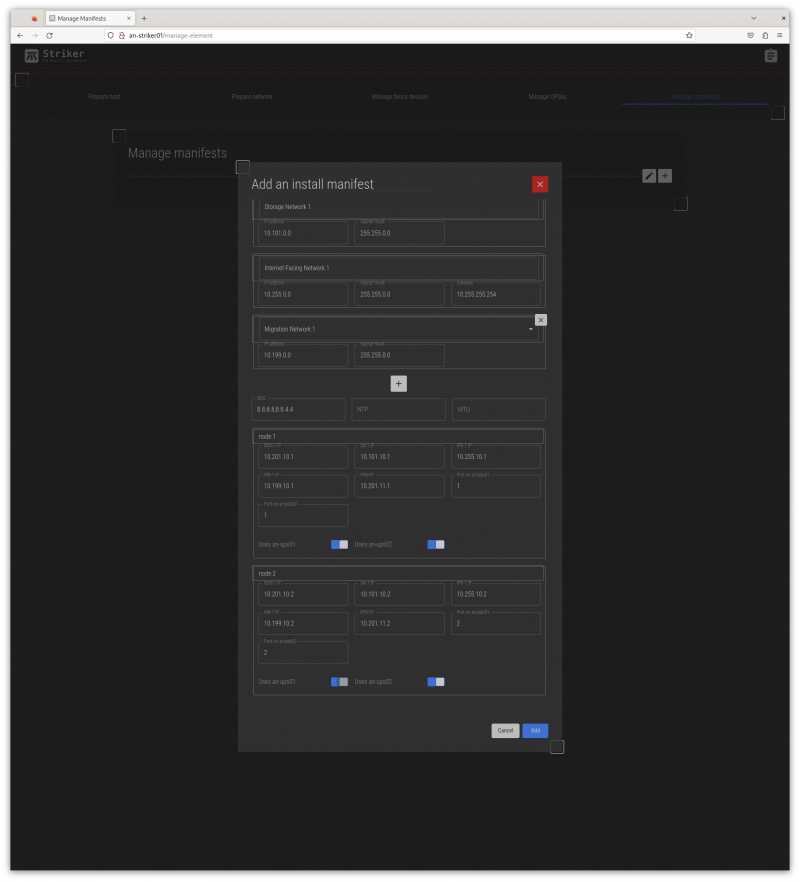

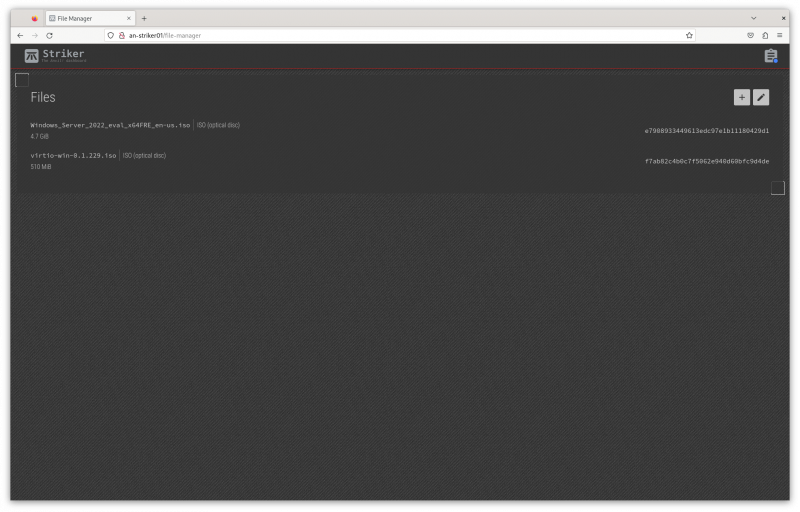

[[image:an-striker01-rhel8-m3-create-install-manifest-03.png|thumb|center|800px|The completed "Install Manifest" menu for node 1, "an-anvil-01".]] | |||

Below this are the networks being used on this Anvil! node. The [[BCN]], [[SN]] and [[IFN]] are always defined. In our case, we also have a [[MN]], so we'll click on the '''+''' to add it. | |||

{{note|1=In our example, we're using APC PDUs. It's critical that the ports entered into the fence device port correspond accurately to the numbered outlets on the PDUs! The ports specified '''must''' map to the outlets powering the subnode.}} | |||
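Software cannot verify the physical cabling, but a quick consistency check of the planned port assignments can catch data-entry slips before they are saved. The sketch below is an illustration under assumptions: the outlet numbers are hypothetical, and the function is not part of the Anvil! tooling.

```python
def check_outlets(assignments, pdus):
    """Report subnodes missing a PDU outlet and outlets assigned more than once."""
    problems = []
    seen = {}
    for subnode, ports in assignments.items():
        # Each subnode should have one outlet on every PDU (one per PSU).
        for pdu in pdus:
            if pdu not in ports:
                problems.append(f"{subnode} has no outlet on {pdu}")
        # No outlet may be claimed by two subnodes.
        for pdu, outlet in ports.items():
            key = (pdu, outlet)
            if key in seen:
                problems.append(
                    f"{pdu} outlet {outlet} is assigned to both {seen[key]} and {subnode}"
                )
            else:
                seen[key] = subnode
    return problems


# Hypothetical outlet numbers, for illustration only.
plan = {
    "an-a01n01": {"an-pdu01": 1, "an-pdu02": 1},
    "an-a01n02": {"an-pdu01": 2, "an-pdu02": 2},
}
print(check_outlets(plan, ["an-pdu01", "an-pdu02"]))  # prints []
```

A clean result here only means the plan is internally consistent; the outlets still have to be checked by hand against the power cables.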

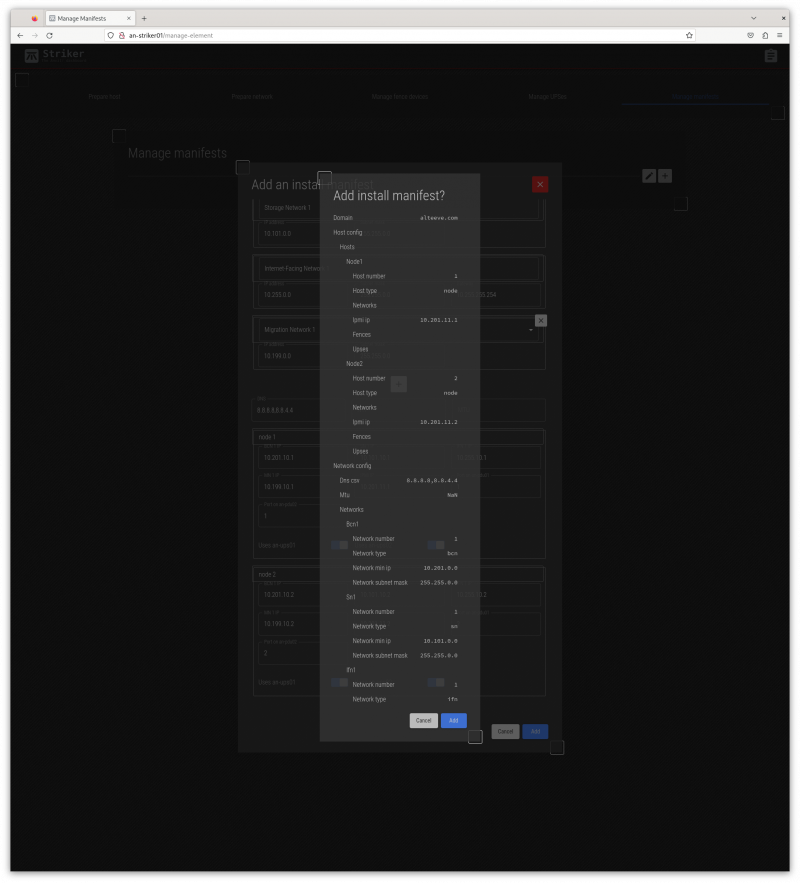

[[image:an-striker01-rhel8-m3-create-install-manifest-04.png|thumb|center|800px|The new "Install Manifest" confirmation.]] | |||

Click ''Add'', and you'll see a summary of the manifest. Review the summary carefully. | |||

[[image:an-striker01-rhel8-m3-create-install-manifest-05.png|thumb|center|800px|The new "Install Manifest" is saved.]] | |||

If you're happy, click ''Add''. You'll see the saved message, and then you can click on the red '''X''' to close the menu. | |||

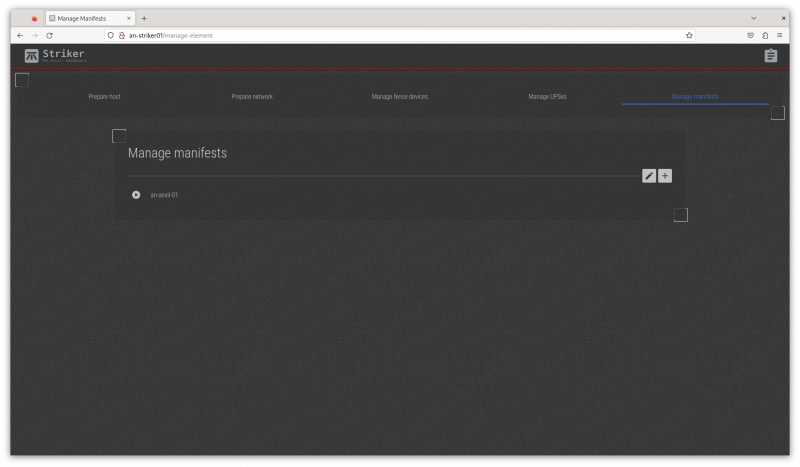

[[image:an-striker01-rhel8-m3-create-install-manifest-06.png|thumb|center|800px|The new manifest exists.]] | |||

Now we see the new <span class="code">an-anvil-01</span> install manifest! | |||

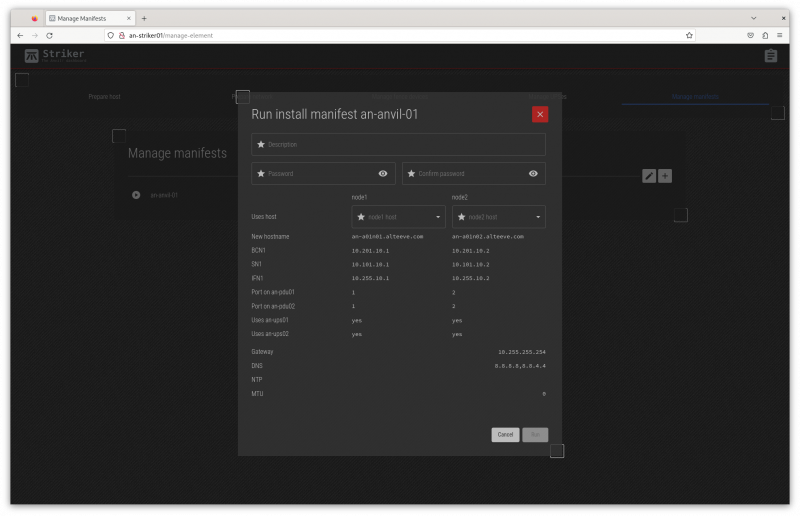

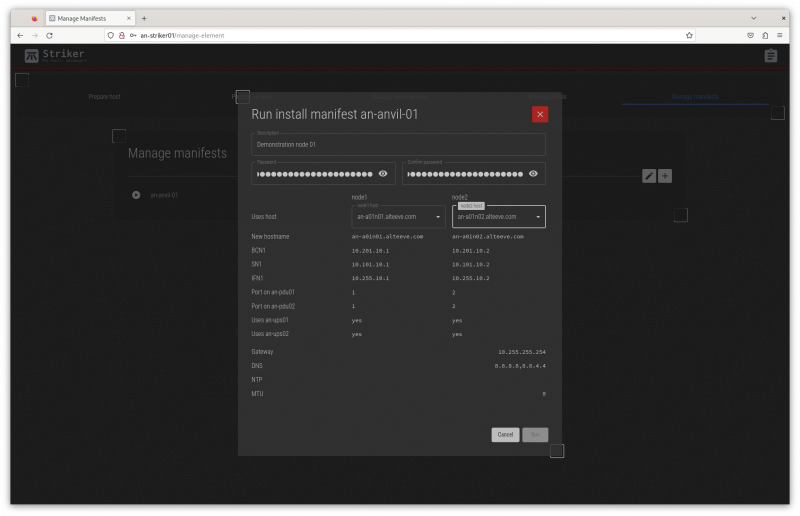

== Running an Install Manifest == | |||

Now we're ready to create our first Anvil! node! | |||

To build an Anvil! node, we use the manifest to take the configuration we want and apply it to the physical subnodes we prepared earlier. This will take those two unassigned subnodes and tie them together into an Anvil! node. | |||

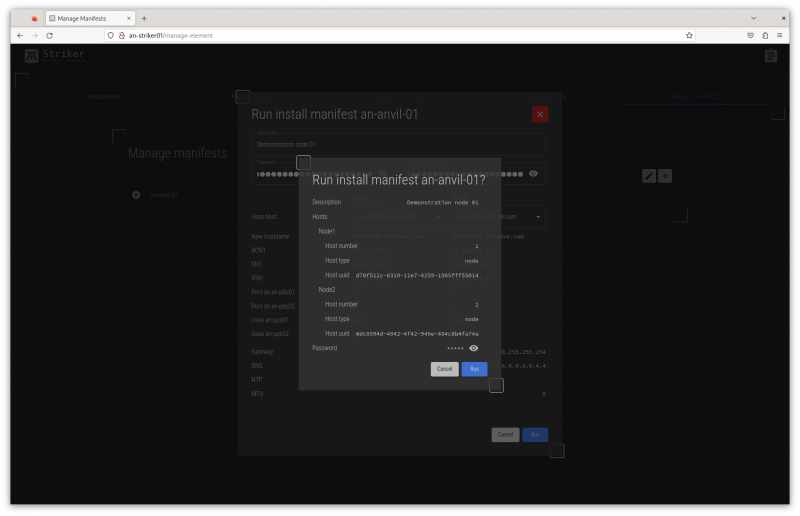

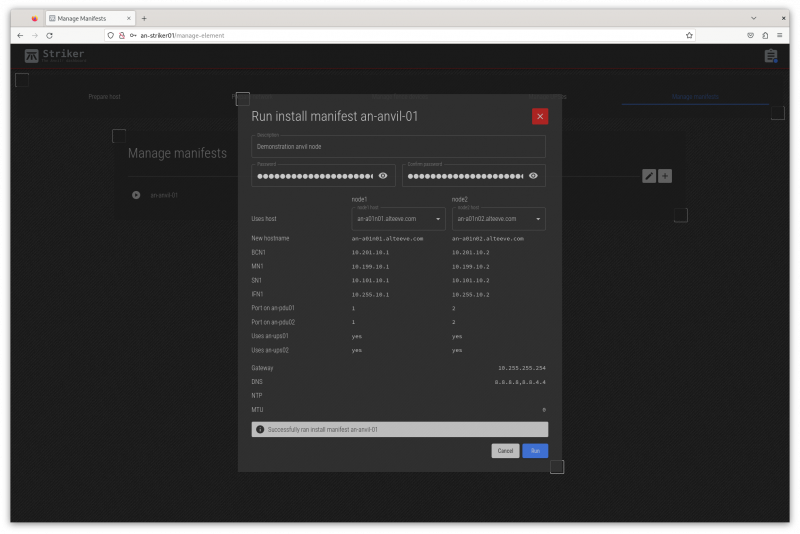

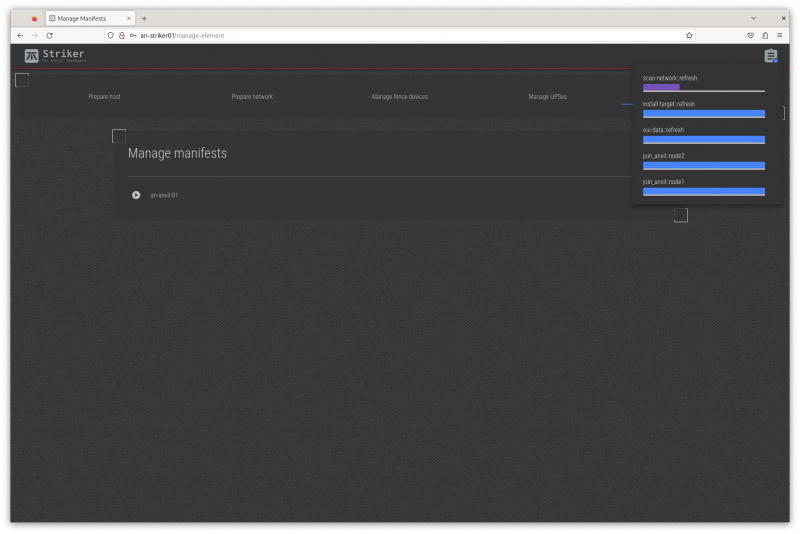

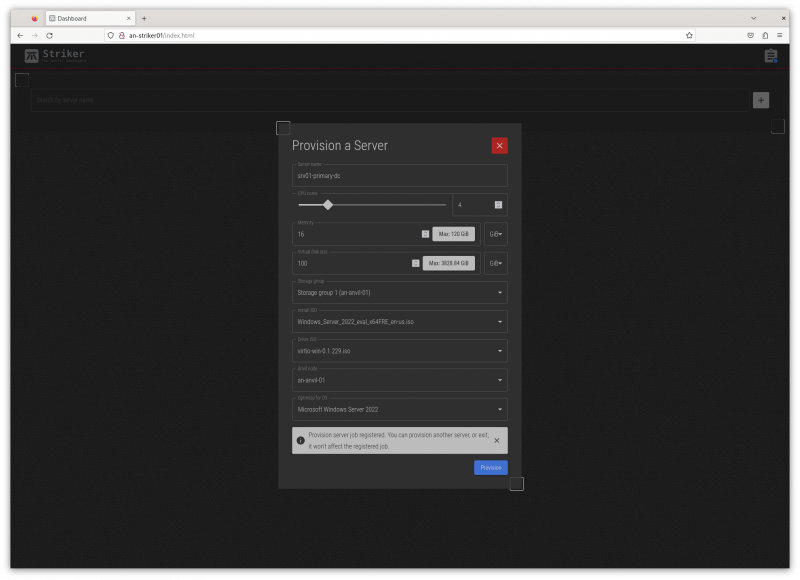

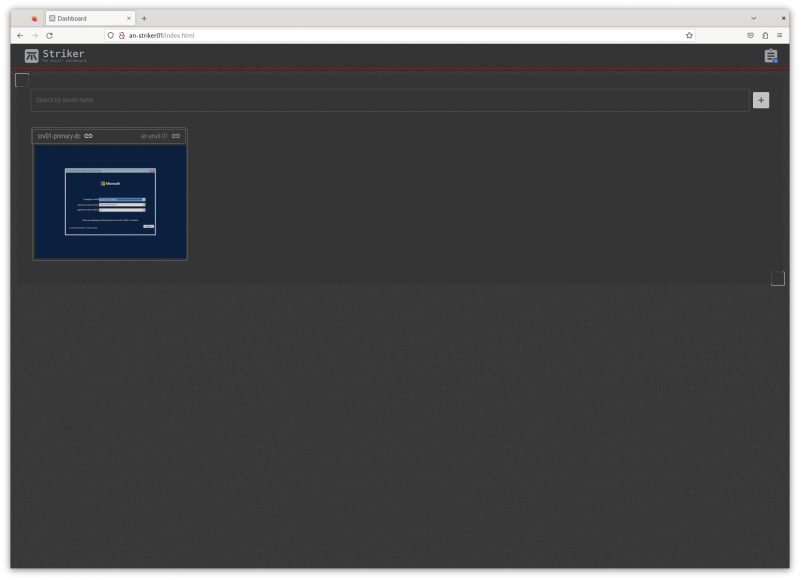

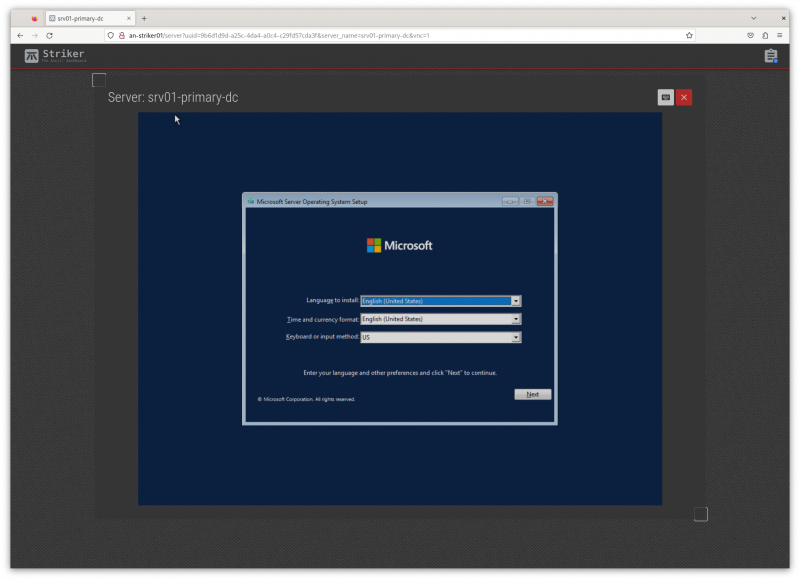

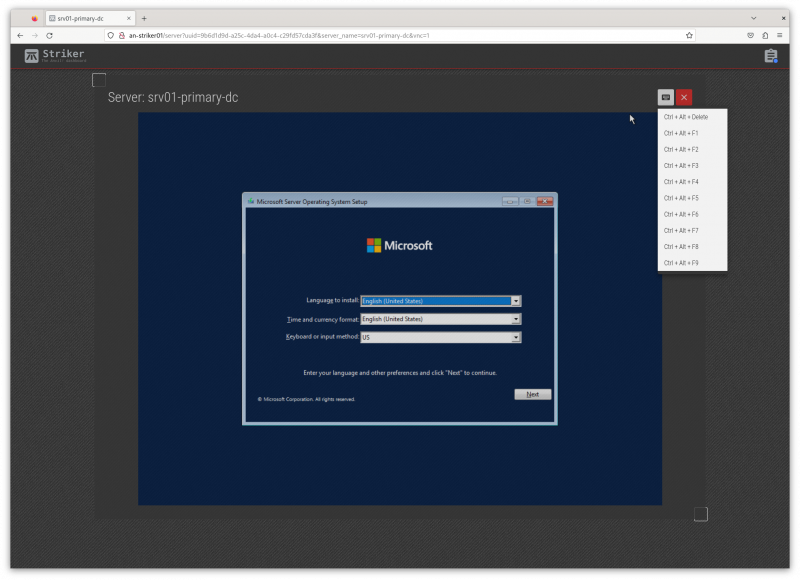

[[image:an-striker01-rhel8-m3-run-install-manifest-01.png|thumb|center|800px|The 'run manifest' menu]] | |||